Axioms of Morality and Ethics in Negative Utilitarianism

Christopher Alexander[1], Mathematics Institute, University of Warwick

Abstract

Everyone has some intuition as to what is morally right and what is morally wrong. However, such intuitions can prove inconsistent when questions of morality become increasingly numerical. One of the most consistent methods of determining which of a given set of decisions is the morally correct one is to use a measure of utility. This has led to the development of Utilitarianism and, subsequently, Negative Utilitarianism.

Unfortunately, although Negative Utilitarianism solves some of the problems posed by Utilitarianism, it introduces additional problems. This article proposes a set of principles, or axioms, that resolve these new problems.

The method of derivation involves considering possible distributions of utility over a sentient population. The proposed axioms will be used in an algorithmic fashion to gauge which decisions are to be considered optimal or moral.

The axioms have been designed, in theory, to cover all scenarios of morality and ethics, specifically taking into account: selflessness, the disparity between individual and collective utility, equality over a population and over time, population size and even scenarios involving abortion. The proposed system of axioms has also been designed to accommodate differing intuitions, adding flexibility without compromising the mathematical structure of the system.

Keywords: Axiom, Distribution, Ethics, Intuition, Moral, Morality, Negative Utilitarianism.

Introduction

The subject matter of 'Morality and Ethics' has been debated by mankind for thousands of years. It has shaped our world in almost every conceivable way, from the forming of major world religions to defining systems of law and trade; it has led men and women to commit some of the most appalling atrocities and yet produce some of the most beautiful wonders. Such a subject plays a subtle role in every aspect of our lives: it forms friendships, it is integral to our social structures and is the difference between living a life of tremendous wealth and a life of unconditional generosity. This subject offers some people a greater depth into the very definition of what it means to live, to die and to be human. With such a subject wielding so much influence over our lives, whether directly or indirectly, understanding its mechanics may prove invaluable to us all.

This article will propose a significant extension to the moral theory of Negative Utilitarianism in order to incorporate a greater number of morally relevant factors and avoid some of its unintuitive consequences. The desired result of this extension will be to provide a more complete and intuitive moral theory than that which is offered by Negative Utilitarianism. More details on the moral theory of Negative Utilitarianism are given in Appendix A. This article will contain aspects of opinion and intuition that may not be shared by the reader although the ethical system proposed here can be adapted to incorporate such differences.

When trying to justify a particular argument or point of view, one must start with a set of statements that are agreed upon by both parties; in Physics these may be the laws of nature, such as the conservation of momentum. Such laws cannot be proved to be absolutely true; they are true only to the extent of experimental confirmation. In Mathematics, axioms are used: statements that are accepted to be true without proof. An example is -1 × -1 = 1; this cannot be proved, it is simply defined this way. The system proposed in this article will use axioms of moral intuition as the basis for its justification and, as a result, as a basis for a complete theory of morality and ethics.

In addition to a set of axioms, this theory will use two assumptions shared by many, if not all utilitarian theories. The first assumption is that the only factors that are considered 'morally relevant' in the field of morality and ethics are the utility/disutility of a sentient population and the distribution of this utility/disutility. The second assumption is that the large and rather complex array of emotions and senses experienced by any sentient being can be combined into a single state at any given time.

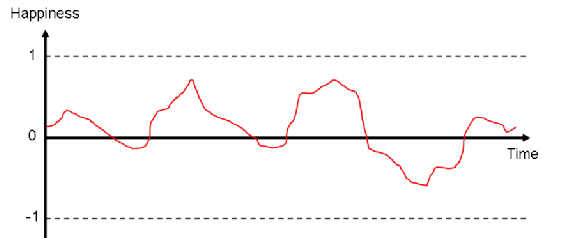

This state will take a value between 1 and -1, with 1 representing the maximum state of wellbeing of the sentient being at a given time and -1 representing the minimum state of wellbeing. Any state in between is a fraction of these extremes, with 0 referring to a state of complete neutrality. This idea of state is what will be referred to as happiness, with negative values representing negative happiness, which will be referred to as suffering. The unit of utility used in this moral theory will be the happiness-second, that is, a certain state of happiness experienced over a certain interval of time; one happiness-second corresponds to constantly experiencing the most favourable state of wellbeing (a state of 1) over the interval of a second.

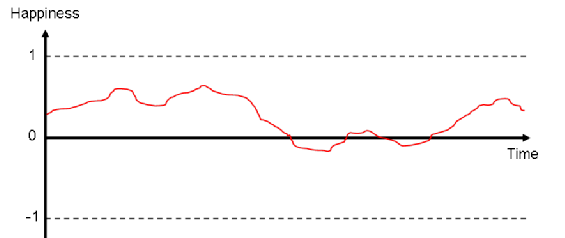

Figure 1: A representation of a possible distribution of happiness over an interval of time.

The utility of this distribution is the quantity represented as the area bounded between the red line and the time axis, with areas below the time axis representing the quantity of negative happiness-seconds. The total utility over this measure of time is the sum of the areas above the time axis minus the areas below it (both bounded by the red line).
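As a rough numerical illustration of the area described above, the following sketch approximates the net utility of a sampled happiness curve with the trapezoidal rule. The function name `total_utility` and the idea of sampling the state at discrete times are assumptions made for illustration; the article does not prescribe how the curve is obtained.

```python
def total_utility(times, states):
    """Approximate the net area under a happiness curve (trapezoidal rule).

    times  -- increasing sample times in seconds (hypothetical sampling)
    states -- happiness state at each sample time, each between -1 and 1
    """
    if len(times) != len(states):
        raise ValueError("times and states must have the same length")
    total = 0.0
    for i in range(1, len(times)):
        width = times[i] - times[i - 1]
        # Area of one trapezoid; it is negative where the curve lies
        # below the time axis, so suffering is subtracted automatically.
        total += 0.5 * (states[i] + states[i - 1]) * width
    return total  # happiness-seconds; a negative result is a net disutility


# Two seconds spent constantly at the maximum state of wellbeing.
two_seconds_at_maximum = total_utility([0, 1, 2], [1.0, 1.0, 1.0])
```

Under these assumptions, `two_seconds_at_maximum` is 2.0 happiness-seconds, and a curve that falls linearly from 1 to -1 over the same interval has a net utility of zero.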

A negative measure of utility is also referred to as a disutility. Furthermore, any living organism that has the biological and psychological capacity to experience positive or negative measures of happiness will be referred to as a conscious living organism, or CLO for short. How such a definition of happiness can be physically measured is beyond the scope of this article, but a possibility is provided in Appendix B.

Defining a universe of discourse

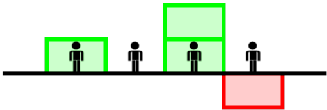

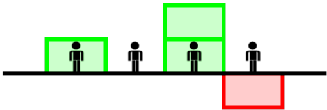

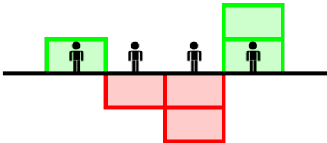

To have a complete moral theory, one needs an ethical system that can decide which of a given set of decisions is the optimal or moral decision(s). By using the two assumptions discussed previously, such decisions can be fully represented as distributions of utility and disutility with all other factors dismissed as either morally irrelevant or indirectly contributing to the distribution of this utility. That is, when a decision is considered, the effect of this decision on a collection of CLOs can be represented by the following type of diagram:

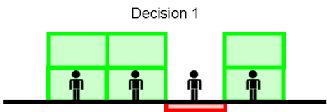

The CLOs are represented along the horizontal axis, with the magnitude of utility represented by the vertical axis. Here, the CLOs are human and so are represented by stickmen. The blocks above the black line represent positive units of utility and the blocks below the black line represent negative units of utility, or units of disutility (the two terms are interchangeable). Each block represents one happiness-year (this unit is used because decisions are likely to be encountered on the macro level). There is no particular reason why the happiness-years need to be in discrete quantities or why the CLOs need to be human, but for the remainder of this article the diagrams will be drawn this way purely for illustrative purposes. So for this particular decision, the outcome is predicted to result in one happiness-year for the 1st (left-most) CLO, the neutral state for the 2nd CLO, two happiness-years for the 3rd CLO and a negative happiness-year for the 4th CLO. The diagram does not represent the change in utility as a result of a decision, as doing so would require the system to set a default decision outcome. Instead, the diagram represents the total utility each CLO will experience from the time the decision is considered to the time that CLO ceases to exist. The diagram will be referred to as a decision outcome and represents all the morally relevant information that needs to be known about the decision.

It should be stated that, as part of the definition of happiness, a living organism is in the neutral state before it becomes classified as a CLO (such as at birth) and after it has died. And so after a CLO has died, the 'decision maker' need not predict the effect of their decision on that CLO, as its utility will be unaltered after that point. Ideally, the decision maker will be able to predict the effect of their decision on every CLO in existence with flawless accuracy. This is impossible, so approximations will have to be made. One important approximation, which in practice will be indispensable, is not to consider the effect of the decision on every CLO in existence, but only on a small, local subset. When a set of decisions is considered, each decision outcome must include all of the CLOs of every other decision outcome, whether or not such CLOs are directly affected by that decision. This is so that the decisions can be compared on an equal footing, with the same CLOs on the horizontal axes.

An example: Which of the following decision outcomes represents the moral decision(s)?

The ethical system proposed in this article must be capable of quantitatively comparing decision outcomes, like the above, but across any number of CLOs. Such a task will require a fair amount of theoretical machinery.

Designing a machinery

The ethical system proposed in this article will be derived in this section through defining a number of axioms that will be used to select the moral decision(s) from a given set of decisions. Where a contradiction occurs, axioms will have to be given a priority of application, turning the system into a form of algorithm.

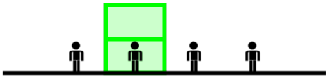

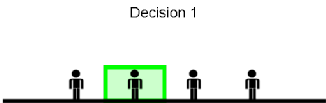

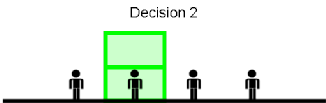

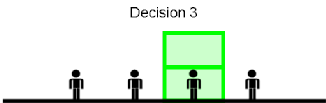

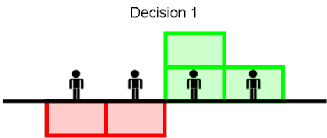

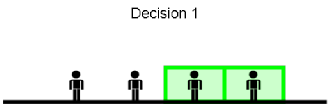

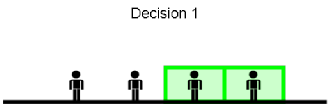

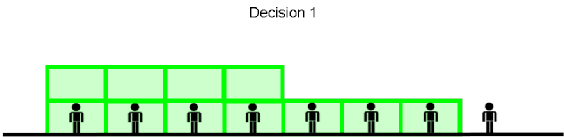

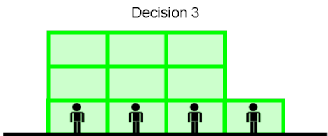

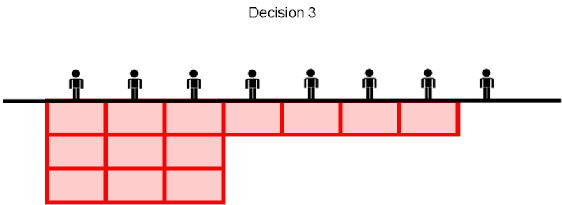

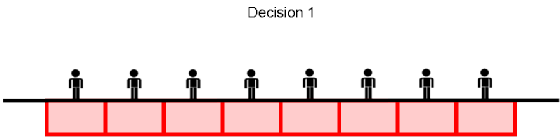

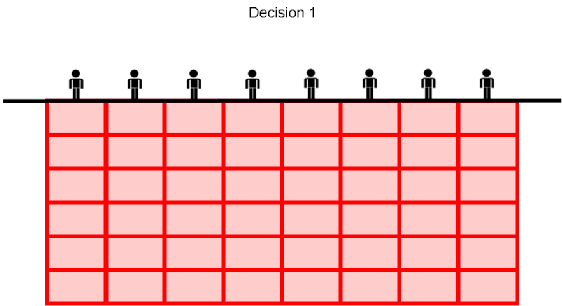

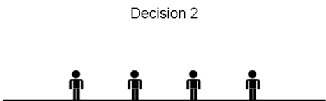

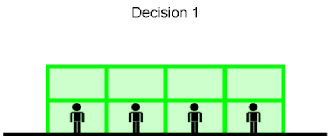

Consider the following decisions; which is the moral decision(s)?

Intuitively, Decisions 2 and 3 would be the moral decisions, that is, the decisions that have the greatest total utility when summed over all CLOs. This intuition will form the first axiom of the ethical system, namely the Axiom of Utilitarianism. Decisions, such as 2 and 3, that have the same distribution of utility but for different CLOs will be called equivalent decisions.
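The selection rule behind the Axiom of Utilitarianism can be sketched in a few lines. The representation of a decision outcome as a list of happiness-year values (one per CLO), the function names and the numbers in `example` are all hypothetical, since the figure values are not reproduced in the text.

```python
def utilitarian_choice(outcomes):
    """Return the decisions with the greatest total utility over all CLOs.

    outcomes -- dict mapping a decision name to a list of happiness-year
                values, one entry per CLO (assumed representation).
    """
    totals = {name: sum(values) for name, values in outcomes.items()}
    best = max(totals.values())
    return sorted(name for name, total in totals.items() if total == best)


def are_equivalent(values_a, values_b):
    """Equivalent decisions: the same distribution, but over different CLOs."""
    return sorted(values_a) == sorted(values_b)


# Hypothetical happiness-year values, not taken from the article's figure.
example = {
    "Decision 1": [1, 0, 1, 0],
    "Decision 2": [2, 0, 1, 0],
    "Decision 3": [0, 2, 0, 1],
}
chosen = utilitarian_choice(example)
```

With these illustrative values, `chosen` contains Decisions 2 and 3, and `are_equivalent` confirms that they share the same distribution of utility.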

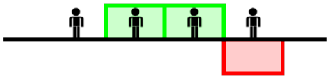

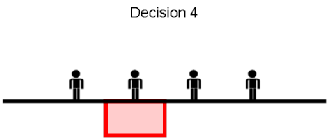

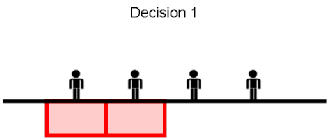

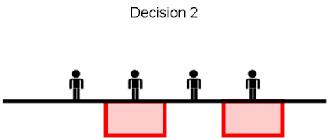

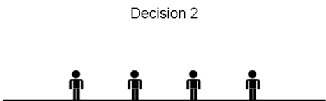

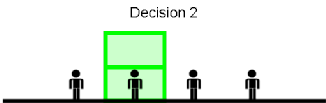

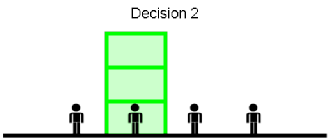

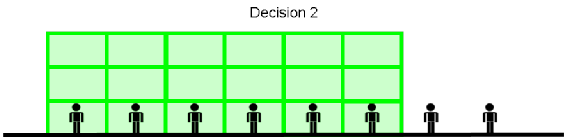

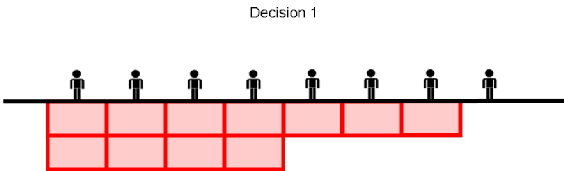

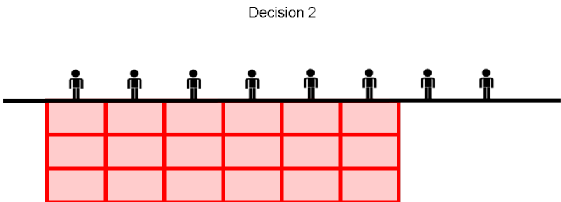

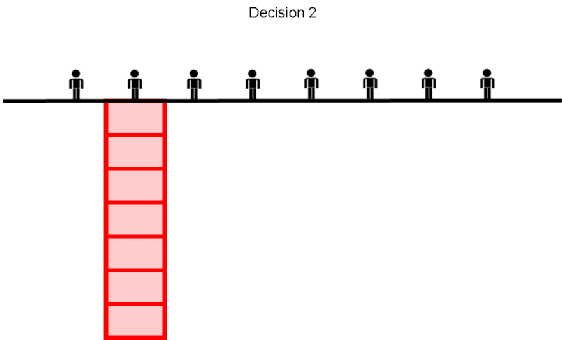

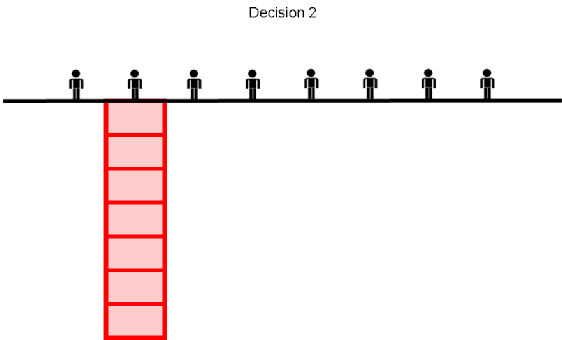

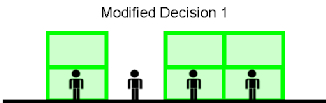

Consider the following decisions; which is the moral decision(s)?

Decision 1 will be considered to be the moral decision as this results in less disutility when summed over all CLOs. This will be another axiom of the ethical system, namely the Axiom of Negative Utilitarianism. In Utilitarianism, disutility is counted as a negative measure of utility. However, in Negative Utilitarianism, utility is not counted as a negative measure of disutility. For example, using just the Axiom of Negative Utilitarianism, the following decisions are both moral:

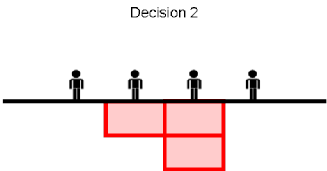

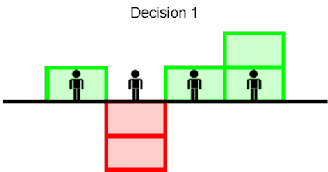

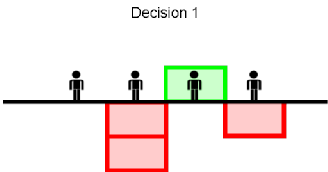

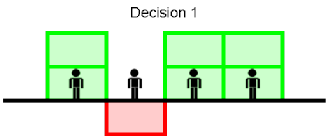

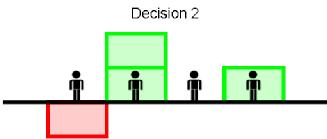

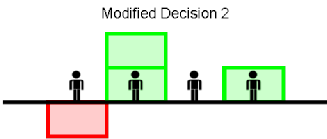

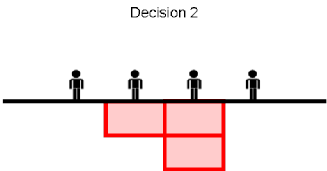

Two problems with the moral theory of Negative Utilitarianism (and indeed other utilitarian theories) are the Pinprick Argument and the Repugnant Conclusion; both of these problems will be avoided by the introduction of some axioms later on in the article, although how these axioms achieve this will not be discussed here. For the reader unfamiliar with such problems, see Ord (2013) and Arrhenius et al. (2014) respectively. So far the ethical system is formally based on two axioms: to increase happiness-years and to reduce negative happiness-years. Essentially, these are 'increase happiness' and 'reduce suffering'. This is intuitive, but consider now the following decisions:

Decision 1 has a total utility of 2 happiness-years but also a total disutility of -2 happiness-years. Now Decision 2 has a reduced total utility but it also has a reduced total disutility. So using both previous axioms together would result in a contradiction. The solution is to prioritise one axiom over the other. The axiom that this system will prioritise is the Axiom of Negative Utilitarianism. The reason for this is, roughly, that no increase in positive happiness for the remaining CLOs could ever justify an amount of suffering and, in addition, that positive happiness can be thought of as a luxury whereas suffering has a priority to be reduced; see Smart (1958). The downside of this selection is that there will be situations in which even an infinitesimally small amount of suffering can decide between a distribution with a lot of utility and a distribution with very little, for example:

In this case, Decision 2 will be favoured by the ethical system and will always be favoured no matter how much utility is added to Decision 1 (to all CLOs except the second to the right). This argument forms part of the Pinprick Argument and is used in opposition to Negative Utilitarianism, as is clearly described in Ord (2013).
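The priority of the Axiom of Negative Utilitarianism over the Axiom of Utilitarianism amounts to a lexicographic comparison: total suffering is minimised first, and total happiness is only used to break ties. The sketch below assumes outcomes are lists of happiness-year values, and the `pinprick` numbers are invented to mimic the situation described above.

```python
def disutility(values):
    """Total suffering in an outcome, as a non-negative magnitude."""
    return sum(-v for v in values if v < 0)


def utility(values):
    """Total positive happiness in an outcome."""
    return sum(v for v in values if v > 0)


def moral_decisions(outcomes):
    """Minimise disutility first; maximise utility only among the ties."""
    keys = {name: (disutility(v), -utility(v)) for name, v in outcomes.items()}
    best = min(keys.values())  # tuples compare lexicographically
    return sorted(name for name, key in keys.items() if key == best)


# Hypothetical values: one CLO in Decision 1 suffers a tiny amount.
pinprick = {
    "Decision 1": [100, 100, -0.001, 100],
    "Decision 2": [1, 0, 0, 0],
}
favoured = moral_decisions(pinprick)
```

Here `favoured` is Decision 2, and it stays that way however large the positive entries of Decision 1 become, which is the behaviour the Pinprick Argument objects to.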

Consider now two distributions of utility over the lifespan of a single CLO:

Figure 2: A distribution of utility that results in some intervals of disutility.

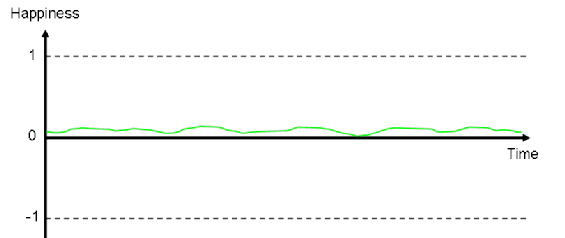

Figure 3: A distribution of utility in which no disutility is experienced.

Figure 2 has a greater total utility than Figure 3 but also has greater disutility. Negative Utilitarianism would favour Figure 3, although for a single CLO, this seems morally unintuitive. This is because most people, if not the entire human population, will at some point in their lives undergo short-term suffering in order to maximise their long-term happiness. Revising for examinations is a clear example. So it seems that, given the choice to select the morally favourable outcome for a single CLO, total utility is favoured most. This will be called the Axiom of Inconsequence and allows this system to avoid part of the Pinprick Argument. This axiom does not contradict the two previous axioms, as it is concerned only with the distribution of utility of a single CLO rather than of the collection of CLOs. The axiom is so named because it means the distributions in Figures 2 and 3 can each be summarised by a single value, the total utility. This modification simplifies the ethical system significantly.
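A minimal sketch of this summarising step follows, assuming a CLO's distribution of utility is given as a list of utilities over successive intervals. The interval values for the two figures are invented for illustration and are not read off the original plots.

```python
def lifetime_total(profile):
    """Summarise one CLO's utility-over-time profile by its total utility."""
    return sum(profile)


def preferred_profile(profiles):
    """For a single CLO, favour the profile with the greatest total utility."""
    return max(profiles, key=lambda name: lifetime_total(profiles[name]))


# Hypothetical utilities over four successive intervals of one CLO's life.
single_clo = {
    "Figure 2": [-1, -1, 4, 4],  # early suffering, larger later reward
    "Figure 3": [1, 1, 1, 1],    # no suffering at all
}
choice = preferred_profile(single_clo)
```

With these numbers `choice` is Figure 2 (a total of 6 against 4), even though Figure 2 contains intervals of disutility.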

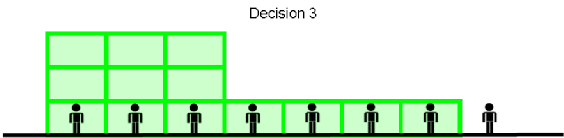

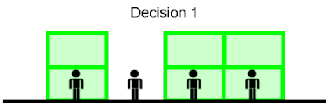

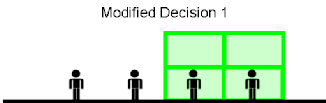

Consider the following decisions; which is the moral decision(s)?

It would seem intuitive that Decision 1 is the moral decision as the utility here is distributed evenly. Such intuition possibly arises because of the natural desire for fairness and equality. But consider then the following example:

Now this situation may seem less morally intuitive because if the system favours Decision 1 and prioritises equality over total utility, then to be consistent, the second to the left CLO in Decision 2 could be given 10, 100 or even a thousand times more utility than the total utility in Decision 1. But if the system favours Decision 2 then it is possible to end up with a situation in which one CLO is favoured to experience 101 happiness-years (with the other CLOs in the neutral state) over 100 CLOs each experiencing a single happiness-year. This system will prioritise equality (Decision 1) over total utility (Decision 2), but it is worth stating again that the reader is not expected to replicate this intuition (or indeed the intuition for any of the axioms). However, the precise meaning of equality has not been stated, as the following example will demonstrate.

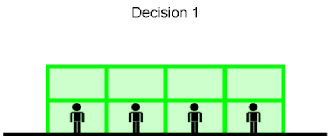

Consider the following decisions; which is most equal and hence, the moral decision?

Without a precise definition of equality it can be difficult to use one's intuitions to decide what is regarded as fair and what is not. The reader is encouraged to ponder this particular problem prior to reading the convention that will be adopted by this system:

1. Select all decision outcomes that predict the most CLOs to be on a strictly positive (not negative or neutral) happiness-year value.

2. The CLOs predicted to be on a strictly positive happiness-year value will be called spCLOs.

3. Of the selected decisions, now select all decisions in which the happiness-year value of the spCLO with the smallest predicted happiness-year value is largest.

4. Of the remaining selected decisions, there will be at least one CLO of which its happiness-year value is the same across all such decision outcomes. Such CLOs will be called eCLOs.

5. Consider the remaining selected decisions without including the eCLOs and repeat Step 1.
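The procedure above can be read as an iterative filter, sketched below. Several choices here are assumptions rather than part of the article: outcomes are lists of happiness-year values in a fixed CLO order, eCLOs are matched by position, and the loop stops early if no eCLO can be found. The values in `example` are hypothetical.

```python
def positive_equality(outcomes):
    """Return the names of the outcomes kept by the five-step procedure.

    outcomes -- dict mapping a decision name to a list of happiness-year
                values, one per CLO, in the same CLO order for each decision.
    """
    remaining = dict(outcomes)
    n_clos = len(next(iter(remaining.values())))
    active = list(range(n_clos))  # CLOs not yet set aside as eCLOs

    while len(remaining) > 1 and active:
        # Steps 1-2: keep the outcomes with the most spCLOs.
        counts = {name: sum(1 for i in active if values[i] > 0)
                  for name, values in remaining.items()}
        most = max(counts.values())
        remaining = {n: v for n, v in remaining.items() if counts[n] == most}

        # Step 3: keep the outcomes whose worst-off spCLO is best off.
        if most > 0:
            floors = {name: min(values[i] for i in active if values[i] > 0)
                      for name, values in remaining.items()}
            highest = max(floors.values())
            remaining = {n: v for n, v in remaining.items()
                         if floors[n] == highest}

        # Step 4: eCLOs have the same value in every remaining outcome.
        reference = next(iter(remaining.values()))
        e_clos = {i for i in active
                  if all(v[i] == reference[i] for v in remaining.values())}
        if not e_clos:
            break  # assumption: stop if the procedure cannot continue

        # Step 5: drop the eCLOs and repeat from Step 1.
        active = [i for i in active if i not in e_clos]

    return sorted(remaining)


# Hypothetical outcomes over eight CLOs (left-most CLO first).
example = {
    "Decision 1": [2, 2, 2, 2, 1, 1, 1, 0],
    "Decision 2": [3, 3, 3, 1, 1, 1, 0, 0],
    "Decision 3": [3, 3, 1, 1, 1, 1, 1, 0],
}
most_equal = positive_equality(example)
```

For these values the first pass discards Decision 2 (six spCLOs against seven), the CLOs in positions 5 to 8 are set aside as eCLOs, and the second pass leaves Decision 1 in `most_equal`.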

This procedure will be applied to the example above.

1. Decisions 1 and 3 predict 7 CLOs to have a strictly positive happiness-year value but Decision 2 only predicts this for 6 CLOs. So Decision 2 is discarded.

2. The spCLOs in Decisions 1 and 3 are all but the last (right-most) CLO.

3. The smallest predicted happiness-year value of all spCLOs in Decision 1 is one happiness-year. The smallest predicted happiness-year value of all spCLOs in Decision 3 is also one happiness-year. So both Decisions 1 and 3 are selected.

4. The eCLOs of Decisions 1 and 3 are the CLOs in positions 5 to 8 (1 being the left-most position).

5. The decision outcomes now become:

So by repeating Step 1, it is clear that Decision 1 is most equal (by the definition given by the procedure) and hence is the moral decision.

This procedure will form an axiom, namely the Axiom of Positive Equality.

However, there has been no mention of negative happiness-years in this definition of equality; this is because they are irrelevant for this particular axiom. An identical axiom will be defined but for decision outcomes involving negative happiness-years (the procedure is identical, just flip the decision outcomes about the horizontal axis). This is the Axiom of Negative Equality. The reader should notice that both axioms of equality and the axioms of Utilitarianism and Negative Utilitarianism are all in contradiction with one another, so the system must now prioritise all axioms in relation to one another (this means that, should the axiom with the highest priority be unable to decide between a reduced set of decisions, the axiom with the next priority below it becomes the deciding axiom). But first, consider an example outlining how the Axiom of Negative Equality is applied.

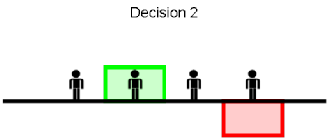

Consider the following decisions; which is most equal and hence, the moral decision?

As stated previously, the procedure is the same as for the Axiom of Positive Equality except the decision outcomes must first be flipped about the horizontal axis. The reader should notice that flipping the decision outcomes would yield the same situation as before and hence Decision 1 is considered most equal.

The system will make the following priorities:

1. The Axiom of Inconsequence

2. The Axiom of Negative Utilitarianism

3. The Axiom of Negative Equality

4. The Axiom of Positive Equality

5. The Axiom of Utilitarianism

The priority of (1) over the rest is because it is involved with setting up the decision outcomes (the axioms to come will also be of this form). The priority of (2) and (3) over (4) and (5) is due to suffering being regarded as so much more of a moral priority that even the distribution of negative happiness-years overrides increasing utility. The priority of (4) over (5) is a direct result of how equality is defined by this system. The most difficult justification is the priority of (2) over (3). The reader is strongly recommended to spend a good portion of time contemplating such priorities as they form a critical part of the mechanics of this system. As for the justification of (2) over (3), the following example will show how this may seem morally unintuitive:

Now such a priority leads to Decision 2 being considered moral. This clearly seems unfair at first glance, as it directly opposes the general principle used in the derivation of the Axiom of Positive Equality. However, unlike with happiness, the distribution of suffering is treated as insignificant in comparison to its quantity. The only justification for allowing such a selection is to think of suffering as so universally undesirable that sharing such a burden is acceptable only when doing so does not increase the burden overall. It must be stated that the above situation is probably the largest theoretical dilemma with this system, but the priority of (2) over (3) may seem intuitively indispensable when considering the following set of decisions:

Given the current priority of the axioms, Decision 2 is the moral decision but this would not be the case if (3) had priority over (2).

There are still four more factors that need to be incorporated into this system before it is complete: selflessness, past history, population and abortion. Such factors do not alter the mechanics of the selection process; instead they influence how the decision outcomes should be represented, and because of this, they are given priority over (2), (3), (4) and (5). However, such factors can still lead to the selection of a completely different decision from the one selected using only the previous axioms, and so they form a critical component of this ethical system nonetheless.

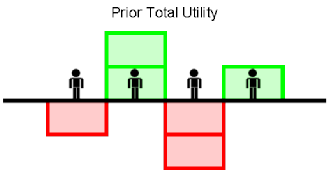

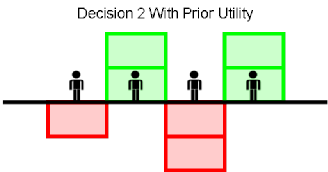

In calculation of the decision outcomes thus far, the definition was to calculate the predicted utility from the moment the decisions were available to the moment each CLO had ceased to exist (clearly very rough approximations will be required). But it is possible to imagine situations where the history of the CLO warrants a greater share of future utility. Consider the total utility of the following CLOs prior to evaluating the decision outcomes:

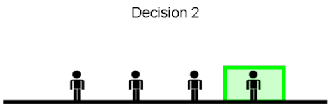

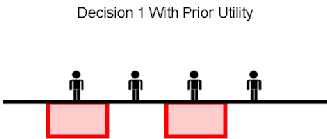

Given this information, which of the following decisions is the moral decision(s)?

Without considering past history it would clearly seem Decision 2 is the moral decision. However, adding each CLO's total utility prior to the decision to each of the decision outcomes yields the following distribution (this time predicting total utility across the entire life span of all the CLOs involved):

Applying the previous axioms now yields Decision 1 to be the moral decision. It may seem counter-intuitive at first to cause suffering to a CLO that has experienced a lot of prior happiness in order to give a small amount of happiness to a CLO that has suffered. The system in this case is demanding that what is morally important is the total utility at the end of a CLO's life. The most convincing argument in support of this is that due to the mechanics of this new axiom, it will only be possible to cause suffering up to a certain point and that it justifies scenarios of causing the happy to be less happy in order to reduce the suffering of those who have suffered much before. This axiom is creatively named the Axiom of Prior Utility.
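The adjustment made by the Axiom of Prior Utility is an element-wise sum, sketched below. The list representation, the function names and all numbers are hypothetical; they are chosen only to reproduce the kind of reversal described above, using total suffering as the deciding quantity.

```python
def add_prior_utility(prior, outcomes):
    """Add each CLO's utility before the decision to every decision outcome."""
    adjusted = {}
    for name, values in outcomes.items():
        if len(values) != len(prior):
            raise ValueError("each outcome needs one value per CLO")
        adjusted[name] = [p + v for p, v in zip(prior, values)]
    return adjusted


def disutility(values):
    """Total suffering in an outcome, as a non-negative magnitude."""
    return sum(-v for v in values if v < 0)


# Hypothetical data: the first CLO has been happy, the second has suffered.
prior = [5, -3]
outcomes = {
    "Decision 1": [-1, 1],  # takes from the happy CLO to help the other
    "Decision 2": [0, 0],   # leaves both CLOs in the neutral state
}
lifetime = add_prior_utility(prior, outcomes)
```

Before the adjustment Decision 2 has the least disutility (0 against 1); afterwards `lifetime` is `[4, -2]` for Decision 1 and `[5, -3]` for Decision 2, so Decision 1 now carries less suffering.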

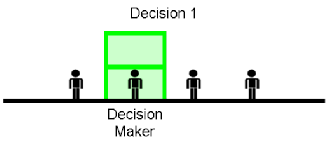

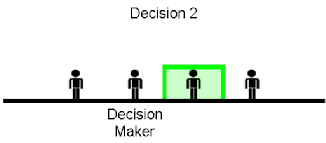

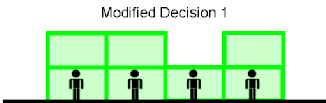

The next axiom to be considered is the Axiom of Selflessness. When considering decision outcomes in which the decision maker is one of the CLOs, the decision maker must consider the decision outcomes without the CLO representing the decision maker. So in the following example, Decision 2 is considered to be the moral decision.

Clearly there will be situations where the decision maker may suffer significantly for the benefit of another CLO; this makes this axiom – and hence the ethical system as a whole – a very difficult one to follow completely. However, some may find such an axiom leads to a very warm and socially applicable morality.

But what about the selflessness of other CLOs? Surely if the decision maker is able to communicate with the considered CLOs, such CLOs should be able to request that a decision be made with less or no regard to their predicted utility. This system will include such communication, directly or indirectly. However, the decision maker is not required to honour such requests. The mechanics of this idea are quite simple: each CLO can opt to have their predicted utility artificially increased by a positive value of happiness-years, and this value must be added to that CLO's predicted utility in all decision outcomes. By adding this value, such a CLO is able to influence the selection towards a decision that is less favourable to that CLO. It is, however, mathematically impossible for a CLO to abuse this axiom to their benefit (in terms of utility), but the proof has been omitted from this article due to its length. This axiom will be called the Axiom of Communicated Selflessness.

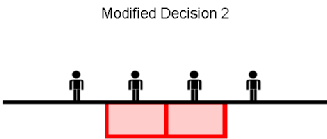

Consider the following decisions:

Now suppose the CLO second from the left decides to opt for a selflessness value of one happiness-year, then the previous decisions should be considered as follows:

So the selfless nature of this CLO has resulted in Decision 1 being considered the moral decision. As a result, this CLO is predicted to suffer by one happiness-year in order that the other CLOs each enjoy two happiness-years. The use of this axiom in this system allows selfless behaviour to be acknowledged as moral. Both the Axiom of Selflessness and the Axiom of Communicated Selflessness help to avoid the Pinprick Argument as it is likely a CLO will opt to suffer a small amount if such results in an overwhelming increase of positive happiness-years for their fellow CLOs.
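Both selflessness axioms change how the decision outcomes are represented before any selection takes place. The sketch below assumes list-valued outcomes indexed by CLO position; the function names and the numbers are hypothetical, picked to resemble the scenario just described.

```python
def drop_decision_maker(outcomes, maker_index):
    """Axiom of Selflessness: ignore the decision maker's own utility."""
    return {name: [v for i, v in enumerate(values) if i != maker_index]
            for name, values in outcomes.items()}


def apply_selflessness(outcomes, offsets):
    """Axiom of Communicated Selflessness.

    offsets -- dict mapping a CLO index to the positive number of
               happiness-years that CLO asks to have added in every outcome.
    """
    return {name: [v + offsets.get(i, 0) for i, v in enumerate(values)]
            for name, values in outcomes.items()}


def disutility(values):
    """Total suffering in an outcome, as a non-negative magnitude."""
    return sum(-v for v in values if v < 0)


# Hypothetical outcomes; the second CLO from the left opts for an offset of 1.
outcomes = {
    "Decision 1": [2, -1, 2, 2],
    "Decision 2": [0, 0, 0, 0],
}
adjusted = apply_selflessness(outcomes, {1: 1})
```

In this example the offset removes the one happiness-year of suffering that previously ruled out Decision 1, so both adjusted outcomes have zero disutility and the lower-priority axioms are left to decide between them.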

The next axiom enables this ethical system to avoid the consequences of the Repugnant Conclusion and the reader would benefit from reading the introduction to Arrhenius et al. (2014). This axiom is concerned with the ethics of creating new CLOs which, in the human case, means having children. The moral intuition used is that it is morally impartial to create a new CLO providing such a CLO is predicted to end their existence with a positive total utility and that it is intuitively immoral to create a CLO that is predicted to have a negative total utility by the end of its existence. However, such a decision should also depend on the effect such creation will have on the current population of CLOs and this will be incorporated by the following procedure:

- If a CLO is created as a result of any one of the decisions it must be represented on all decision outcomes. Such CLOs will be called nCLOs.

- If a decision includes an nCLO that is predicted to have a strictly positive total utility then that decision is to be modified by setting the predicted total utility of that nCLO to be neutral.

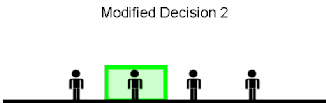

An example of this procedure is given below:

Now if the leftmost CLO is created as a result of either decision (in this case by both as otherwise their predicted total utility would be represented as neutral for one of the decisions) then the decisions will be modified in the following way:

This axiom will be called the Axiom of Creation and it directly implies that the production of utility is not what is favoured in this system – what is favoured is the increase of the total utility of the CLOs that currently exist along with the reduction of negative total utility of future generations.
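The modification performed by the Axiom of Creation can be sketched as follows, assuming list-valued outcomes and a set of positions marking the nCLOs. The function name and the example values are illustrative only.

```python
def apply_creation(outcomes, new_clo_indices):
    """Set any strictly positive predicted utility of an nCLO to neutral.

    new_clo_indices -- positions of the CLOs that would be created by at
                       least one of the decisions (the nCLOs).
    """
    return {
        name: [0 if i in new_clo_indices and v > 0 else v
               for i, v in enumerate(values)]
        for name, values in outcomes.items()
    }


# Hypothetical outcomes in which the left-most CLO is an nCLO.
outcomes = {
    "Decision 1": [3, 1, 1],
    "Decision 2": [-1, 2, 2],
}
modified = apply_creation(outcomes, {0})
```

The positive utility of the nCLO in Decision 1 is reset to the neutral state, while its negative utility in Decision 2 is kept, so creating a CLO that is predicted to suffer still counts against a decision.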

The final axiom to be considered is the Axiom of Potential Consciousness and this axiom is directly concerned with the moral value attached to a living organism (LO) that is predicted to grow into a CLO. And so this axiom, along with the Axiom of Creation, is directly related to the morality of abortion. It is quite clear that such a topic has found much disagreement and the reader is not expected to follow the particular intuition given here. This axiom can be easily modified to adopt either pro-life or pro-choice stances accordingly. The stance taken by this system is an intermediate one: it defines the moral value of an LO that is developing into a CLO to be equal to that of another CLO multiplied by the probability that the LO will successfully grow to become a CLO. The mechanics of this can be applied as follows:

- If an LO is in development to become a CLO then represent this LO as a CLO on all decision outcomes, including its predicted total utility.

- Modify each decision outcome by multiplying the predicted total utility of all LOs by the probability that such LOs will survive development to becoming CLOs.
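The two steps above reduce to a probability weighting of the relevant entries, sketched here under the assumption that outcomes are lists of happiness-year values and that survival probabilities are supplied per position. The names and numbers are hypothetical.

```python
def apply_potential_consciousness(outcomes, survival_probability):
    """Weight each LO's predicted total utility by its chance of survival.

    survival_probability -- dict mapping the position of each LO to the
                            probability (0 to 1) that it becomes a CLO;
                            positions not listed are treated as CLOs.
    """
    return {
        name: [v * survival_probability.get(i, 1.0)
               for i, v in enumerate(values)]
        for name, values in outcomes.items()
    }


# Hypothetical outcomes; the second entry from the right is an LO
# with a survival probability of 0.5.
outcomes = {
    "Decision 1": [1, 1, 2, 0],
    "Decision 2": [1, 1, -2, 1],
}
weighted = apply_potential_consciousness(outcomes, {2: 0.5})
```

Setting the probability to 1 or to 0 for every LO would correspond, roughly, to the two alternative stances mentioned above; that mapping is an interpretation, not something the article specifies.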

An example of this procedure is given below:

Now if the second CLO from the right is actually an LO with probability of survival 0.5 then the decisions will be modified in the following way:

And thus concludes the derivation of the axioms. The priorities of the last 5 axioms will now be incorporated as follows:

1. The Axiom of Inconsequence

2. The Axiom of Prior Utility

3. The Axiom of Selflessness

4. The Axiom of Communicated Selflessness

5. The Axiom of Potential Consciousness

6. The Axiom of Creation

7. The Axiom of Negative Utilitarianism

8. The Axiom of Negative Equality

9. The Axiom of Positive Equality

10. The Axiom of Utilitarianism

Some priorities are not particularly vital but are useful purely for computational purposes.

So given any set of decision outcomes, this ethical system can now determine which decision(s) are considered moral and which are considered immoral. This is done by modifying and discarding decision outcomes by applying the axioms in order of priority. It can be proved that all decisions that are considered moral in a given set of decision outcomes are equivalent, but the proof will not be shown in this article. And so the moral decisions are unique (up to equivalence) when all the axioms have been applied, which makes it possible to speak of the moral decision. The ethical system is now complete.
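The overall structure, modify first and then discard in order of priority, can be sketched as a small pipeline. This is only an outline under stated assumptions: `run_system` and its arguments are hypothetical names, only two of the selection axioms are included as stand-ins (the equality axioms are omitted for brevity), and the example values are invented.

```python
def run_system(outcomes, modifiers, selectors):
    """Apply representation axioms, then selection axioms in priority order."""
    for modify in modifiers:  # these axioms rewrite the outcomes
        outcomes = modify(outcomes)
    remaining = dict(outcomes)
    for select in selectors:  # these axioms discard outcomes
        if len(remaining) <= 1:
            break
        remaining = select(remaining)
    return sorted(remaining)


def least_disutility(outcomes):
    """Stand-in for the Axiom of Negative Utilitarianism."""
    score = {n: sum(-v for v in vals if v < 0) for n, vals in outcomes.items()}
    best = min(score.values())
    return {n: outcomes[n] for n in outcomes if score[n] == best}


def greatest_utility(outcomes):
    """Stand-in for the Axiom of Utilitarianism."""
    score = {n: sum(v for v in vals if v > 0) for n, vals in outcomes.items()}
    best = max(score.values())
    return {n: outcomes[n] for n in outcomes if score[n] == best}


def creation_of_first_clo(outcomes):
    """Stand-in for the Axiom of Creation, treating position 0 as an nCLO."""
    return {n: [0 if i == 0 and v > 0 else v for i, v in enumerate(vals)]
            for n, vals in outcomes.items()}


# Hypothetical outcomes over three CLOs.
outcomes = {
    "Decision 1": [5, -1, 0],
    "Decision 2": [4, 0, 1],
    "Decision 3": [0, 0, 2],
}
selection_only = run_system(outcomes, [], [least_disutility, greatest_utility])
with_creation = run_system(outcomes, [creation_of_first_clo],
                           [least_disutility, greatest_utility])
```

In this example `selection_only` is Decision 2, whereas `with_creation` is Decision 3, which illustrates how an axiom that only changes the representation can still change the final selection.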

Closing remarks

The proposed system does have a number of drawbacks, one of which is the vast amount of computation required to select the moral decision in even the simplest of situations. This will make the system difficult to put into practice (although arguably not much more so than other popular utilitarian theories). Another problem is the subjective nature of the axioms and of the utility that is employed; unlike in Mathematics, where the axioms are more or less agreed upon, the ethical axioms proposed in this article will probably find disagreement.

On the other hand, the system is adaptable, in the respect that if there is little information available or little time to input the information, then approximations can be made and the moral decision can be quicker to calculate. If time or computation is not an issue, the system can incorporate all the information at hand and guarantee a solution to even the most complicated of moral dilemmas; and the more information that is given, the greater the accuracy in selecting the moral decision. The system may receive criticism due to its complexity, but such a system is arguably one of the simplest systems that can be constructed which incorporates all the moral factors highlighted in this article.

The greatest strength of this system, however, is its bottom-up approach to modelling morality and ethics. Such an approach can have immediate applications to Medical Ethics (Gandjour et al., 2003) and to Disaster Relief Prioritisation, but most prominently, to Artificial Intelligence. When an ethical framework is to be programmed it will need to be roughly consistent with the general intuitions of modern society as well as having an algorithmic structure. Current ethical systems cannot do this (Shulman et al., 2009), but the system proposed in this article may satisfy such requirements. A few natural extensions to this article would be to research how artificially intelligent systems can be programmed with a socially intuitive form of morality, to what extent the general population agrees with such axioms and a thorough strategy for deriving consequences and applications of the axioms.

Above all, I hope the reader will be able to exploit the proposed system in some way, whether by using the general structure to support and simplify their stance on morality, by improving the consistency with which their ethical decisions are justified, or even by following the system as given and leading a life consistent with the ideals of Effective Altruism.

Acknowledgements

I would like to thank the following people for their help with, and feedback for, the previous versions of this research: Brian Tomasik, Emmanuel Aroge, Frances Lasok, Gabriel Harper, Gil Rutter, Mathew Billson, Nathan Price, Nikola Yurukov, Rebecca Lindley and Tomi Francis.

List of figures

Figure 1: A representation of a possible distribution of happiness over an interval of time.

Figure 2: A distribution of utility that results in some intervals of disutility.

Figure 3: A distribution of utility in which no disutility is experienced.

Appendices

Appendix A

To best explain the moral theory of Negative Utilitarianism, the motivation and reasoning for its development will be introduced beforehand.

Consider two high-rise buildings, A and B, both filled with a finite set of items and living creatures, including people. Suppose now that both buildings were simultaneously set alight in some act of violence, to which the local fire brigade responds. Upon arriving, the chief firefighter makes two disheartening observations: that the items inside each building are barricaded in, and that the brigade commands only enough water to extinguish one of the two fires. Hence, one of the buildings, and all its contents, will unavoidably burn to the ground. The chief firefighter does, however, have a list of all the items in both buildings at the time of the fire; given this information, which building should be saved? A good moral theory will be one that can answer this question and do so for every possible pair of given sets. To answer such questions would require a currency, or what is commonly referred to as a utility, for each element of the sets.

If the choice of utility is simply the number of people surviving, then factors such as age, wellbeing and even the presence of animals will all be dismissed as morally irrelevant. Would saving a child take priority over an elder? Would saving a dog matter? And would saving someone in a permanent state of agony even be of any help?

The utility that will be used in this article, which is similar to that of other utilitarian theories, is based solely on happiness and suffering. If one keeps asking 'why?' in many trivial questions of morality, eventually one will probably end up with the statements: happiness is favourable and suffering is unfavourable. Here is an example:

Why should I not push Tom off a tall ladder?

Because this may cause Tom to fall and break some of his bones.

Why should I not break some of Tom's bones?

Because breaking Tom's bones will give Tom a lot of pain and possibly a disability.

Why should I not give Tom a lot of pain and possibly a disability?

Because this causes Tom to suffer and suffering is unfavourable.

Now if asked 'why is suffering unfavourable?', there could be no answer other than that it is considered self-evident that suffering is unfavourable. Such a statement is an example of an axiom, and this is explained in paragraph three of the introduction.

But then what about more complicated examples, questions involving freedom, honesty and human rights? Should such a utility not involve these measures as well? Many utilitarian theories have used the assumption that these more complicated structures are not to be included as they can be justified on the basis of promoting happiness and reducing suffering (so they are less fundamental).

For example, a population that is generally more honest than another may be significantly happier than the other population and so honesty is then favourable because it indirectly contributes to an increase in happiness. There are no examples where (when all other factors are fixed) a greater amount of happiness is less morally favourable (due to it being an axiom); however, there are plenty of examples where honesty is regarded as morally unfavourable. Would one lie to save an innocent life? More precisely, most utilitarian theories have used the assumption that, fundamentally, all questions of morality and ethics can be reduced to considering the happiness and the suffering of sentient beings. Now such is quite an assumption but is one that will not be justified further in this article (as such could make up the content of a whole book). Therefore, the reader is encouraged to spend a good portion of time convincing themselves of this conclusion. Here is an example:

Why should I not push Tom off a tall ladder?

Because Tom has a right to his personal safety.

Why does Tom have a right to his personal safety?

Because giving people like Tom such rights will, on average, promote greater happiness and reduce suffering in a population.

It may be plausible to use rights as the basis for a moral theory, but an alert reader will notice that rights can conflict: if, for example, the use of CCTV cameras is enforced, the right to personal privacy is breached, and if it is not enforced, the right to personal security is breached. So using rights as the basis of a moral theory has some clear problems. Even though happiness and suffering are more fundamental measures of a utility, measures like freedom and honesty can still be held in high regard as they are good approximations for the promotion of happiness and the reduction of suffering. But making the use of honesty an ethical axiom (so essentially never lying) has clear problems, as discussed earlier. The same argument can be extended to freedom and any other conceivable measure, other than the measures of happiness and suffering. This is the essence of the assumption used by many utilitarian theories.

So how can happiness and suffering be applied to the burning buildings scenario? How much 'happiness value' is a child given in comparison to an adult, or to a dog? Such questions do not make sense without introducing the units of the utility. And such units are introduced from paragraph four of the introduction to the start of the next chapter; the reader should familiarise themselves with this before continuing here.

So now it is numerically possible to compare two given scenarios. For example, which of the following is favoured?

- For a sentient being to be at a 0.4 state of happiness for 5 minutes and then a -0.1 state for a further 5 minutes.

- For a sentient being to be at a 0.2 state of happiness for 10 minutes.

Well, if the axiom that greater utility is favoured is adopted, then the second option is favoured, as it yields a total utility of 120 happiness-seconds (0.2 × 600 seconds) in comparison to only 90 happiness-seconds for the first option (0.4 × 300 seconds, less 0.1 × 300 seconds).
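The arithmetic above can be checked with a short sketch. It assumes, as the example does, that the happiness state is constant over each interval, so that utility is the sum of state multiplied by duration in seconds. The function name `total_utility` and the list-of-pairs representation are illustrative choices and are not part of the article.

```python
def total_utility(intervals):
    """Utility in happiness-seconds for piecewise-constant happiness states.

    `intervals` is a list of (happiness_state, duration_in_minutes) pairs.
    """
    return sum(state * minutes * 60 for state, minutes in intervals)


# The two options from the text.
option_one = [(0.4, 5), (-0.1, 5)]   # 0.4 for 5 minutes, then -0.1 for 5 minutes
option_two = [(0.2, 10)]             # 0.2 for 10 minutes

u1 = total_utility(option_one)
u2 = total_utility(option_two)

# Under the axiom that greater utility is favoured, pick the larger total.
favoured = "second" if u2 > u1 else "first"
```

Here `u1` comes to roughly 90 and `u2` to roughly 120 happiness-seconds, so `favoured` is the second option, in agreement with the figures quoted above.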

Returning to the burning buildings scenario, it is now possible to convert every item in each building into a quantity of utility.

- For humans, saving a person's life will result in them going on and living, on average, a life in which they experience much utility.

- A child has many more years to live than an elder and so will experience, on average, a greater quantity of utility.

- A dog has a lesser sentience (so may only experience happiness on a range of -0.1 to 0.1, for example) and a shorter life span, so will experience, on average, less utility than a human.

- Any person in an indefinite state of agony would go on to live a life full of much disutility.

- Items with monetary value may be used to generate utility indirectly.

So one solution to the burning building problem is to save the building that will lead to the situation in which overall utility is maximised. The moral theory whereby utility should be maximised is known as Utilitarianism, and such a theory still remains popular amongst moral philosophers to this day. However, Utilitarianism supports some rather unintuitive ideas; as total utility is all that is important in this theory, what is to stop someone causing suffering to someone else if overall, the person causing the suffering gets a greater amount of happiness from causing the suffering than the person receiving the suffering? There would be nothing to stop such behaviour, and in fact, Utilitarianism actively encourages such actions.
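The decision rule described at the start of the paragraph above, saving the building whose contents carry the greater overall utility, can be sketched as follows. This is only a minimal illustration: the occupants, their average happiness states and their remaining years are invented figures, and measuring utility as average state multiplied by remaining years is a simplifying assumption rather than the article's method.

```python
# Hypothetical occupants: (description, average happiness state, remaining years).
# Every figure here is invented purely for illustration.
building_a = [
    ("child", 0.2, 70),
    ("dog", 0.05, 8),
]
building_b = [
    ("elder", 0.2, 15),
    ("adult", 0.2, 40),
]


def expected_utility(occupants):
    """Total expected utility in happiness-years: average state x remaining years."""
    return sum(state * years for _, state, years in occupants)


def building_to_save(buildings):
    """Utilitarian rule: save the building whose contents carry the most utility."""
    return max(buildings, key=lambda name: expected_utility(buildings[name]))


choice = building_to_save({"A": building_a, "B": building_b})
```

With these made-up numbers, building A carries about 14.4 happiness-years against about 11.0 for building B, so `choice` is "A". Changing the assumed states or life spans can reverse the outcome, which is why the choice of units matters so much.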

It is clear that using a utility is a favourable characteristic of any moral theory as it enables problems in morality and ethics to be compared numerically; many would argue it is an indispensable aspect of any moral theory. An alternative moral theory to Utilitarianism is Negative Utilitarianism, a utilitarian theory whereby instead of increasing total utility, one should instead be concerned with reducing disutility; see Smart (1958). Such a theory seems more intuitive but comes with its own criticisms and these have been highlighted (although not discussed) in this article.
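The contrast between the two theories can be made concrete with a small sketch of the scenario in which one person gains happiness by causing another to suffer. The utilities below are hypothetical, and scoring an outcome by the summed magnitude of its negative utilities is a deliberately simplified reading of Negative Utilitarianism, not the system of axioms proposed in this article.

```python
def total_utility(outcome):
    """Classical utilitarian score: the sum of everyone's utility."""
    return sum(outcome.values())


def total_disutility(outcome):
    """Simplified negative utilitarian score: magnitude of negative utility only."""
    return sum(-u for u in outcome.values() if u < 0)


# Hypothetical utilities for the two available decisions.
options = {
    "harm": {"aggressor": 10, "victim": -6},
    "refrain": {"aggressor": 0, "victim": 0},
}

# Utilitarianism maximises total utility; Negative Utilitarianism (in this
# simplified form) minimises total disutility.
utilitarian_choice = max(options, key=lambda k: total_utility(options[k]))
negative_choice = min(options, key=lambda k: total_disutility(options[k]))
```

Under these assumed figures the utilitarian rule selects "harm" (a net utility of 4 against 0), whereas the disutility rule selects "refrain" (a disutility of 0 against 6).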

Appendix B

One possible way of defining happiness is through biochemical means. For example, happiness could be defined as the interaction of certain hormones with a chemoreceptor, with some hormones corresponding to a positive wellbeing and others to a negative wellbeing. Then a measure of happiness could be the concentration of positive hormones in relation to the concentration of the negative hormones.

Regarding the psychological state of wellbeing, this model could use the idea that one's thoughts and psychological state do not influence the happiness state directly; instead, they influence the production of particular hormones. Happy thoughts may be triggered by a high concentration of positive hormones, just as positive hormones may be produced as a result of happy thoughts. But such is simply a hypothetical example.
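To illustrate how such a measure might be made numerical, here is a minimal sketch. The text does not say how the two concentrations are to be related, so the normalised difference used below is purely an assumption, chosen because it yields a value between -1 and 1; the function name `happiness_state` is likewise hypothetical.

```python
def happiness_state(positive, negative):
    """Assumed measure: normalised difference of two hormone concentrations.

    Returns a value in [-1, 1]: 1 when only positive hormones are present,
    -1 when only negative hormones are present, 0 when they balance.
    """
    if positive < 0 or negative < 0:
        raise ValueError("concentrations must be non-negative")
    total = positive + negative
    if total == 0:
        return 0.0  # no hormones present: treated as neutral (an assumption)
    return (positive - negative) / total


# Illustrative concentrations in arbitrary units.
content = happiness_state(3, 1)
distressed = happiness_state(1, 3)
```

With these illustrative inputs, `content` is 0.5 and `distressed` is -0.5. A ratio or a plain difference would be equally consistent with the wording of the appendix.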

Notes

[1] Christopher Alexander has just entered his third year as an undergraduate student at the University of Warwick. He is pursuing a Master's degree in Mathematics and is expected to graduate in 2016. His research was presented at the BCUR 2014 and on receiving the Monash-Warwick Alliance Travel Fellowship, was presented at Monash University for the ICUR 2014.

References

Arrhenius, G., J. Ryberg and T. Tännsjö (2014), 'The Repugnant Conclusion', in Zalta, E. N. (ed.), The Stanford Encyclopedia of Philosophy, Spring 2014 edition, available at http://plato.stanford.edu/archives/spr2014/entries/repugnant-conclusion, accessed 10 August 2014

Gandjour, A. and K. W. Lauterbach (2003), 'Utilitarian Theories Reconsidered: Common Misconceptions, More Recent Developments, and Health Policy Implications', Health Care Analysis, 11 (3), 229-44

Ord, T. (2013), 'Why I'm Not a Negative Utilitarian', available at http://www.amirrorclear.net/academic/ideas/negative-utilitarianism, accessed 10 August 2014

Shulman, C., H. Jonsson and N. Tarleton (2009), 'Which Consequentialism? Machine Ethics and Moral Divergence', Proceedings of the Fifth Asia-Pacific Computing and Philosophy Conference (APCAP), Tokyo: University of Tokyo, pp. 23-25

Smart, R. N. (1958), 'Negative Utilitarianism', Mind, 67, 542-43

To cite this paper please use the following details: Alexander, C. (2014), 'Axioms of Morality and Ethics in Negative Utilitarianism', Reinvention: an International Journal of Undergraduate Research, BCUR 2014 Special Issue, http://www.warwick.ac.uk/reinventionjournal/issues/bcur2014specialissue/alexander/. Date accessed [insert date]. If you cite this article or use it in any teaching or other related activities please let us know by e-mailing us at Reinventionjournal at warwick dot ac dot uk.