SIP Lab - Code & Data

Home |

People |

Projects |

Publications |

Code & Data |

Internal |

Code |

WNMF dataset |

CityWalks dataset |

IMAC dataset |

TED1.8k dataset |

LV dataset |

Code

Use of this software is granted for academic and research purposes. No commercial use is allowed. Please cite the appropriate publication.

- J. Liao, A. Kot, T. Guha, and V. Sanchez, "Attention Selective Network for Face Synthesis and Pose-invariant Face Recognition," to appear in IEEE International Conference on Image Processing (ICIP), Abu Dhabi, United Arab Emirates, October 2020

📨 Code available upon request - O. Styles, T. Guha, V. Sanchez, and Alex Kot, "Multi-Camera Trajectory Forecasting: Pedestrian Trajectory Prediction in a Network of Cameras," in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, pp. 1016-1017, Seattle, USA, June 2020

📦 Code available here - S. Singh, V. Sanchez, and T. Guha, "Ensemble Network for Ranking Images based on Visual Appeal," in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 4507-4511, Barcelona, Spain, May 2020

📦 Code available here - O. Styles, T. Guha, and V. Sanchez, "Multiple Object Forecasting: Predicting Future Object Locations in Diverse Environments," in Winter Conference on Applications of Computer Vision, pp. 690-699, Colorado, USA, March 2020

📦 Code available here - A. Kumar, T. Guha, and P. K. Ghosh, "Dirichlet Latent Variable Model: A Dynamic Model based on Dirichlet Prior for Audio Processing," IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 27, no. 5, pp. 919-931, March 2019

📦 Code available here - O. Styles, A. Ross, and V. Sanchez, "Forecasting Pedestrian Trajectory with Machine-Annotated Training Data," in IEEE Intelligent Vehicles Symposium, pp. 716-721, Paris, France, June 2019

📦 Code available here

- V. Sanchez, and M. Hernandez-Cabronero, "Graph-based Rate Control in Pathology Imaging with Lossless Region of Interest Coding," IEEE Transactions on Medical Imaging, vol. 37, no. 10, pp. 2211-2223, October 2018

📨 Code available upon request - R. Leyva, V. Sanchez, and C.-T. Li, "Video Anomaly Detection with Compact Feature Sets for Online Performance," IEEE Transactions on Image Processing, 2017, vol. 26, no. 7, pp. 3463-3478, July 2017

📨 Code available upon request - A. Khadidos, V. Sanchez, and C.-T. Li, "Weighted Level Set Evolution Based on Local Edge Features for Medical Image Segmentation," IEEE Transactions on Image Processing, vol. 26, no. 4, pp. 1979-1991, April 2017

📦 Code available here

- J. Portillo-Portillo, R. Leyva, V. Sanchez, G. Sanchez-Perez, H. Perez-Meana, J. Olivares-Mercado, K. Toscano-Medina, and M. Nakano-Miyatake, "Cross View Gait Recognition Using Joint-Direct Linear Discriminant Analysis," Sensors, vol. 17, no. 1, p. 6, December 2016

📦 Code available here

- R. Leyva, V. Sanchez, and C.-T. Li, "A Fast Binary Pair-Based Video Descriptor for Action Recognition," in IEEE International Conference on Image Processing (ICIP), pp. 4185-4189, Phoenix, Arizona, USA, September 2016

📨 Code available upon request - R. Leyva, V. Sanchez, C. T.-Li, "Fast Binary-Based Video Descriptors for Action Recognition," in International Conference on Digital Image Computing: Techniques and Applications (DICTA), pp. 1-8, Gold Coast, Australia, December 2016

📨 Code available upon request

Data

Warwick-NTU Multi-camera Forecasting (WNMF) dataset

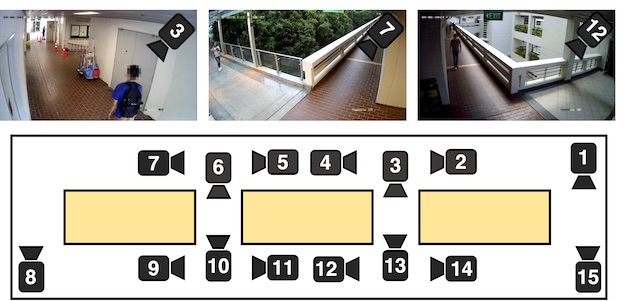

O. Styles, T. Guha, V. Sanchez, and Alex Kot, "Multi-Camera Trajectory Forecasting: Pedestrian Trajectory Prediction in a Network of Cameras," in IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, pp. 1016-1017, Seattle, USA, June 2020

The WNMF dataset contains footage of individuals traversing the Nanyang Technological University (NTU) campus. The dataset contains cross-camera tracking information, such that future trajectories of individuals can be anticipated across multiple camera views. The data was collected over a span of 20 days using 15 different CCTV cameras. This was not a controlled collection (i.e., it was collected in real-world conditions).

Sample videos and topology of the camera networks used to create the WNMF dataset

Sample videos and topology of the camera networks used to create the WNMF dataset

Raw data statistics:

We release camera entrance and departure videos, pre-computed tracking information and RE-ID features. Faces are blurred using the RetinaFace algorithm [1] to detect faces. Provided are the 4 seconds proceeding a camera departure event and 12 seconds following a camera entrance event, totally 16 seconds for each verified cross-camera match.

|

Hours of footage |

600 |

|

Number of cameras |

15 |

|

Collection period |

20 days |

|

Time period |

8:30am – 7:30pm |

|

Video resolution |

1920 by 1080 |

|

Frames per second |

5 |

|

Cross camera matches |

13.2K |

Released data statistics:

|

Hours of footage |

10.2 |

|

Cross-camera matches after verification |

2.3K |

|

Mean cross-camera RE-IDs per track |

2.08 |

Request Dataset:

Please complete the release agreement found here 📝

[1] Deng, J., Guo, J., Zhou, Y., Yu, J., Kotsia, I. and Zafeiriou, S., 2019. Retinaface: Single-stage dense face localisation in the wild. arXiv preprint arXiv:1905.00641

CityWalks dataset

O. Styles, T. Guha, and V. Sanchez, "Multiple Object Forecasting: Predicting Future Object Locations in Diverse Environments," in Winter Conference on Applications of Computer Vision, pp. 690-699, Colorado, USA, March 2020

We introduce the problem of multiple object forecasting (MOF). MOF follows the same formulation as the popular multiple object tracking (MOT) task, but rather is concerned with predicting future object bounding boxes and tracks in upcoming video frames, rather than the bounding boxes and tracks in the current frame.

Multiple object forecasting

Multiple object forecasting

We have constructed the CityWalks dataset to train and evaluate models for MOF. CityWalks contains a total of 501 20-second video clips of which 358 contain at least one valid pedestrian trajectory. We extract footage from the online video-sharing site YouTube. Each original video consists of first-person footage recorded using an Osmo Pocket camera with gimbal stabilizer held by a pedestrian walking in one of the many environments for between 50 and 100 minutes. Videos are recorded in a variety of weather conditions, as well as both indoor and outdoor scenes. Dataset statistics are shown in Table 3. The dataset is available here 📦

Example frames from the CityWalks dataset

|

Video clips |

358 |

|

Resolution |

1280 × 720 |

|

Framerate |

30hz |

|

Clip length |

20 seconds |

|

Unique pedestrian tracks |

3623 |

|

Unique cities |

21 |

|

Object Detectors |

YOLO & Mask-RCNN |

IMAC: Image-music emotion dataset

G. Verma, E. G. Dhekane and T. Guha, "Learning Affective Correspondence between Music and Image," in IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), pp. 3975-3979, Brighton, UK, May 2019

To facilitate the study of cross-modal emotion analysis, we constructed a large scale database, which we call the Image-Music Affective Correspondence (IMAC) database. It consists of more than 85,000 images and 3,812 songs (approximately 270 hours of audio). Each data sample is labelled with one of the three emotions: positive, neutral and negative. The IMAC database is constructed by combining an existing image emotion database (You et al., 2016) with a new music emotion database curated by us (Verma et al., 2019). The dataset is available here 📦

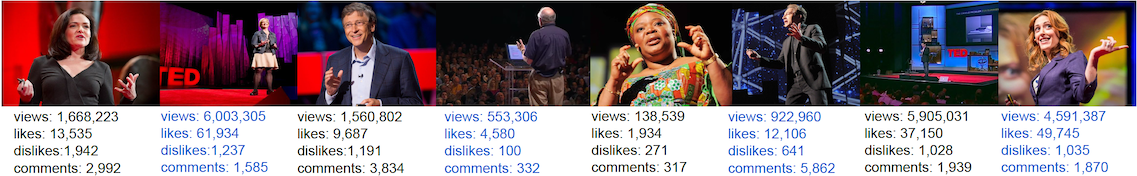

TED1.8k: TED talks popularity prediction dataset with YouTube metadata

R. Sharma, T. Guha and G. Sharma, "Multichannel Attention Network for Analyzing Visual Behavior in Public Speaking," in 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), pp. 476-484, Lake Tahoe, NV, 2018

To facilitate the study of public speaking behaviour, we construct a large video database, namely the TED1.8K database. This database contains 1864 TED talk videos and their associated metadata collected from YouTube. The YouTube metadata collected for each video are the number of likes, dislikes, views and comments. Table 1 presents a summary of the database.

|

Total videos |

1864 |

|

Average duration |

13.7 min |

|

YouTube metadata (mean, range) |

|

|

Views |

247K, (40 - 10264K) |

|

Likes |

3075, (0 - 113K) |

|

Dislikes |

174, (0 - 5750) |

|

Comments |

462, (0 - 26K) |

Sample frames from the TED1.8K database, which demonstrates the huge variability, and the challenging nature of the database

Sample frames from the TED1.8K database, which demonstrates the huge variability, and the challenging nature of the database

The TED1.8K dataset provides certain advantages for studying human behaviour in public speaking. Firstly, the video content is carefully created to have well defined audiovisual structure, with subtitles and transcripts of the talks. Therefore, the database offers opportunities for rich multimodal studies. Secondly, the TED talks are of diverse topics, and popular worldwide. Hence, the YouTube ratings are expected to come from viewers with varied demographics, age group, and social background, making the ratings rich and reliable. The dataset is available here 📦

Live Videos (LV) dataset

R. Leyva, V. Sanchez, and C.-T. Li, "The LV Dataset: a Realistic Surveillance Video Dataset for Abnormal Event Detection," in 5th International Workshop on Biometrics and Forensics (IWBF2017), pp. 1-6, Coventry, UK, April 2017

The LV dataset comprises video sequences characterized by the following aspects:

1. Realistic events without actors performing predefined scripts with a diverse subject interaction.

2. Highly unpredictable abnormal events in different scenes, some of them of very short duration.

3. Scenario correspondence, where the training and test data are captured from the same scene.

4. Challenging environmental conditions.

The LV dataset consists of 30 sequences with 14 different abnormal events. The main characteristics of this dataset are given in Table 4.

|

Duration

|

3.93 hours

|

|

Frame Rate

|

7.5 - 30 FPS

|

|

Resolution

|

minimum: QCIF (176 144)

maximum: HDTV 720 (1280 720) |

|

Format

|

MP4 video in H.264

|

|

No. of videos/scenes

|

30/30

|

|

Anomalous Frames

|

68989

|

|

Events of Interest

|

34

|

|

Abnormal events

|

Fighting, people clashing, arm robberies, thefts, car accidents, hit and runs, fires, panic, vandalism, kidnapping, homicide, cars in the wrong-way, people falling, loitering, prohibited u-turns and trespassing

|

|

Scenarios

|

Outdoors/indoors, streets, highways, traffic intersections and public areas

|

|

Crowd density

|

No subjects to very crowded scenes

|

All sequences in the LV dataset contain both abnormal and normal behaviour. The labelled frames are provided along with the dataset. The regions of interest (ROIs) are provided in a separate sequence of the same length as that of the training/testing sequence. A separate file is provided with the timestamps to determine the frames to be used for training and those for each sequence. The dataset is available here 📦