By Stefano Caria

Randomised controlled trials (RCTs) are one of the most widely used methods for policy evaluation. However, RCTs have recently been criticised for sacrificing the welfare of study participants in order to produce scientific knowledge. We propose a new treatment-assignment algorithm that balances these two objectives and show how it can be implemented in the context of the Syrian refugee crisis.

Conventional RCTs are designed to learn about the impact of one or more policies in a transparent and precise way. In the standard protocol, the designer randomly assigns fixed shares of study participants to a treatment and a control group. Individuals in the treatment group are offered the policy, while individuals in the control group are not (or are only offered a weaker version of the policy). If the designer is interested in more than one policy, they will offer each policy to a fixed proportion of people in the study.

This procedure is ideal for learning about policy effectiveness, since it enables researchers to precisely compare the outcomes of a group of people that receives the policy with those of an equivalent group that does not. However, it is not designed to maximise the welfare of the people who take part in the experiment. For example, it makes no use of the information on the effectiveness of different treatments that may become available over the course of the study.

Participant welfare is at the heart of the current debate on the ethics of RCTs. As the scale, scope, and stakes of experiments grow – with RCTs being used to tackle some of the thorniest social issues, from COVID-19 containment to climate change mitigation (Muralidharan and Niehaus, 2017; Haushofer and Metcalf, 2020) – and as more and more people worldwide take part in experiments, neglecting participant welfare has become very hard to justify.

A new approach: The Tempered Thompson Algorithm

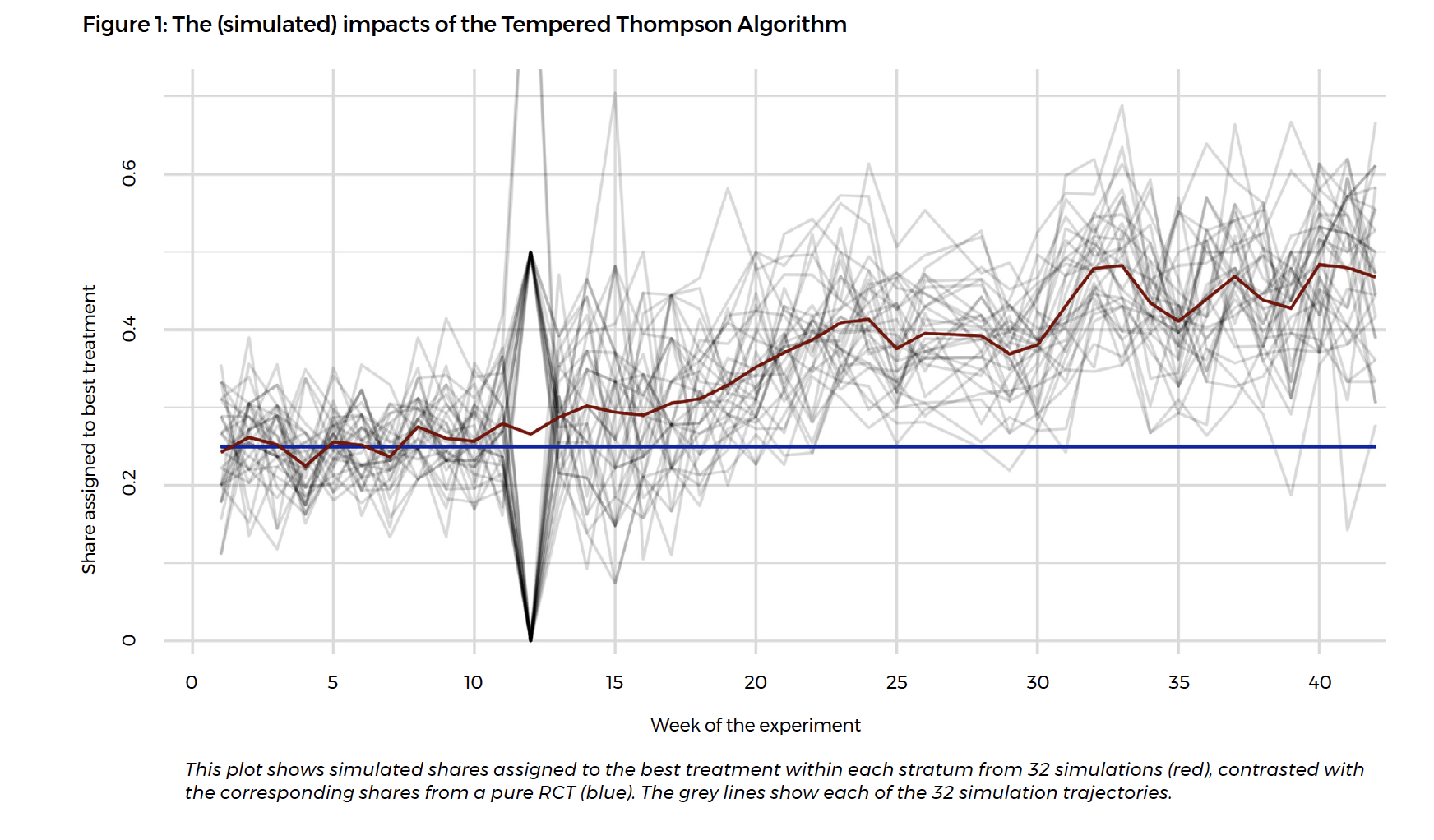

In our paper (Caria et al., 2021), we propose a treatment-assignment algorithm that allows researchers to balance the objectives of learning about treatment effectiveness and maximising participant welfare. The algorithm generalises two well-known treatment-assignment protocols. The first is the Thompson (1933) protocol, which assigns increasing shares of individuals to treatments that prove to be effective. The second is the conventional RCT protocol, in which assignment shares are fixed. Combining the two guarantees that, over time, more people are assigned to promising treatments, while no treatment is ever dropped from the experiment.
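One way to picture this combination is as a weighted average of the two protocols. The sketch below is illustrative only and is not taken from the paper's code: it assumes binary outcomes (such as employed or not), a uniform Beta prior for each treatment, and a hypothetical mixing weight `gamma` between 0 and 1. The function names `thompson_shares` and `tempered_shares` are likewise assumptions made for this example.

```python
import numpy as np


def thompson_shares(successes, failures, n_draws=10_000, seed=0):
    """Monte Carlo estimate of the posterior probability that each arm is best.

    Assumes binary outcomes and a uniform Beta(1, 1) prior per arm
    (an illustrative assumption, not the paper's model).
    """
    rng = np.random.default_rng(seed)
    successes = np.asarray(successes)
    failures = np.asarray(failures)
    # One column of posterior draws per arm.
    draws = rng.beta(1 + successes, 1 + failures, size=(n_draws, len(successes)))
    best = draws.argmax(axis=1)
    return np.bincount(best, minlength=len(successes)) / n_draws


def tempered_shares(successes, failures, gamma, **kwargs):
    """Mix Thompson shares with equal shares.

    gamma = 0 gives pure Thompson assignment; gamma = 1 gives the fixed,
    equal shares of a conventional RCT. `gamma` is a hypothetical name.
    """
    p = thompson_shares(successes, failures, **kwargs)
    k = len(p)
    return (1 - gamma) * p + gamma / k


if __name__ == "__main__":
    # Made-up counts for four arms (e.g. control plus three interventions).
    s = [30, 10, 10, 10]
    f = [70, 90, 90, 90]
    print(tempered_shares(s, f, gamma=0.2))
```

Under these assumptions every arm keeps an assignment share of at least `gamma / k`, which is the sense in which no treatment is ever dropped, while the remaining share moves towards the arms that look most effective.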

Our algorithm has two key features. First, it is adaptive. This means that, over time, it changes treatment assignment probabilities for new participants by using information on the effectiveness of different treatments on the existing participants. New participants are therefore more likely to be assigned to a treatment that will benefit them. Second, it is targeted, in the sense that it recognises that different groups of people may benefit differently from the same intervention. It uses group-specific information about treatment effectiveness to help assign participants to treatments most likely to improve their welfare.
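The two features can be illustrated with a small simulation. This is a simplified sketch under stated assumptions rather than the procedure used in the paper: it keeps a separate Beta posterior for each group and treatment (the paper's statistical model may share information across groups), outcomes are binary and observed immediately, and the names `run_experiment`, `tempered_probs`, `true_rates` and `gamma` are hypothetical.

```python
import numpy as np


def tempered_probs(s, f, gamma, rng, n_draws=2_000):
    """Assignment probabilities for one group: Thompson shares mixed with equal shares."""
    draws = rng.beta(1 + s, 1 + f, size=(n_draws, len(s)))
    p_best = np.bincount(draws.argmax(axis=1), minlength=len(s)) / n_draws
    return (1 - gamma) * p_best + gamma / len(s)


def run_experiment(true_rates, groups, gamma=0.2, seed=0):
    """Simulate sequential enrolment.

    true_rates[g][a] is the (in practice unknown) success rate of arm a in group g.
    groups lists the group index of each arriving participant.
    Returns per-group, per-arm success and failure counts.
    """
    rng = np.random.default_rng(seed)
    true_rates = np.asarray(true_rates, dtype=float)
    n_groups, n_arms = true_rates.shape
    successes = np.zeros((n_groups, n_arms))
    failures = np.zeros((n_groups, n_arms))
    for g in groups:
        # Targeted: use only the evidence collected for this participant's group.
        probs = tempered_probs(successes[g], failures[g], gamma, rng)
        # Adaptive: probabilities change as outcomes accumulate.
        arm = rng.choice(n_arms, p=probs)
        if rng.random() < true_rates[g, arm]:
            successes[g, arm] += 1
        else:
            failures[g, arm] += 1
    return successes, failures


if __name__ == "__main__":
    # Made-up example: arm 0 works best for group 0, arm 1 for group 1.
    rates = [[0.8, 0.1], [0.1, 0.8]]
    arrivals = [i % 2 for i in range(400)]
    s, f = run_experiment(rates, arrivals)
    print(s + f)  # number of participants assigned to each arm, by group
```

In this made-up example, each group ends up assigned mostly to the arm that works best for it, while the other arm continues to receive some participants.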

Testing the algorithm: Employment policies for Syrian refugees in Jordan

We implement this methodology in a field experiment designed to help Syrian refugees in Jordan find employment. Jordan has been at the forefront of the Syrian refugee crisis and, since the start of the conflict, has received close to 700,000 refugees: one tenth of its original population (UNHCR, 2020).

Jordan has also recently implemented a bold set of reforms, known as the Jordan Compact, that substantially expanded access to formal employment for refugees. Legal restrictions on refugee employment are common in both developed and developing countries. If successful, the Jordan Compact could set an example for similar policy reforms around the world.

In collaboration with the International Rescue Committee, a non-governmental organisation, we test whether Syrian refugees need additional support in order to make the most of the new employment opportunities that have opened up for them. In particular, we study the impacts of offering (i) a small, unconditional cash transfer; (ii) information provision to increase the ability to signal skills to employers; and (iii) a behavioural nudge to strengthen job-search motivation. These interventions tackle a number of job-search barriers that have been identified in the previous literature: the cost of job search, the inability to show one’s skills to employers, and the difficulty of staying motivated. We use the Tempered Thompson Algorithm to simultaneously learn about the impacts of these policies and maximise the employment rates of the refugees who take part in our study, as measured in our first follow-up interview, six weeks after treatment.

We find that the cash intervention has large and significant impacts on refugee employment and earnings, two and four months after treatment. While employment rates remain stubbornly low in the control group, the cash grant raises employment by 70% and earnings by 65%. These are sizable impacts compared to those documented in recent literature on active labour market policies and are larger than the impacts of the other two interventions, which are not significantly different from zero. However, impacts six weeks after treatment are close to zero for all interventions.

We also quantify the welfare gains from using our algorithm. First, we find that targeting increases employment rates by about 20%. However, since six-week employment effects are close to zero, there are almost no gains from the adaptive part of the algorithm. Second, we show that, if we had set two-month employment as the objective, a measure that responded strongly to the cash intervention, the algorithm would have doubled the employment gains of a standard RCT.

Our results show that the Tempered Thompson Algorithm can successfully measure policy effectiveness whilst also generating substantial welfare gains. However, choosing the right objective is key. The results also show that lifting legal barriers may not be sufficient to boost refugee employment in Jordan unless it comes with additional financial support to help refugees search for work.

About the author

Stefano Caria is Associate Professor of Economics at the University of Warwick and a CAGE Associate.

Publication details

This article is based on Caria, S., Gordon, G., Kasy, M., Quinn, S., Shami, S. and Teytelboym, A. (2021). An Adaptive Targeted Field Experiment: Job Search Assistance for Refugees in Jordan. CAGE Working Papers (No. 547).

Further reading

Duflo, E. and Banerjee, A. (2017). Handbook of Field Experiments. Elsevier.

Muralidharan, K. and Niehaus, P. (2017). Experimentation at Scale. Journal of Economic Perspectives 31(4), 103–24.

Haushofer, J. and Metcalf, C. J. E. (2020). Which Interventions Work Best in a Pandemic? Science 368(6495), 1063–1065.

Thompson, W. R. (1933). On the Likelihood that One Unknown Probability Exceeds Another in View of the Evidence of Two Samples. Biometrika 25(3/4), 285–294.

UNHCR (2020, March). Jordan: Statistics for Registered Syrians in Jordan. https://data2.unhcr.org/en/documents/details/75098