Motion Magnification

Microscopes, by magnifying the image of small objects, let us view a world of tiny things that the human eye cannot see. They led to some of the greatest scientific breakthroughs in history, for example cellular biology, forensic science and the discovery of bacteria and viruses. Is it possible to do the same thing for small spatial changes over time in a video?

Yes!

The clever folks at MIT's computer science lab (CSAIL) had the thought that, since computers can measure very small changes in amplitude, they could also amplify these changes. One such small change is the colour of a person's face: each time your heart pumps and fresh blood circulates, your face turns slightly redder. This video shows the face of one of the MIT researchers, with no processing applied.

This change in redness is imperceptible to the human eye, but is detectable when analysed on a computer. So an algorithm was devised that takes a video (shot by a CCD camera) and, for each pixel, measures the small variations in colour over time. By amplifying these small changes by, say, a factor of 100 and then adding them back to the original video, one can see the reddening of the face! You can see the result below.
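To make the idea concrete, here is a minimal NumPy sketch of per-pixel colour amplification. It is an illustration under my own assumptions rather than the MIT code: the function name `magnify_colour` and its parameters are hypothetical, the temporal filter is a simple ideal bandpass built with an FFT, and the spatial smoothing used in practice to suppress noise is left out.

```python
import numpy as np

def magnify_colour(video, fps, f_lo, f_hi, alpha):
    """Amplify small per-pixel colour variations in a temporal frequency band.

    video : float array of shape (frames, height, width, channels)
    fps   : frame rate in Hz
    f_lo, f_hi : band of temporal frequencies (Hz) to amplify
    alpha : amplification factor applied to the band-passed variation
    """
    n_frames = video.shape[0]
    freqs = np.fft.rfftfreq(n_frames, d=1.0 / fps)
    # Fourier transform of every pixel's time series (axis 0 is time).
    spectrum = np.fft.rfft(video, axis=0)
    # Ideal bandpass: discard every frequency outside [f_lo, f_hi].
    keep = (freqs >= f_lo) & (freqs <= f_hi)
    spectrum[~keep] = 0.0
    variation = np.fft.irfft(spectrum, n=n_frames, axis=0)
    # Add the amplified variation back onto the original frames.
    return video + alpha * variation

# Synthetic example: a constant grey patch with a faint 1 Hz "pulse".
t = np.arange(64) / 32.0
faint_pulse = 0.001 * np.sin(2 * np.pi * 1.0 * t)
demo_video = 0.5 + faint_pulse[:, None, None, None] * np.ones((1, 2, 2, 3))
demo_out = magnify_colour(demo_video, fps=32.0, f_lo=0.5, f_hi=1.5, alpha=100.0)
```

Choosing the band around typical heart rates (roughly 0.5 to 3 Hz) is what picks out the pulse rather than other slow changes in lighting.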

Remarkable! This algorithm can be used to measure a person's pulse remotely, which could be incredibly useful in medical contexts, for example monitoring neonatal infants.

Colour change is not the only detectable change in a pixel's amplitude, however. Motion will also affect a pixel's amplitude over time; moreover, many pixels will change coherently as an object moves in and out of view. So can we use a similar approach to colour magnification, detecting these changes and amplifying them?

One approach is to explicitly calculate the motion of objects between frames and amplify it. These are known as "Lagrangian" techniques, in reference to fluid dynamics, where the trajectory of particles is tracked over time. Processing like this requires a lot of clever but complicated procedures such as image segmentation and in-painting, which are not only costly but also need careful testing to avoid artefacts. For more information see this paper and here.

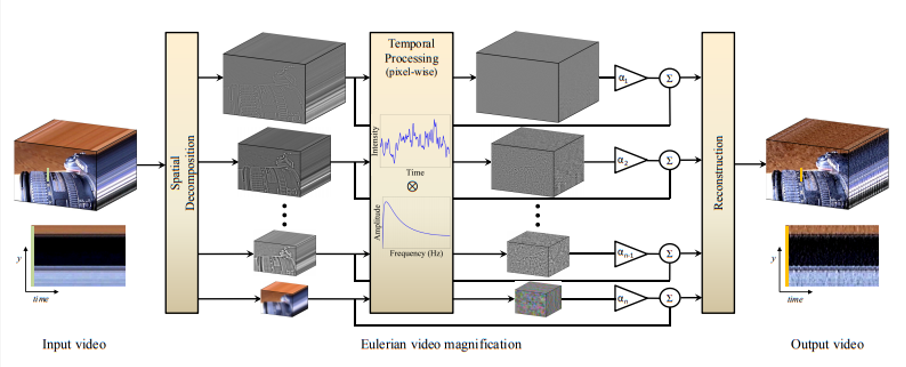

There is another approach: instead of explicitly estimating the velocity field, one exaggerates the motions at fixed positions. These "Eulerian" techniques rely on a helpful transformation of the data, so the general process is something like 1) decompose the frames into an alternative representation, 2) manipulate this representation to exaggerate motion, 3) reconstruct/transform back. The technique I use is of this form, using a dual-tree complex wavelet transform (DTℂWT). Though there are a few variations of Eulerian techniques, they all separate the data into different spatial scales (multiscale) before applying some temporal processing.
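The three steps can be sketched with a toy multiscale decomposition. This is only a stand-in I have written for illustration: it uses repeated binomial smoothing with periodic edges to build a stack of band-pass images, not a wavelet transform or any of the pyramids used in the published methods, and the names `blur`, `decompose` and `reconstruct` are hypothetical.

```python
import numpy as np

def blur(image, passes):
    """Smooth with a 3-point binomial kernel along both axes (periodic edges)."""
    out = image.astype(float)
    for _ in range(passes):
        for axis in (0, 1):
            out = (0.25 * np.roll(out, 1, axis) + 0.5 * out
                   + 0.25 * np.roll(out, -1, axis))
    return out

def decompose(image, n_levels):
    """Step 1: split an image into detail bands plus a smooth residual."""
    bands = []
    current = image.astype(float)
    for level in range(n_levels):
        # Coarser levels are smoothed more heavily (2**level passes).
        smooth = blur(current, passes=2 ** level)
        bands.append(current - smooth)   # detail removed by this smoothing
        current = smooth
    return bands, current

def reconstruct(bands, residual, gains=None):
    """Steps 2 and 3: optionally rescale each band, then sum everything back."""
    if gains is None:
        gains = [1.0] * len(bands)
    out = residual.copy()
    for gain, band in zip(gains, bands):
        out = out + gain * band
    return out

rng = np.random.default_rng(0)
frame = rng.random((16, 16))
bands, residual = decompose(frame, n_levels=3)
restored = reconstruct(bands, residual)                        # gains of 1: original frame
boosted = reconstruct(bands, residual, gains=[1.0, 2.0, 2.0])  # coarser detail exaggerated
```

With unit gains the bands sum back to the input exactly, which is the same "perfect reconstruction" property that the real transforms are chosen for.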

Using an Eulerian approach, one can do 'linear' processing or 'phase-based' processing. In the linear case we spatially decompose the images, apply a temporal bandpass filter (which can be shown to boost the linear term of a Taylor series expansion of the displacement function), and reconstruct. This is depicted in the schematic above, taken from Michael Rubinstein's thesis, which does an excellent job of explaining motion magnification. Note that 'linear' motion magnification also amplifies the noise, and only works for small magnification factors.
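The Taylor-series argument can be sketched in one dimension. The symbols here are my own choice for illustration: $f$ is the image profile, $\delta(t)$ a small displacement, $B$ the output of the temporal bandpass filter and $\alpha$ the amplification factor. I also assume the filter passes all of $\delta(t)$ and removes the static part $f(x)$.

$$I(x,t) = f\big(x + \delta(t)\big) \approx f(x) + \delta(t)\,\frac{\partial f}{\partial x}$$

$$B(x,t) = \delta(t)\,\frac{\partial f}{\partial x}$$

$$\tilde{I}(x,t) = I(x,t) + \alpha\,B(x,t) \approx f(x) + (1+\alpha)\,\delta(t)\,\frac{\partial f}{\partial x} \approx f\big(x + (1+\alpha)\,\delta(t)\big)$$

So the displacement appears multiplied by $(1+\alpha)$. The last step is only valid while $(1+\alpha)\,\delta(t)$ stays small compared with the spatial scale of $f$, which is why the linear method breaks down for large factors, and any noise in $I$ that passes the filter is multiplied by $\alpha$ as well.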

To avoid these limitations, one can use phase-based processing, which uses a "pyramid scheme" to decompose each frame spatially into complex-valued subbands. Then, instead of amplifying the filtered intensities, one temporally filters and amplifies the local phases and reconstructs. To understand the link to displacement, make an analogy with Fourier: just as the phase variations of Fourier basis sinusoids are related to translation via the Fourier shift theorem, the phase variations within the complex pyramid correspond to local motions in those particular spatial subbands of an image. Notice in particular that white noise will be translated, not amplified. You can read more details in this paper, and lots of information on Eulerian methods may be found here and links therein. A Python implementation of this technique may be found here. One well-known pyramid scheme is the Riesz pyramid, which is quite an efficient representation of the data and fast to implement.
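The Fourier analogy can be tried directly in one dimension. The sketch below is my own toy example (the name `magnify_shift` is hypothetical) and only handles a single global translation on a periodic grid; real phase-based magnification works on local phases in a complex pyramid so that different parts of the image can move differently.

```python
import numpy as np

def magnify_shift(reference, shifted, alpha):
    """Exaggerate a small global translation between two periodic 1D signals.

    By the Fourier shift theorem a translation only changes the phase of each
    Fourier component, so adding alpha times the measured phase difference
    turns a displacement d into (1 + alpha) * d.
    """
    ref_hat = np.fft.fft(reference)
    shf_hat = np.fft.fft(shifted)
    # Phase change of every sinusoid between the two signals.
    dphi = np.angle(shf_hat * np.conj(ref_hat))
    # Push each phase further in the same direction; amplitudes are untouched.
    magnified_hat = shf_hat * np.exp(1j * alpha * dphi)
    return np.fft.ifft(magnified_hat).real

x = np.arange(256)
bump = np.exp(-0.5 * ((x - 100) / 5.0) ** 2)   # smooth bump centred on sample 100
moved = np.roll(bump, 1)                        # the bump moves by one sample
exaggerated = magnify_shift(bump, moved, alpha=4.0)
```

Because only phases are modified, the amplitude of every component (including any noise) is left unchanged, which is the sense in which noise is translated rather than amplified. The phase difference must stay below half a cycle for each component, so this trick, like the pyramid version, is limited to small motions.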

Below are some example videos that demonstrate this process in action (more videos found here). Notice how each video has a frequency range (often shown at the bottom), which is set by the person doing the processing. One can use this to draw out certain phenomena, such as the human pulse, camera shutter speed or even the different modes of a drum (last video)! Also, since we can apply phase shifts in both directions, one can use this technique to attenuate small motions, as in the shuttle video below!

The technique I use is a version of phase-based motion magnification, using as its transform the Dual-Tree Complex Wavelet Transform, DTℂWT. This transform uses a mother wavelet to decompose the image spatially into different high-pass scales plus a low-pass residual (all complex), going through 6 different rotations of the wavelet to span the 2D space. DTℂWT motion magnification is designed to amplify fairly broadband motions, rather than a specified narrow(ish) frequency range like above, allowing us to address multi-modal and non-stationary oscillations (the area of my research!). Perfect reconstruction, good shift invariance and computational efficiency are all important aspects of DTℂWT. Detail on this technique and its application to solar coronal physics is found in this paper by Sergey Anfinogentov, who did a post-doc here at Warwick. He also owns the GitHub repository sharing the code behind DTℂWT motion magnification, which is implemented in Python and IDL.

You can see an outline of how I use this technique to search for 'tiny' decay-less oscillations in the Sun's atmosphere over on the My Research page.

These motion magnification techniques work well and are already being used in diverse fields, such as diagnostics of the brain (see this paper, which uses motion magnification to better see the Chiari I malformation), fusion plasma physics at MAST (paper), structural engineering (paper, paper) and fluid dynamics (paper). Some of the relevant videos are below. If you hear of another use for these processing techniques, please let me know!

An outline of the maths behind the visual microphone can be found here.

A magnified video of a pregnant lady can also be found here.

Helpful links

- To apply the motion magnification algorithm I use, please refer to github.com/Sergey-Anfinogentov/motion_magnification. This is based on a DTℂWT (see this paper) and is implemented in Python or IDL.

- A MATLAB implementation of phase-based video magnification is at github.com/rgov/vidmag. Other codes spearheaded by CSAIL may be found at people.csail.mit.edu/mrub/evm/. These are implemented in MATLAB (with executables for Windows, Linux and Mac). More information found here. A Python implementation is being worked on at github.com/brycedrennan/eulerian-magnification.

- Riesz-pyramid based processing found here.

- For a more technical grounding in these techniques, see Michael Rubinstein's excellent thesis from 2014.

- Here are some TED talks about these techniques and some applications: the motion microscope, the visual microphone.

Here is an unprocessed video of a small child sleeping. Can you make out its breathing?

Below is the video processed using linear Eulerian motion magnification. Its breathing is far more apparent!