WMG Insights

Video camera compression for machine learning perception in assisted and automated driving

Harry Chan, Project Engineer - Automotive Camera Data Smart; Professor Valentina Donzella, Head of IV Sensors

One of the biggest challenges associated with sensing in assisted and automated driving is the sheer amount of data produced by the environmental perception sensor suite. This is because higher levels of autonomy require numerous sensors based on different sensor technologies.

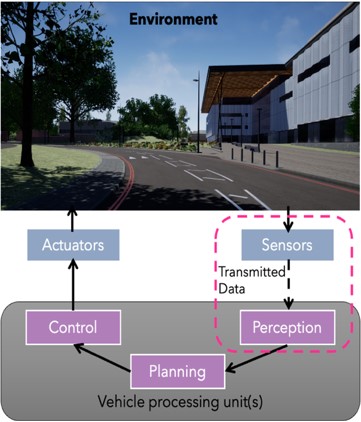

Fig. 1. Sensor data pipeline in assisted and automated driving. We are studying how compression can affect the transmitted data [3].

Sensor data (as shown in Fig. 1) must be transmitted to the processing units with very low latency and without hindering the performance of perception algorithms, e.g., object detection, classification, segmentation and prediction. However, the sensor suite of a vehicle capable of Level 4 automation (as defined in SAE J3016) can produce up to 40 Gb/s of data (including 6-12 cameras producing an estimated 3 Gb/s each), which traditional vehicle communication networks cannot support [1-3]. The key difference between Level 3 and Level 4 automation is that a Level 4 system can itself respond if things go wrong or there is a system failure, without relying on a human driver to take over. Robust techniques are therefore needed to compress and reduce the data that each sensor transmits to the processing units. Higher compression ratios can be achieved with lossy compression, but the reconstructed camera frames might then differ from the original frames and present artefacts.
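To make the bandwidth problem concrete, the short Python sketch below totals the camera data rate quoted above (6-12 cameras at an estimated 3 Gb/s each) and computes the minimum compression ratio needed to fit that traffic onto one in-vehicle link. The 1 Gb/s link capacity is an illustrative assumption (comparable to a single 1000BASE-T1 automotive Ethernet link), not a figure from the studies cited here, and the function names are hypothetical.

```python
# Back-of-the-envelope link budget for raw (uncompressed) camera data.
# Assumptions (illustrative only, not from the cited studies):
#   - each camera produces ~3 Gb/s uncompressed (the estimate quoted in the text)
#   - a hypothetical single in-vehicle link carries 1 Gb/s for all cameras

CAMERA_RATE_GBPS = 3.0    # estimated raw data rate per camera
LINK_CAPACITY_GBPS = 1.0  # hypothetical link budget


def total_camera_rate(num_cameras: int, rate_per_camera: float = CAMERA_RATE_GBPS) -> float:
    """Aggregate uncompressed camera data rate in Gb/s."""
    return num_cameras * rate_per_camera


def required_compression_ratio(total_rate: float, capacity: float = LINK_CAPACITY_GBPS) -> float:
    """Minimum (original size / compressed size) that fits total_rate into capacity."""
    return total_rate / capacity


if __name__ == "__main__":
    for n in (6, 12):
        rate = total_camera_rate(n)
        ratio = required_compression_ratio(rate)
        print(f"{n} cameras: {rate:.0f} Gb/s raw, compression ratio of at least {ratio:.0f} needed")
```

With 12 cameras the raw camera traffic alone is 36 Gb/s, so even under this assumed budget a ratio of at least 36 is needed before any other sensor data is added.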

The most commonly used compression standards and schemes have been optimised for human vision. In assisted and automated driving functions, however, the consumer of the data will be a perception algorithm based on well-established deep neural networks. Fig. 2 shows some examples of compression artefacts.

Recent research at Warwick Manufacturing Group (WMG) within Intelligent Vehicles Sensors demonstrates that lossy compression of video camera data can be used in combination with deep neural network-based object detection (in this case, vehicle detection). In lossy compression, some of the data is removed and permanently discarded, reducing the overall amount of data and the file size.
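The idea of lossy compression can be illustrated with a toy example. The Python sketch below is not how AVC/HEVC codecs work internally (they combine prediction, a transform and quantisation); it only applies coarse quantisation to a synthetic grey-scale frame, which discards fine intensity detail, and then uses `zlib` as a lossless back end to show that the quantised data compresses further while the reconstruction no longer matches the original exactly. The synthetic frame and all function names are hypothetical, and PSNR is used only as a simple pixel-level error measure.

```python
import math
import random
import zlib


def synthetic_frame(width: int, height: int, seed: int = 0) -> bytes:
    """8-bit grey-scale test frame: a horizontal gradient plus mild random noise."""
    rng = random.Random(seed)
    values = []
    for _ in range(height):
        for x in range(width):
            level = 255 * x / (width - 1) + rng.gauss(0, 4)
            values.append(max(0, min(255, round(level))))
    return bytes(values)


def quantise(pixels: bytes, step: int) -> bytes:
    """Lossy step: keep only which step-sized bin each value falls in.

    Each value is replaced by the centre of its bin, so fine intensity
    detail is discarded and cannot be recovered afterwards.
    """
    return bytes(min(255, (p // step) * step + step // 2) for p in pixels)


def psnr(original: bytes, reconstructed: bytes) -> float:
    """Peak signal-to-noise ratio in dB for 8-bit data (inf if identical)."""
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
    return math.inf if mse == 0 else 10 * math.log10(255 ** 2 / mse)


if __name__ == "__main__":
    frame = synthetic_frame(256, 64)
    print(f"original: {len(frame)} bytes, zlib only: {len(zlib.compress(frame, 9))} bytes")
    for step in (4, 16, 64):
        # zlib is lossless, so the decoded frame equals the quantised frame
        reconstructed = quantise(frame, step)
        size = len(zlib.compress(reconstructed, 9))
        print(f"step {step:2d}: {size} bytes (ratio {len(frame) / size:.1f}), "
              f"PSNR {psnr(frame, reconstructed):.1f} dB")
```

Larger quantisation steps shrink the compressed output but lower the PSNR. PSNR measures pixel fidelity, not detection performance, which is why the effect of such losses on a neural network has to be evaluated separately.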

In these studies, compressing the sensor data degraded the network's detection performance only at extremely high compression ratios (higher than ~1:1200), and the network's performance can improve remarkably when it is re-trained with compressed data [4-5]. Another approach is to identify the less important areas of each frame (e.g., sky, buildings) and compress them with higher compression ratios, while using lower compression ratios for regions containing cars, roads, and pedestrians [6].
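The region-dependent idea can be sketched as a per-block quantisation map: blocks labelled as less important (e.g., sky or buildings) get a higher quantisation parameter (QP, meaning stronger compression), while blocks containing cars, roads or pedestrians get a lower one. The Python sketch below assumes a per-block semantic label map is already available and uses the H.264 QP range of 0-51; the label names, QP offsets and function name are hypothetical choices for illustration and do not reproduce the two-stage method of [6].

```python
# Hypothetical per-block QP assignment for region-dependent compression.
# In H.264, QP ranges from 0 (finest) to 51 (coarsest); a higher QP means
# coarser quantisation, fewer bits and more visible artefacts.

QP_MIN, QP_MAX = 0, 51

# Illustrative offsets relative to a base QP (assumed values, not tuned).
QP_OFFSET = {
    "sky": +12,
    "building": +8,
    "vegetation": +6,
    "road": -4,
    "car": -8,
    "pedestrian": -10,
}


def qp_map(labels: list[list[str]], base_qp: int = 30) -> list[list[int]]:
    """Return a clamped QP for each block; unknown labels keep the base QP."""
    return [
        [max(QP_MIN, min(QP_MAX, base_qp + QP_OFFSET.get(label, 0))) for label in row]
        for row in labels
    ]


if __name__ == "__main__":
    # A toy 3x4 grid of block labels (e.g., one label per 16x16-pixel macroblock).
    labels = [
        ["sky", "sky", "sky", "building"],
        ["building", "car", "car", "pedestrian"],
        ["road", "road", "road", "road"],
    ]
    for row in qp_map(labels):
        print(row)
```

Clamping keeps every value inside the valid QP range even when a large offset is applied to an already high or low base QP.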

Fig. 2. Examples of compression artefacts from [3], zooming in on some details (c-f). The pink rectangles are areas taken from the original frame, and the purple rectangles are extracted from the compressed frame.

Our work at WMG demonstrates the feasibility of applying compression at the edge, on or near the perception sensor chips. These findings can inform strategic decisions about the best sensor fusion architecture for assisted and automated driving functions.

Ultimately, this will allow higher levels of autonomy to be achieved with less computing power and at lower cost.

If you are interested in learning more about WMG’s research into intelligent vehicle sensors and how we work with businesses, please get in touch at wmgbusiness@warwick.ac.uk.

References

[1] SAE J3016 202104, “Taxonomy and Definitions for Terms Related to Driving Automation Systems for On-Road Motor Vehicles,” Society of Automotive Engineers, Warrendale (PA), USA, Standard, 2021.

[2] Heinrich S (Lucid Motors). Flash memory in the emerging age of autonomy. Flash Memory Summit. 2017 Aug.

[3] Chan PH, Souvalioti G, Huggett A, Kirsch G, Donzella V. The data conundrum: compression of automotive imaging data and deep neural network-based perception. In London Imaging Meeting 2021 Sep 20 (Vol. 2021, No. 1, pp. 78-82). Society for Imaging Science and Technology.

[4] Chan PH, Huggett A, Souvalioti G, Jennings P, Donzella V. Influence of AVC and HEVC compression on detection of vehicles through Faster R-CNN.

[5] Donzella V, Chan PH, Huggett A. Optimising Faster R-CNN training to enable video camera compression for assisted and automated driving systems. 2022 RAAI.

[6] Wang Y, Chan PH, Donzella V. A Two-stage H.264 based Video Compression Method for Automotive Cameras. In: 2022 IEEE 5th International Conference on Industrial Cyber-Physical Systems (ICPS) 2022 May 24 (pp. 1-6). IEEE.