Generative Artificial Intelligence Policy - for ADC Assessments

Purpose of ADC's GenAI policy

The purpose of this policy is to establish ground rules for the use of generative AI tools in completing assessments and critical incident questionnaires on credit-bearing and/or Advance HE accredited courses provided by the Academic Development Centre. The intention behind the policy is to encourage selective use of these tools in ways that positively impact reflective and scholarly teaching practices and learning on our courses. It is also aimed at discouraging usage that may reduce or diminish learning opportunities, or that conflicts with principles of academic integrity or university policy.

Context

This policy applies to programmes offered by the Academic Development Centre, including credit-bearing courses, non-credit-bearing courses, and courses accredited by Advance HE.

The purpose of this policy is to establish ground rules for the use of generative AI tools in completing assessed work in ADC on credit-bearing and/or Advance HE accredited courses.

Warwick's Institutional Approach to AI use

For a clear explanation of what Generative Artificial Intelligence Tools (GAITs) are, and of Warwick's approach to the use of AI, particularly in relation to academic integrity, see: Institutional Approach to the use of Artificial Intelligence and Academic Integrity.

The policy - specifically for ADC Assessments

1. Standard: work that you submit for any assessment must not be the substantively unaltered output of a generative artificial intelligence tool.

2. Declaration: you will be required to assent to a declaration whenever you submit a piece of work for summative assessment on an ADC programme, to the effect that your use of generative AI tools is in keeping with the policy.

3. Disclosure: in line with the University’s guidance on GAITs, you will also be asked to disclose how, if at all, you have used these tools in preparing your submission. Where a GAIT has been used, participants should, after their Bibliography or Works Cited section, provide an overview of the following:

- which GAITs have been used;

- why GAITs have been used;

- where a GAIT has been used;

- where a GAIT’s output has been modified before use.

The usage disclosure will be subject to a length limit of approximately 75 words per assessment item.

4. Consultation: if you have questions or are in any doubt about whether a particular use of Generative AI would constitute a breach of this policy or the university’s regulations on AI or academic misconduct, you are encouraged to discuss it with your programme leader, whose contact details are available in your course handbook.

5. Alignment and Updates: this policy is in alignment with the University’s position on generative AI tools as set out in the Institutional Approach to the use of Artificial Intelligence and Academic Integrity document. This policy was put into effect in February 2024, and last updated in April 2024.

University of Warwick’s stance on AI and academic integrity

The institutional approach taken by Warwick is that LLMs can be used to assist students in demonstrating their own criticality and drawing comparisons, as well as providing information that helps students engage with the literature and the sources. They should not therefore replace your own criticality or theorisation, nor supersede engagement with core and recommended reading lists. They should be used in an assistive capacity, not a compositional one.

The University’s policy then offers examples of how LLMs might be of assistance:

- Lateral thinking;

- Alternative thought streams;

- Supplementary and complementary thinking;

- Data, charts, and images;

- Getting starter explanations; a note of caution that you must fact check this information;

- Interrogating AI on the questions to create your own novel insights;

- For formative learning;

- For fun;

- A revision tool to generate practice questions;

- Structuring plans and line of reasoning;

- Refining your work;

- Supporting language skills.

The same policy makes it clear that LLMs should not be used to replace the educative process or supplant the autonomy of students. They should not be used for:

- Replacing learning. Value your stream of consciousness and being sentient;

- Gaining an unfair advantage. This is academic misconduct;

- Creating content for your work which you present as your own work. This is plagiarism;

- Synthesising information. You will not be able to demonstrate your work and thinking as opposed to that artificially generated;

- Rewriting your work or translation. It is important that you develop your own distinctive academic voice. Markers would much rather read imperfect English that is your voice than perfect English written by another human or AI. See also the Proofreading policy.

Criticality, detail and reflectivity in ADC assessments

ADC assessments involve critically reflecting on your teaching methods. On some of our courses, you'll need to align your practice with the Professional Standards Framework (PSF). You must explain how your decision-making incorporates key pedagogical concepts and theories. Demonstrating care for students and aligning with personal, institutional, and broader values are essential. Your teaching approaches and decisions should be informed by subject-specific knowledge and educational research. General or abstract teaching discussions are insufficient; you must detail your experiences and justify your choices.

As you can see, much of the content that is crucially important to success in our assessments is rooted in the teaching practice and detailed reflections of our course participants. This is why LLMs should only be used in a limited, assistive capacity on ADC courses. Anything that you submit as part of your formative or summative assessment should (1) draw on authentic, detailed examples of your own teaching practice; (2) represent your own reasoning about the aspect of your teaching practice in question; (3) reflect your own rigorous and meaningful engagement with relevant pedagogical literature, educational data and institutional or sector-wide policies and expectations.

Record keeping advice for interactions with AI tools

The declaration will also remind course participants that they: “are advised to keep good records of their interactions with any AI regarding all their submissions in case they are later required as part of any further assessment, investigation or similar.”

Disclosure about AI use

Whenever you submit work for assessment by ADC, you are required to provide an account of your use of GAITs, including which tools you have used, how and why you have used them, and how you have changed/adapted/developed the outputs of GAITs before submission.

Examples:

“Used ChatGPT 3.5 for ideas generation: list of areas of practice to reflect on [include list of practice generated]. Used Google Gemini to locate DOIs for articles and convert citation list to APA format.”

“Used Claude AI to support reflective practice, identify areas for exploration [include details of material generated by Claude]. Used Copilot to identify repetition in essay - I edited essay to remove duplication, used Copilot to check essay again for flow.”

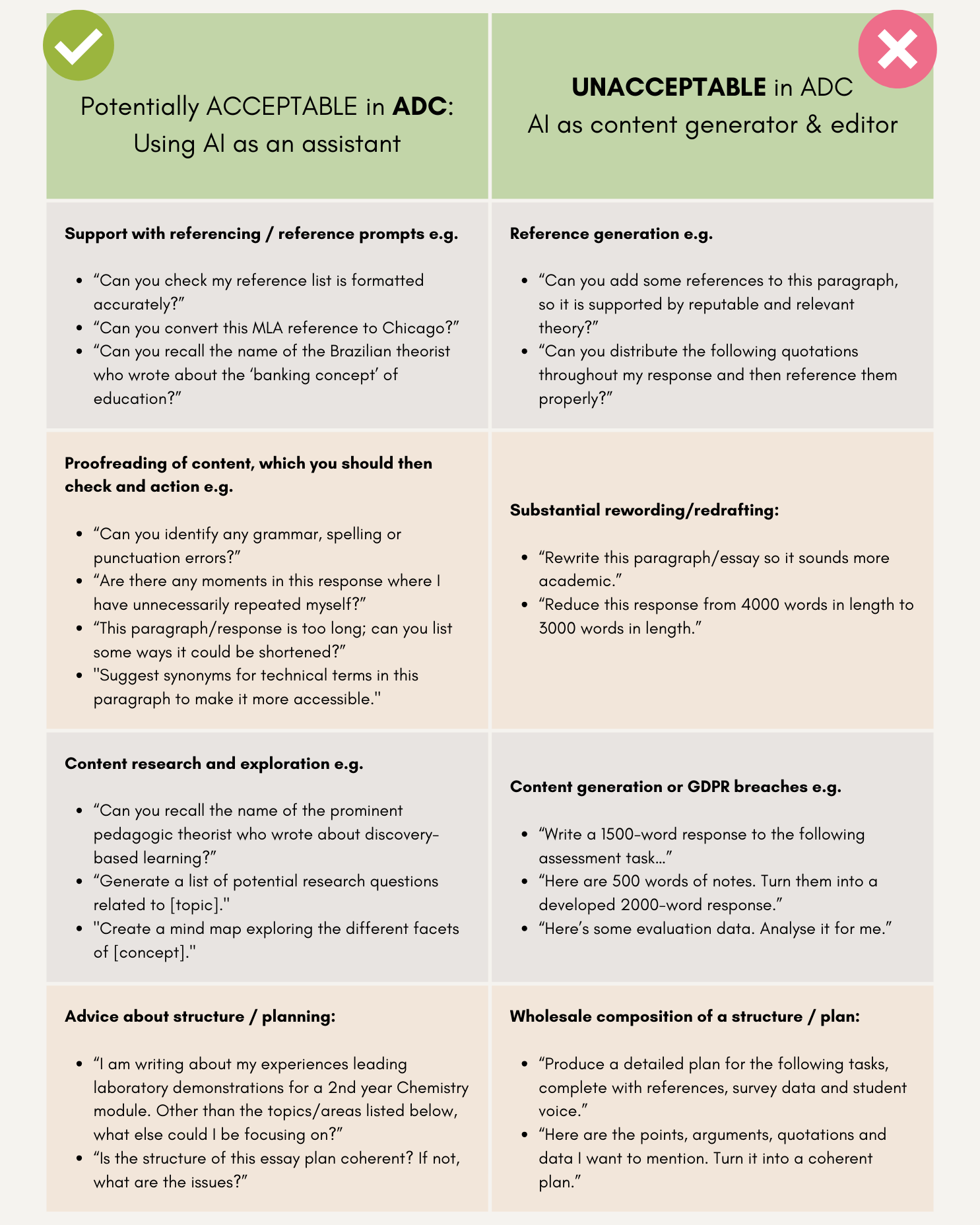

Guidance on using Gen AI - some examples of permitted use

Interactions with GAITs are triggered by commands, determined by the user. In the following table, ADC has attempted to offer some illustrative examples of the sorts of commands that would be potentially reasonable vs. those that would be totally unreasonable. These examples only apply to ADC programmes, not the wider university.