B-Sci Insights

3 Powerful Applications & Insights

Most marketers claim to have at least a passing awareness of behavioural science. However, their familiarity often begins (and ends) with the concept of "nudging" — or perhaps a handful of heuristics (such as Loss Aversion or Present Bias) that they've employed in a marketing campaign. This is unfortunate, as it dramatically undersells the scope and depth of the field — and its potential to transform marketing. It also leads some marketers to mistakenly think that they already "know" behavioural science, when they are only scratching the surface of its potential.

With this missed opportunity in mind, we'd like to share three important applications of the field, drawn from our collective experience at the intersection of marketing, consumer insights and applied behavioural science.

Re-Thinking Customer Segmentation

Historically, consumer marketing has been deeply rooted in demographics (such as age, gender, race, family composition, and income), often because this was the most readily available information (and the most feasible way to target advertising efforts). Of course, the field has progressed and become more sophisticated over the years, influenced by both psychographic profiling and the massive influx of behavioural data now available (on viewing habits, clickstreams, purchase patterns, etc.). However, it's important to remember that these approaches also have their limitations:

- Psychographics are typically driven by claimed attitudes, opinions and preferences — and unfortunately, people's intentions don't always translate consistently into their actions.

- Data typically tells us what people are doing, but not necessarily the "why" behind these actions, nor what they might do in new/different scenarios.

Behavioural science complements these approaches by encouraging us to view and segment customers through the barriers that are impeding their actions (signing up for a newsletter, joining a loyalty program, planning for their retirement, etc.). It leads us to ask these questions:

- Do potential customers lack awareness and/or intent to purchase?

Marketers often make this assumption — and therefore focus almost entirely on claims or offers to create interest. However, this is often not enough to drive action, particularly when customers are inundated with marketing messages — and thus likely to default to familiar habits and purchase patterns.

- Do they have intent, but are held back by lack of resources, opportunity — or perhaps a particular concern?

This information is vital in prioritizing and targeting marketing efforts, as there's no need to waste valuable time on those with "hard" structural barriers to action. And among those who are actual prospects, we've found that the answer often lies in addressing a very specific barrier, perhaps by answering a lingering question or providing an important reassurance.

- Or do they simply fall into familiar habits?

This is the acknowledged "sweet spot" for Nudges, or interventions to help people overcome inertia and adopt new behaviours. Fortunately, there is often a sizable percentage of customers who fall into this category and need to be helped or guided, rather than convinced.

In our experience, we've found this way of segmenting and approaching customers to be quite valuable and actionable. It's helped our clients to prioritise and focus energy towards customer segments with "softer" barriers to action and to customise messaging (to overcome their specific hurdles). For example, we recently worked with a leading Irish bank, which had been segmenting its customers primarily by demographics and net worth. Yet when we approached the same customers with a behavioural lens, we uncovered distinct cohorts with similar patterns (in terms of financial products owned, reliance on banking staff, comfort with self-directed transactions in app or online, risk profile, etc.). Interestingly, these behavioural groupings cut across key demographics and account size. They also proved far more actionable, as we then worked with the bank to develop targeted product propositions and strategies to help each segment overcome its specific barriers to action — and to ultimately improve savings and investing behaviours.

For further information and perspective on behaviourally-informed segmentation, we recommend:

- This article on behavioral segmentation by Dilip Soman and Kayln Kwan of Behavioral Economics in Action at Rotman (BEAR)

- The COM-B model, which provides a more structured approach for exploring barriers to action

Focusing on Behaviour Change & Habit Formation

Consumer marketing spend has historically been weighted towards the top of the purchase funnel. The implicit assumption was that if people weren't acting as we'd like, it's because they weren't aware of our offer — or perhaps they lacked proper motivation. This line of thinking led quite directly to enormous investments in advertising and increasingly dramatic offers (lower prices, new features, etc.), both of which are often quite expensive and wasteful. Of course, the revolution in digital marketing has increased the emphasis on direct response and conversion, yet the underlying thinking remains largely the same: If we can simply drive attention and engagement — and then try enough different messages or offers via A/B testing — conversion rates will eventually inch upward.

Behavioural science reminds us that intent does not consistently translate to action. Importantly, it reinforces that there is a true science to helping people overcome "intent to action gaps" and to adopt new behaviours. For marketers, the implications are clear: We can't simply congratulate ourselves on creating awareness (and assume that sales will follow). Instead, we need to focus further down the sales funnel, and actively help people convert their intentions into specific actions. These "Last Mile" efforts should be systematically integrated within marketing plans and design briefs, so that they become a consistent part of our thought process and toolkit. This will help marketers to think beyond "above the line" campaigns — and to focus needed energy and resources on efforts throughout the full consumer journey, including conversion and use.

Tied to this, more attention should be paid to the science of helping people form and retain new purchase and consumption habits. For example, on a recent project to promote handwashing among children, we came up with the idea of using a small stamp, which could be washed off with soap or hand sanitizer after multiple washes per day. This became a playful classroom ritual: the children's hands were stamped in the morning, linked to the existing routine of taking attendance, which gave teachers an incremental way to monitor hand hygiene by seeing how much of the stamp had disappeared throughout the day. At the end of the day, the children would proudly show their parents or caregivers their clean hands (thus providing immediate positive reinforcement). This intervention, among others, was deployed alongside sanitising products in schools and helped to reduce Covid transmission by 14%. By gamifying the hygiene routines, the intervention made children more engaged and compliant.

Successful apps also typically leverage these behaviorally-informed strategies to promote habitual usage. For example, Duolingo drives habitual interaction by framing daily usage as 'streaks.' As you take your daily language lesson, the app congratulates you for maintaining your streak and celebrates longer streaks with confetti visuals. Conversely, if you haven't logged in recently, the Duolingo icon on your home screen will start to 'degrade' and look quite sad: A clever and salient reminder to log back in and start another streak.

For further information and perspective on behaviour change and instilling new habits, we recommend:

- The EAST framework, which provides a simple yet powerful approach to applying behavioural insights and driving behaviour change

- These articles that share insights from recent books by Professors Katy Milkman (How to Change) and Wendy Wood (Good Habits, Bad Habits)

Creating Peak Moments & Memorable Experiences

Behavioural Science also has much to teach us about how people process their experiences, given our inherent mental limitations (and continual effort to manage/limit our "cognitive load"). Perhaps the most digestible and actionable concept is the "Peak & End Rule," which was first articulated by the Nobel Prize winner Daniel Kahneman. It states that the most powerful and memorable aspects of an experience are its "peak" moments (i.e. the most intense moments) — and the very end. Of course, this simple concept has powerful implications for customer service and/or experiential marketing.

The most obvious takeaway is to focus upon the conclusion of a client experience (such as the check-out, invoicing or payment process), which can sometimes leave a lasting sour note in the customer's mind. Perhaps a more interesting implication is to devote more energy towards creating "peak" moments within the customer journey: While "doing the basics" and avoiding the negatives may be table stakes, it's disproportionately powerful to create truly outstanding (and thus memorable) interactions and experiences. These small "micro-moments" of delight ("peaks") tend to linger in people's minds (and ultimately lead to brand advocates and repeat customers).

Of course, luxury marketers (such as the perfume company Penhaligon's) are skilled at creating multi-sensorial experiences of this nature, by making a production out of packing up your product upon purchase. The in-store staff wrap your purchase in tissue paper and spritz it with your preferred fragrance before placing it within the bag, along with samples of other fragrances. However, it's notable that mainstream brands (such as DoubleTree Hotels or Metro Bank) have also leveraged this principle in their own (modest) ways, through unexpected surprises or extras, such as warm cookies or pet treats.

These examples support a broader learning from the Behavioural Science literature (and our collective experience): "peak" moments are highly correlated with doing or delivering the unexpected. And as it turns out, high-leverage situations (such as handling customer service inquiries, fielding special requests or complaints, etc.) are actually excellent opportunities to surprise and delight, because tensions are high and customer expectations are typically quite low. So "going the extra mile" at that moment will have a disproportionately high impact on customer memory and perception (and social media chatter). For example, you may have heard the (true) story of a 10-year-old girl who posted about losing her LG phone and was soon surprised to receive a new LG Swift L3II from the company. It was an unexpected act that most likely won the company a lifelong customer and advocate.

For further information/insights on the Peak-and-End rule and creating memorable experiences, we'd recommend:

- This article further explaining the peak-and-end rule

- The book The Power of Moments from Chip and Dan Heath

Enhancing & Transforming the Marketing Function

Of course, these three ideas and applications (Segmentation, Habit Formation & Peak Moments) don't capture the full scope of this very diverse and dynamic field. Yet hopefully, they begin to illustrate how Behavioural Science can provide a powerful complementary lens on our marketing efforts.

Just as importantly, these applications illustrate that Behavioural Science has far more to offer than simply "nudging." By more thoroughly understanding how people process information, make decisions and even build memories, we can better focus our energy and resources (towards the most promising segments and specific behaviour changes), improve customer retention (by creating and reinforcing habits) and create more brand advocates (by creating memorable experiences through unexpected peak moments). And certainly, sustained progress in any one of these areas (prioritisation, retention and advocacy) is likely to impact the bottom line.

Scott Young is an independent advisor and educator, whose expertise and passion lie in helping leaders and organizations to apply Behavioural Science ethically and effectively. He can be reached at scott.young@bescy.org.

Ted Utoft is a journalist turned researcher with a passion for understanding behaviour in context. He is the UK CEO and global Chief Growth Officer for BVA Nudge Consulting. Ted has led research and consulting projects for private and public sector clients in over 25 countries. He can be reached at ted.utoft@bvanudgeconsulting.com

Improving Your Marketing Efforts with Behavioural Science was originally published in Behavioural Insights on Medium, where people are continuing the conversation by highlighting and responding to this story.

British tourists are being evacuated from wildfires on the continent, and meanwhile, our political leaders have been flirting with abandoning or watering down key climate policies. It's dispiriting, but having studied the behavioural science of climate policy for the last decade, I can't say I'm surprised.

Let's remind ourselves, in case our politicians have forgotten: voters want strong climate action. The Government's data show that 82% of Brits are concerned about climate change, and concern is highest in London (45% 'very concerned', relative to 39% nationally). Only 9% oppose Net Zero, and in fact a majority think we should get there before 2050. This isn't a fad: support has been consistently high through multiple Prime Ministers, a cost of living crisis, Covid-19, Brexit, and beyond.

And why not? The truth that Net Zero is a 'win-win' feels more relevant than ever. Cheaper bills, greater energy security, warmer homes, cleaner air, new jobs, the economic growth opportunity of the 21st Century, and we'll save the planet in the process. These things win votes. Backtracking would be a disastrous over-correction.

And yet the rhetoric around London's ULEZ expansion has highlighted the real challenges we face when it comes to delivery. I'm sure the residents of Uxbridge want to breathe cleaner air, but, like the rest of us, they may be less keen to stump up cash for an electric car, or walk their kids to school.

And there's more of this to come. 62% of the emissions cuts we need depend on 'behaviour change': mainly the adoption of green tech like zero-emission cars and heat pumps, but also some modest lifestyle change around walking and cycling, diet, and aviation. Even the other 38% will depend on hard-won public agreement, for transmission infrastructure and wind turbines, for example. It's all behavioural and, therefore, politically volatile.


Our data show that 89% would like to make more sustainable choices in their life. But a similar 88% say it's too often too hard because it's too expensive, inconvenient, or confusing. That's why 87% wish leadership from government and businesses was stronger — bold policy, yes, but policy which makes the greener choices easier, more available, more understandable, more affordable.

Public goodwill is there, to a point. Our surveys also show that 61% would be 'willing to switch to a low emission vehicle in the next year', which is just what the ULEZ asks of them. 72% would be willing to retrofit their home; 69% to take more public transport, 71% to fly less often, even. But being 'willing', in principle, is not the same as being happy to pay for it, or undergo great personal inconvenience to achieve it. The Mayor of London faces legal requirements on both national carbon emissions and local air pollution, so I'm not saying there's an easy alternative to the vital ULEZ proposals. But as a matter of principle, climate policy needs to remove the trade-offs for citizens, not impose them.

That's why we need electricity to be cheaper relative to gas (it would favour heat pumps and electric cars — and it's madness that carbon taxes are applied to electricity but not gas). It's why we need new labelling systems so that any of us have a hope in hell of working out which pair of jeans, pack of sausages or pension is the greener one. It's why we need hyper-slick 'one-stop-shops' for household energy efficiency improvements, bundling together attractive finance, advice, home surveys, reliable installers and consumer protections.

It's also why policies like the Clean Heat Market Mechanism (rarely talked about, which is a point in its favour) take the right approach. It is a tradeable levy, placed on heating manufacturers from 2024, that requires them to meet targets for sales of low-carbon heat pumps relative to boilers. If they fail to do so, they'll have to charge more for gas boilers to cover this cost, but that's not really the point, because that would be a losing business strategy.

The market will react, and manufacturers will be drawn to all sorts of innovation — in the product, manufacturing techniques, home surveys, consumer protections and guarantees, installation, pricing and marketing. In short, anything and everything that makes us genuinely want a heat pump instead of a boiler because they'll be better and cheaper. A true win-win that helps us adopt the right behaviour, without the difficult trade off.

So let's not back-track. Let's just get better at policy-making.

By Toby Park, Principal Advisor, Head of Energy, Environment & Sustainability, BIT


Do we want Net Zero or not? We do, but the UK's ULEZ debacle highlights the political high-wire was originally published in Behavioural Insights on Medium, where people are continuing the conversation by highlighting and responding to this story.

In his best-selling book on motivation, Drive, Daniel Pink argues that job autonomy is one of three essential ingredients for motivating staff, alongside mastery and purpose. Typically, efforts by organisations to encourage autonomy fall under the banner of 'empowerment'.

Research has shown that empowering employees, by giving them the freedom and resources to make important decisions, leads to higher levels of job performance and satisfaction. This makes a compelling case for empowering your employees. But how can organisations put it into practice? What actually works?

One obvious avenue is leadership. The idea is that managers are the enablers providing guidance, resources and support for people to be more autonomous. This is necessary, but not sufficient. If staff have strict roles or heavy workloads, will an adjusted leadership style really empower them? Do leaders really understand the day-to-day work of teams across the organisation well enough to know what support they need? If not, why not be genuinely empowering and ask staff to decide what would solve their problems? Why not give employees the time, support and guidance that helps them to help themselves?


Ground-up employee empowerment in the Ministry of Defence

Together with the UK Ministry of Defence (MOD), we explored whether a ground-up approach to empowerment can be effectively scaled across a government department. We facilitated 28 teams from across the MOD to identify barriers to empowerment and develop tangible solutions to drive organisational change.

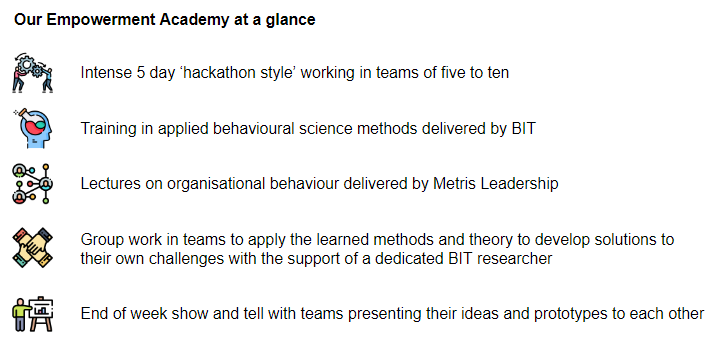

The interventions implemented by the teams optimised processes, culture and policies for more than 100,000 staff members across Defence. We did this by supporting those teams through their own compressed behavioural insights project, which centred on a 5-day empowerment academy.


What solutions did teams come up with?

So what did the teams focus on, and what interventions did they develop? Some teams arrived with a problem in mind already, while others ran surveys and focus groups with their colleagues to understand what challenges they faced.

Here is a selection of the challenges teams addressed and what they came up with:

Challenge — Roles and responsibilities: knowing who does what. The MOD employs over 250,000 people. Add to this geographically dispersed teams and hybrid working and it's unsurprising that many academy teams found understanding roles and responsibilities across their business a barrier to getting things done.

Solution — My role on a page: To save people time by making relevant information visible, many teams adapted a 'role on a page' intervention BIT previously developed for another MOD team. The one-page document summarises basic information about a person's roles, responsibilities and objectives. Teams linked these one-pagers in their email signatures and in org charts to make them easily accessible.

Challenge — Knowledge management: quick access to the right information. Knowing what your colleagues can support you with helps, but more is needed to empower people to do their jobs effectively. Lots of teams also struggled to have the right information to hand when they needed it. The problem was usually behavioural: the IT system was there, but people just weren't using it the way they were supposed to.

Solution — STOP, STOP, STOP! Day: One team really got to grips with the problem and developed an innovative digital clean-up workshop as a team-wide solution. During the STOP, STOP, STOP! Day, participants are introduced to, and apply, best-practice techniques for organising their data in a way that ensures shared access. This has already been implemented, and evaluations of the first workshops showed that participants found the format useful and valued the transfer of skills that empowers them to do their work effectively.

Challenge — Learning and development: clear opportunities to grow. Giving staff access to the right people and the right information makes collaboration easy. Offering them the right learning opportunities will refine their skills to make these collaborations more fruitful. In focus groups, staff voiced their frustrations over complicated and confusing L&D processes, which make accessing the L&D offer difficult.

Solution — Make learning in the MOD Easy, Attractive, Social and Timely: Using BIT's EAST framework, the L&D team developed a plan to make the MOD's L&D offer more accessible and the processes smoother. Shortly after the academy week, the team launched a new L&D hub that incorporated the key functionality requested in the focus groups. The team also developed a behaviourally-informed decision map that makes it easier to navigate the administrative process for getting approval for L&D courses.


Bottom-up initiatives address what really matters to employees

The academies proved to be a practical and tangible way to put empowerment into practice at a team level. Following the 5-day academy, over 90% of participants thought it was likely that they would implement the recommendations they developed. When we checked in with them a few weeks later, most had done so or were on track to do so. The feedback we received on the format was very positive. Participants valued the uniqueness of the experience, with 9 out of 10 saying they would recommend the programme to other teams in the MOD.

In our work with the MOD, we learned that successful empowerment from the bottom-up is not difficult to achieve. It's just a combination of giving people the right tools, some protected time and a bit of expert facilitation. A unique feature of this approach to organisational development is that it's empowering by design. Giving staff ownership over the problems that affect them, and letting them come up with their own solutions was genuinely empowering.

"It gave us the time, space and a framework to think"

– Feedback from an academy participant

If you'd like to find out more about this project and our work on organisational behaviour please reach out to edward.flahavan@bi.team.

By Ed Flahavan, Principal Advisor, Home Affairs and Security & Martin Wessel, Associate Advisor, BIT


Empowering staff from the ground up was originally published in Behavioural Insights on Medium, where people are continuing the conversation by highlighting and responding to this story.

Net Zero relies on behaviour change. According to the UK's Committee on Climate Change, the majority of future emissions reductions — 63% — will need to come from changes in how we travel, how we power and heat our homes, what we eat, and what we buy.

But, what does that really mean for the day-to-day? What can we actually do to help in the fight to decarbonise? Not such a silly question when you consider that 83% of people in Europe can't identify what green behaviours they need to carry out, or that the UK public really struggle to accurately rank different green actions based on their impact (people actually perform worse than random!).

We're often the first to point out that 'information alone isn't enough to drive big changes in behaviour' (particularly where big cost or convenience barriers exist). But, with such a large shift in public action needed, and a lack of strong public understanding of green behaviours, it's clear that public engagement, information and effective communications are a vital foundation.

We have been working with the Welsh Government and SBW Advertising to develop 11 evidence-based Principles for Climate Change communications for behaviour change.

11 Principles of Climate Change Communications for Behaviour Change

A comms campaign can serve a variety of purposes: from building trust in Government, to shaping public awareness around green choices and upcoming policies, as well as directly encouraging green actions among the public. Borrowing from the Welsh Government's own "4 E's Framework for Net Zero Public Engagement", comms can be harnessed to Exemplify action (e.g. highlighting what's being done), Engage people with the issue, Enable change (e.g. providing tips or signposting to policy support), and Encourage greener choices with direct calls to action.

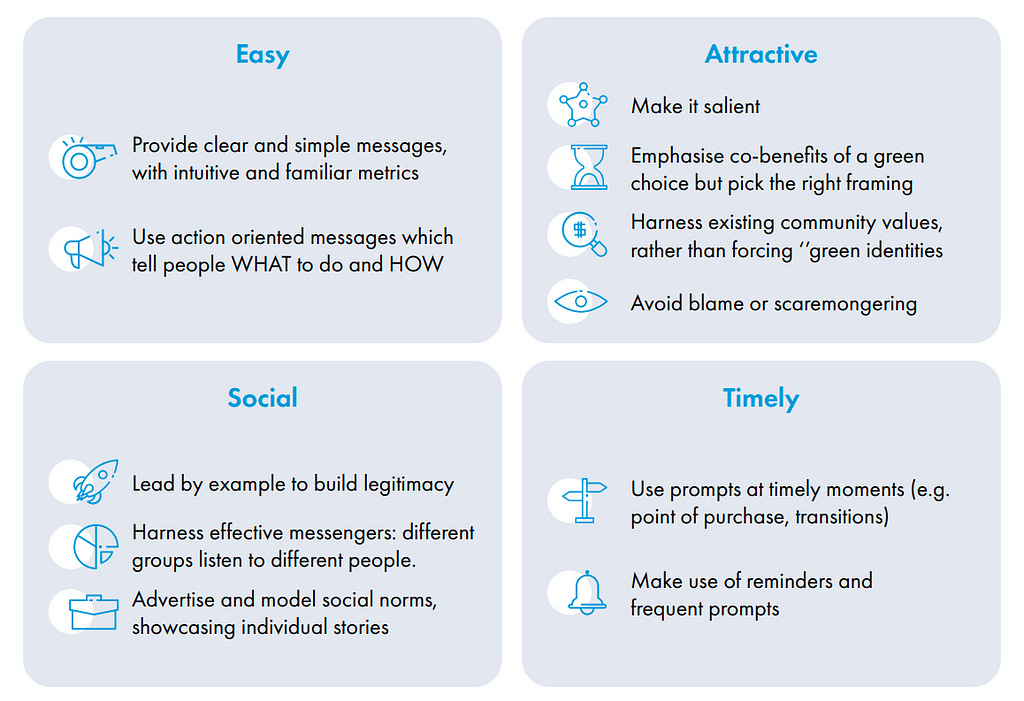

But when using comms, what specifically should you say, and how should you say it? We summarise 11 tips which emerged from a recent evidence review on this topic undertaken for the Welsh Government in partnership with SBW Advertising, organised within BIT's EAST framework.

Below, we bring these principles to life:

EASY

People are confused and overwhelmed about their role in Net Zero — 83% of us are not certain what green actions we should start with. Studies show that making green choices as easy as possible helps people to engage — for example, i) making green energy tariff options the default (thus making the green choice the easiest option to choose) increased uptake ten-fold, and ii) making green diets easy to access (by doubling the availability of veggie meals in canteens) increased vegetarian sales by between 40–80%. So how can we make climate action easier just through comms?


#1: Provide clear and simple messages, with intuitive and familiar metrics. We do not have unlimited cognitive bandwidth. We remember simple messages more accurately and are more likely to act correctly if they're clear. Therefore green communications should be short, punchy, and easy to digest. Communicating clearly and simply also involves using metrics that the public can understand. For example, studies have shown that people are more likely to understand information when it is presented with relatable and familiar analogies (e.g. presenting kcal as 'minutes of exercise', or kWh as running cost), or with personally relevant examples that are not psychologically distant (e.g. talking about the local impacts of climate change, such as local species decline and flooding, rather than distant melting ice caps or polar bear extinction).

#2: Use action-oriented messages that tell people WHAT to do and HOW. Given the lack of knowledge that people have about i) what green actions to prioritise and ii) how to do them, providing clear calls to action and 'procedural (how to) information' is key. Explain specifically what to do (e.g. visit a webpage; swap driving for the bus; eat less meat and dairy) and how (tips, recipes, meal plans). Manchester City Council's green diets campaign, below, does a good job of the former.

The recent DESNZ campaign showing people how to turn down their boiler flow temperature did a good job of the latter, with step-by-step videos modelling the action. This principle can also be demonstrated by the clear visual labelling on recycling bins (below). It's not just imploring people to recycle more; it's addressing a real knowledge barrier to make the correct action easier (and indeed, data suggest it can increase self-reported recycling knowledge by up to 88%). We're fairly accustomed to seeing this approach with recycling, but it's surprisingly absent from many campaigns telling us to take other vague or complex actions, like switching to a heat pump or cutting our food waste.

(1) Manchester City Council's green diets campaign, and (2) Recycling labels clearly depicting what can be disposed of.

ATTRACTIVE

When green choices feel desirable and salient, people are more likely to do them. Many green choices are perceived to be quite unattractive, from plant-based meat alternatives that are associated with being less tasty to electric vehicles being perceived as expensive or inconvenient. Others just might not be on our radar against a backdrop of habitual consumption and a busy life. To make green choices feel attractive and visible, your campaign should:

#3: Make it salient and visible. People attune their attention toward stimuli that are salient, unexpected or novel, and so climate communications should be just that. For example, hand-written letters increase response rates by around 20% because they are a relatively novel prompt, just as adding a green stripe on electric vehicle number plates acts as a new prompt for sustainability (see below). Equally, alluring prints on bins (e.g. cow prints) significantly increased recycling, by up to 25%, and adding playful green footsteps leading to bins reduced littering in Darlington, UK, by over 15%.

(3) Green number plates of EVs in the UK are salient visual indicators of a green choice, (4) Green footsteps in Darlington leading to a bin

#4: Emphasise co-benefits of a green choice but pick the right framing. Environmental motivations are not always the main motivations that people have for being green, and emphasising personal co-benefits of a green choice can have a bigger impact. For instance, emphasising the co-benefits of Net Zero increases the amount people are willing to donate to climate protection causes by about 10%. Selecting which co-benefit to advertise can be tricky, and its success will depend on the target behaviour context and target audience.

But some examples include framing Net Zero around public health benefits, national energy security benefits, economic benefits and green job benefits. Below, we show how the Sustainable Energy Authority of Ireland promotes retrofitting by highlighting its comfort benefits rather than its environmental ones. Indeed, further BIT research showed that framing heat pumps around 'heating that works', 'energy security', and increased 'property value' increased people's intentions to buy them more than messaging about their environmental benefits did.

(5) Sustainable Energy Authority of Ireland's retrofitting campaign

#5: Harness existing community values, rather than forcing 'green identities'. Climate communications that speak to people's existing, established values can be very effective, often more so than those aiming to instil new green values. For example, public approval of and agreement with a policy increase if it is framed around common values of fairness, morality, impact on community and safety, inter-generational duty, avoiding waste and saving money. The seminal 'Don't Mess with Texas' campaign is a good example: it harnesses Texans' pride and patriotism to reduce littering.

#6: Avoid blame or scaremongering. Fear-, stigma- or guilt-based environmental messaging can backfire, as it makes people feel demoralised and avoidant. Although fear messages receive high engagement on social media and can be associated with pro-environmental behavioural intent, other studies find that triggering positive emotions (e.g. gratification) is more effective than guilt.


SOCIAL

With all green choices, people are more likely to behave sustainably if doing so is perceived as the normal, socially accepted thing to do. For example, when people can see that their neighbours have solar panels, they are 44% more likely to get them themselves because it's seen as the norm. How can a comms campaign make green action feel social?

#7: Lead by example to build legitimacy. Strong and credible leadership makes a difference when it comes to communications. Governments should lead by example through their own behaviours, public procurement and long-term policy commitments to build trust and legitimacy. This sends powerful signals about norms and intentions, and can have a meaningful impact on action. The Japanese Government's CoolBiz campaign demonstrated this: it promoted energy-efficient behaviour in offices by having the Prime Minister and members of Cabinet wear loose-fitting, short-sleeved outfits in formal settings, normalising the use of office air-conditioning at lower settings. The campaign is estimated to have reduced CO2 emissions by 1.4 million tonnes since 2005.

#8: Harness effective messengers: different groups listen to different people. We are more responsive to environmental messengers whom we trust and identify with, so endorsement from trusted, credible, expert and legitimate messengers is crucial for Net Zero. Importantly, which messengers work varies across segments of the public. The Government's 'One Step Greener' campaign speaks through a diverse set of 26 messengers to reach different demographics across the UK, ranging from youth activists to voices for Muslim women and women of colour, and spanning a variety of roles (TV hosts, influencers, NHS staff).

#9: Advertise and model social norms. Presenting people with information about positive social norms can significantly increase uptake of green choices or behaviours. For example, communicating to energy customers by comparing their energy use to that of their neighbours can reduce energy consumption by 2%, and prompting recycling with on-pack messages (saying "Most people recycle me") can increase recycling by 7% (see examples below).

(6) Social norm prompting recycling; (7) ASDA refill social norm prompt.

TIMELY

With all green choices, people are more likely to behave sustainably if they are prompted at the right moments. For example, helping people to plan their time makes them 5% more likely to commute to work sustainably, and SMS reminders help increase active travel too. To make a climate communications campaign more timely, you should:

#10: Use prompts at timely moments (e.g. point of purchase, transitions). Prompting people at key moments of a user journey can make a real difference. For example, eco-labels can play a key role in shifting customer behaviour if they are made salient at the point of purchase. In a BIT experiment run with DESNZ, showing people the 'lifetime running cost' of an electrical appliance at the point of purchase increased green choices by up to 14%. Other key moments for changing habits and choices are transitions that interrupt people's typical patterns of behaviour ('moments of change') and provide a unique window for a reset (e.g. moving house, or when a car or boiler breaks down). In a BIT trial, communications about a bike-share scheme were four times more impactful when delivered to people who had just moved into the area.

#11: Make use of reminders and frequent prompts. Communications campaigns can send reminders via SMS or letter to shift actions. For example, a timely mobile alert asking Californian residents to use less energy to avoid a blackout helped reduce demand by 1.2 gigawatts from a peak of 47,357 megawatts (a reduction of roughly 2.5%). BIT also studied the impact of prompting EV purchase on the DVLA website while people paid vehicle tax, finding that a timely reminder increased traffic to the Government's Go Ultra Low website. Moreover, both the frequency and the length of exposure make a difference: a single exposure to environmental messaging has a weaker effect than longer exposure. For instance, farmers who viewed more material about sustainable agriculture used up to 60% less fertiliser and pesticides, which damage the environment.

Overall, communications campaigns are a crucial part of the Net Zero puzzle, and public engagement, education and encouragement are key pillars in enabling people to make greener choices. Comms alone won't be enough: substantive policy changes are needed to make green choices cheaper, more accessible and more attractive. But comms can support this too, by ensuring that policies are framed and communicated effectively, seen as fair, and cut through to the public's awareness so that people can access the help on offer.

We hope these 11 principles give you a solid foundation on which to build your own campaigns, but we encourage you to reach out to the Behavioural Insights Team if you are interested in further collaboration on climate communications for behaviour change.

By Toby Park, Principal Advisor, Head of Energy, Environment & Sustainability, Jake Reynolds, Advisor, Environment, Energy & Sustainability & Kristina Londakova, Principal Advisor, BIT


Sustainability comms 101: Mastering climate communications for green behaviour change was originally published in Behavioural Insights on Medium, where people are continuing the conversation by highlighting and responding to this story.

A new way to talk through controversy

If you feel like it's harder than it used to be to engage productively with people whose views oppose your own, you are not alone. The United States is a global leader in political polarization, and the problem only appears to be growing.

From the environment to education, few topics escape the rapidly widening partisan divide. The consequences of this are particularly serious for public health, as we've seen through the COVID-19 pandemic. Partisanship influenced stay-at-home orders, social distancing, and vaccination behaviors, which all affected (and continue to affect) mortality rates.

Getting a vaccine or taking medicine should be health decisions, not political ones — but here we are. So what's the best way for state and local governments to positively engage with constituents, and ultimately shift behaviors to benefit public health?

Don't just listen — H.E.A.R.

Conversational receptiveness

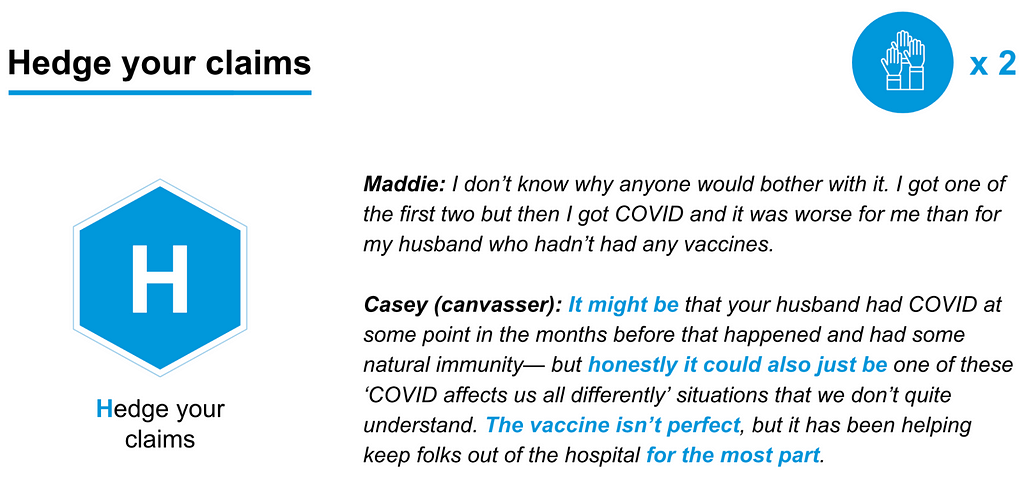

Conversational receptiveness is a new, evidence-based approach with the potential to cool down conversations on heated topics. Grounded in a deep understanding of human behavior, conversational receptiveness (CR) is defined as:

The use of language to communicate your willingness to thoughtfully engage with opposing views, even if the other person strongly disagrees with you, or is not receptive themselves.

The goal of CR is to have a positive conversation in which the other person leaves feeling heard. People who use CR are seen as more reasonable and objective, which can encourage the other person to shift their behavior positively, or at least to be open to a future conversation.

The research behind CR

The key principles of conversational receptiveness were identified and researched by Dr. Julia Minson and her team at Harvard's Kennedy School of Government, Harvard Business School, and Imperial College London. In a series of four studies, the team trained an algorithm to identify CR's core elements, showed that CR predicts conflict outcomes in the real world, and tested how well people could learn and apply these principles.

The last study found that ideological opponents saw people who used CR principles as more persuasive. Opponents also said they would be more likely to collaborate with them in the future, suggesting long-term promise for building trust and cultivating positive sentiment.

How to use CR? Don't just listen — H.E.A.R.

H.E.A.R. is a mnemonic for the four key ways of expressing Conversational Receptiveness. You can use them in conversation to show that you're receptive to the other person's point of view:

- Hedge your claims: Demonstrating humility through hedging (rather than making unequivocal claims) shows that you are aware of the nuance of a topic and have arrived at your position after seriously considering others. Using words like "might," "most," and "somewhat" can help convey this sentiment.

- Emphasize agreement: While the focus of the conversation might be one specific topic, it can be helpful to look for tangential points that you agree on and point them out. For public health, that might look like emphasizing agreement about being concerned for the health of vulnerable family members.

- Acknowledge other perspectives: Paraphrasing what another speaker has said about a particular point can show that you're listening carefully and genuinely trying to understand their perspective. It is not enough to use phrases like "I hear you"; you need to show receipts (e.g., "I hear you: you're worried about your daughter getting the vaccine after your aunt had a reaction").

- Reframe to positive: Framing ideas and messages in a positive way can express that you come in peace. Particularly if you can sense the tone of the interaction becoming adversarial, complimenting something the other person said that impressed you or expressing gratitude for their willingness to even have the conversation can get you back on the same side before you continue.

Using CR to have hard conversations

In March 2023, BIT partnered with the University of Louisiana at Lafayette and the Louisiana Department of Health to train COVID-19 vaccine canvassers in CR principles. We designed the training to help them apply CR in live conversations and build trust with community members (especially those who are hesitant about or opposed to vaccines).

The secondary goal in teaching canvassers to apply CR in these interactions was to rebuild trust in public health more generally. Early findings show that CR leads to an interest in future collaboration, indicating that it might improve sentiments between disagreeing parties. Even if one CR-infused interaction didn't change a community member's mind on vaccines, it could pave the way for greater confidence in public health in the future.

Over half a day, we trained about 40 canvassers. Participants learned the fundamentals of CR, customized talking points and go-to words and phrases to adapt CR to their own personal style, and practiced through roleplay and feedback sessions.

An example from our in-person workshop for COVID-19 vaccine canvassers.

What's next?

The COVID-19 vaccine canvassers we trained are excited to use CR in their work this summer, as they speak to communities with low vaccination rates about how to protect themselves.

As the body of evidence supporting CR grows, we're excited about its potential to foster positive behavior change around other polarized topics. Its principles could be applied to climate change, voter registration, and more.

By Leah Everist, Senior Advisor, BIT

Deadlocked? Try conversational receptiveness was originally published in Behavioural Insights on Medium, where people are continuing the conversation by highlighting and responding to this story.

But only when they are designed with an understanding of human behaviour

Digital health technologies, including health apps, have the potential to revolutionise healthcare delivery by improving patient outcomes, reducing costs, increasing efficiency, and making healthcare more accessible.

For health apps to be effective, they must be designed with an understanding of human behaviour. Digital services, whether offered through apps or online, need to be easy to use and embedded in the wider system so they can scale, and so that service users (be they clinicians, patients or administrative staff) use them as intended.

At BIT, we are proposing three ways in which we think behavioural science can make health apps more effective:

- Making sure health apps work for the needs of service users

- Widening the reach of these solutions by testing which comms work best, and for whom

- Evaluating apps to ensure they deliver their intended objectives

Why is digital health important?

Digital health solutions, including health apps, are becoming increasingly important in healthcare services. In the UK, a recent Labour Party Mission highlighted the party's vision for more digital health solutions in the NHS, such as making the NHS App the single front door to all NHS services.

Digital health solutions are technology-based applications that are used to improve healthcare outcomes. This includes (but is not limited to): electronic health records, mobile health apps, telemedicine and wearable devices.

Digital health solutions also play a key role in the NHS Long Term Plan, which sets out a 10-year strategy for transforming the NHS and its vision for the future of healthcare delivery in the UK.

One of the key aims of the plan is to ensure that all patients have access to digital healthcare services, such as online consultations and remote monitoring, by 2024. The plan also aims to introduce electronic patient records across the NHS by 2024, enabling healthcare professionals to access patient information more easily and quickly.


What is the problem?

However, simply introducing digital health solutions is not enough to ensure their successful adoption and usage by healthcare professionals and patients.

This is where behavioural science comes in. Behavioural science is the study of human behaviour, and how it can be influenced by various factors such as social norms, cognitive biases and decision-making processes. By applying insights from behavioural science, digital health solutions can be designed and implemented in a way that maximises their adoption and usage.

For example, there are a number of online health and prescription services that allow patients to arrange appointments with their GP and order repeat prescriptions through an app. However, patients' experiences of digital NHS access are often clunky and fragmented, and uptake of digital options for ordering repeat prescriptions and booking appointments in primary care remains low despite the functionality being widely available.

Successful digital solutions have proven to be game-changers in the healthcare industry. Not only can they improve the overall patient experience, but they can also reduce healthcare costs.

For example, the Electronic Prescription Service (EPS), which is used in 93% of England's 7,300 GP practices, saved the NHS £136 million between 2013 and 2016. Another successful digital solution is the NHS e-referral service, which now covers every hospital and GP practice and saves the NHS over £50 million annually.

However, it's not just large-scale digital solutions that are making an impact. Smaller solutions, if implemented nationally, can also make a significant difference. In 2018, a maternity app was launched at an NHS trust, which provided real-time access to maternity records for patients.

This solution received very positive feedback, and it was estimated that it could save the trust over 400 hours of staff time a year. Extrapolated across the entire UK, this could translate into approximately £2 million in savings annually.


Our services

To make sure digital health solutions really work, we need to make progress in three key areas. Below are three specific ways in which behavioural science, and BIT in particular, can help make digital health, and especially health apps, more impactful:

- Behavioural Optimisation: Making sure health apps work for the needs of service users. Many health applications are designed for specific user groups. For instance, there are wearable-integrated apps designed to improve rehabilitation for injured armed forces personnel. Similarly, a range of apps, from those focused on mental health provision to those focused on illness detection, target specific demographics. BIT can use behavioural science techniques and user group testing to make sure your apps are designed to best meet users' needs.

- Expanding Reach: Nailing the comms; what approach works best? The Behavioural Insights Team are experts in behavioural science, and we know that different versions of the same underlying message can perform very differently and achieve very different outcomes. BIT's online Predictiv platform enables us to test messaging with large numbers of users to identify the best performers for you to include in your products.

- Impact evaluation: Does it deliver its intended objectives? A healthcare app must, at its core, deliver a healthcare benefit. Yet many are, at best, unevaluated and, at worst, fail to deliver the desired benefit. If healthcare is to become more technologically driven, then we must be able to establish rigorously whether, and to what extent, an app is achieving its intended outcome.

By Dr Helen Brown, Interim Director of Health & Wellbeing, Dr Giulia Tagliaferri, Head of Quantitative Research, Dr Jovita Leung, Advisor & Morgan Griffiths, Senior Advisor, BIT


Digital apps are game-changers in healthcare was originally published in Behavioural Insights on Medium, where people are continuing the conversation by highlighting and responding to this story.

We analysed 100 slot game ads — and found dozens of behavioural biases

The ultimate goal of advertising is to influence behaviour. That is, to encourage people to take a particular action.

Like other sectors, the UK gambling industry appears to be trying to entice customers at massive scale, spending more than £1.5 billion on advertising every year. Existing evidence suggests that this advertising encourages gambling and may lead to risky behaviour.

But we don't know which specific features or content of adverts influence people's actions, or how conscious people are of the risks or implications of their choices. BIT's Gambling Policy & Research Unit has developed a new, rigorous methodology that will help us answer these questions.

A lack of transparency is a common element

A typical slot game advert

We conducted a content analysis of 100 real online slot game adverts that were live on Facebook and Instagram.

A mock-up of a typical slot game advert (defined by features that were present in >50% of our sample, or the next most prevalent).

We found dozens of design features leveraging behavioural biases across the 100 adverts which could be negatively impacting gambling behaviour. A lack of transparency was a common element, appearing in the form of:

- Misleading depictions of risk: Ads for specific slot games lacked odds information. Most ads highlighted low-risk offers and potential wins, and used unclear language like 'playing' instead of 'betting'.

- Minimised important terms & conditions: Prominently featured T&C statements like "No deposit required" overshadowed other important terms like age restrictions and wagering requirements.



Homing in on features to test

To home in on what we should test in part 2 of our study, we used existing behavioural science evidence and feedback from the Committee of Advertising Practice. We also consulted our Steering Group, our broader network of policymakers, and our GPRU team to shortlist what to test next. The features fall into three groups:

- Salience of free incentives: Adverts offer free spins or other incentives to entice new customers to gamble, which distracts from other important information such as T&Cs. Furthermore, important details like wagering requirements tended to be hidden, making it difficult for people to know the true costs involved.

- Lack of transparency: Many adverts did not include odds information, which made it difficult for people to assess the true risk of gambling. Additionally, some adverts used vague language or focused on the potential wins, which could give people the impression that gambling was a sure thing.

- Misleading depictions: Adverts depict slot games in a way that makes them seem more exciting and profitable than they actually are.

We plan to test these features against a "typical" advert and one designed with good practices in mind. Our goal is to understand if these features affect customers' perceptions and behaviour beyond the expected commercial impact of an ad.

What's next

To meaningfully reduce harm, we should understand every touchpoint of a person's journey with gambling — from seeing advertising to interacting with operators themselves.

This methodology has provided us with the findings we need to now design and run an online randomised controlled trial to test specific features. The results will reveal whether these features hold the potential to cause harm, and help shape future policy for the gambling market. In the meantime, you can explore the full report of our slot ad content analysis here.

By Lauren Leak-Smith, Senior Advisor, Esther Hadman, Senior Advisor, Tom van Zantvliet, Associate Advisor, Louis Shaw, Associate Research Advisor & Eleanor Collerton, Advisor, BIT


We analysed 100 slot game ads — and found dozens of behavioural biases was originally published in Behavioural Insights on Medium, where people are continuing the conversation by highlighting and responding to this story.

In a recent blog I used AI to create pen portraits of UK voters and then generate survey responses about whether or not they supported the recent strikes by junior doctors. The results showed that for certain demographic groups at least, AI can generate pretty accurate survey results.

But beyond just understanding whether or not these AI personas support the strikes, a natural follow-up question is why they hold that view. Knowing what underlies someone's opinion would allow strategists to develop communications campaigns to influence them.

In this blog we'll move from getting simple survey answers from the AI personas into focus group-like interactions to try and understand the why. We'll also use this to generate and test out messaging strategies.

I pick up from where I left off in the last blog, so you might want to have a quick read of that before diving in.

Why do they support or not support the strikes? And what might change their mind?

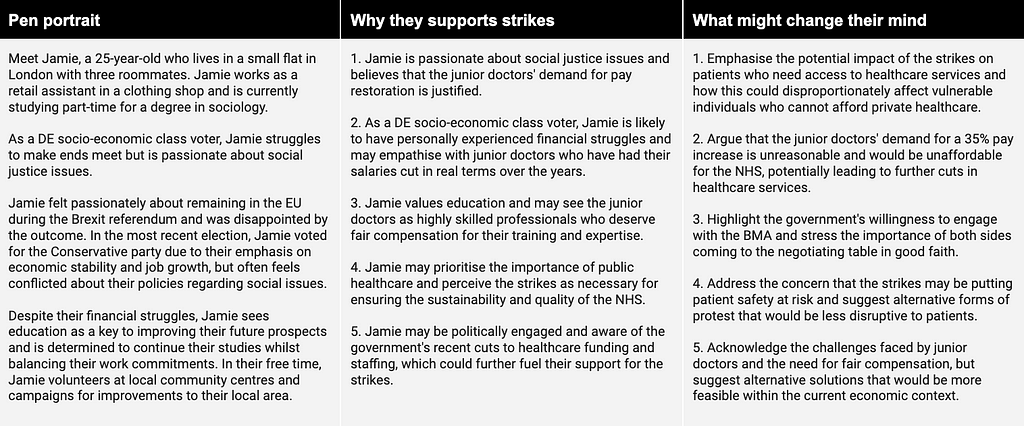

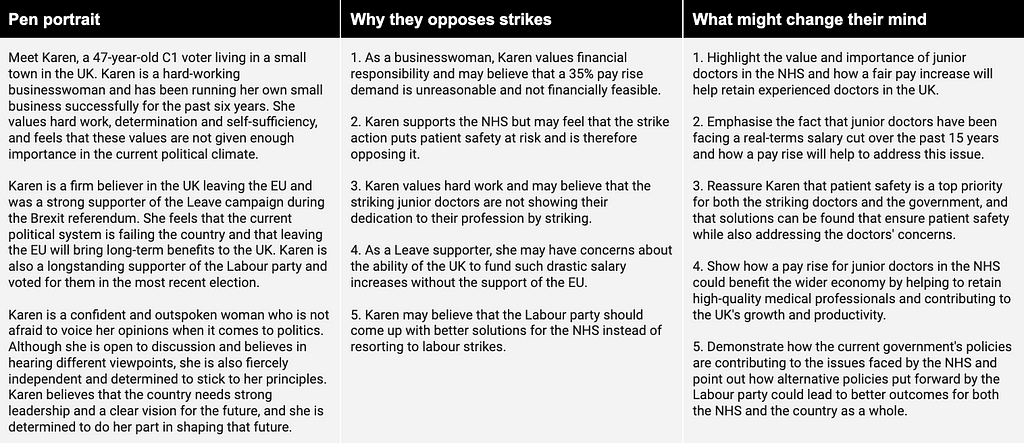

The first step is to understand why the different personas oppose or support the strikes. This is a very simple prompt through the OpenAI API. I simply provide the pen portrait of the voter, the context about the strikes, and whether they support or oppose the strikes. I then ask the AI to give 5 reasons why they might hold that opinion and 5 things that might change their mind.
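The blog doesn't publish its exact prompt, so here is a minimal sketch, assuming a plain string template, of how the three inputs described above (pen portrait, strike context, stance) might be assembled into one request. The function name `build_reasons_prompt` and all of the wording are hypothetical; the resulting string would then be sent as a message to a chat model through the OpenAI API, a call deliberately left out here.

```python
def build_reasons_prompt(pen_portrait: str, strike_context: str,
                         stance: str, n: int = 5) -> str:
    """Assemble a hypothetical prompt asking an AI persona why it holds a
    stance on the strikes and what might change its mind. The wording is
    illustrative only, not the prompt used in the blog."""
    # Only the two stances used in the blog are accepted.
    if stance not in ("support", "oppose"):
        raise ValueError("stance must be 'support' or 'oppose'")
    return (
        f"You are the following UK voter:\n{pen_portrait}\n\n"
        f"Context about the junior doctors' strikes:\n{strike_context}\n\n"
        f"You {stance} the strikes.\n"
        f"1. Give {n} reasons why you might hold this opinion.\n"
        f"2. Give {n} things that might change your mind.\n"
    )


if __name__ == "__main__":
    prompt = build_reasons_prompt(
        pen_portrait="Jamie, a young Londoner ...",  # hypothetical placeholder
        strike_context="Summary of a news article about the strikes ...",
        stance="support",
    )
    print(prompt)
```

Keeping the persona, context and stance as separate arguments makes it easy to loop over a whole sample of personas with the same template, which matters later when the same personas are reused for message testing.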

Here are a couple of examples to show what it comes up with. The reasons generated seem pretty plausible and are consistent with the pen portraits.

First up is Jamie, a young Londoner who supports the strikes…

And here's Karen who opposes them…

Unfortunately, there's no 'ground truth' data to compare this to. I wasn't able to find any information online about why people support or don't support the strikes. I couldn't even find a vox pop with people on the street.

But perhaps that illustrates the potential value of this approach. When there's limited information available, this can improve our understanding of an issue. Or at the very least help us to see an issue from the perspective of different voter groups.

AI director of communications?

So now we have some ideas of what might sway people's opinions. Using that we can let the AI come up with some communications strategies. We can think of this as AI Malcolm Tucker without the swearing.

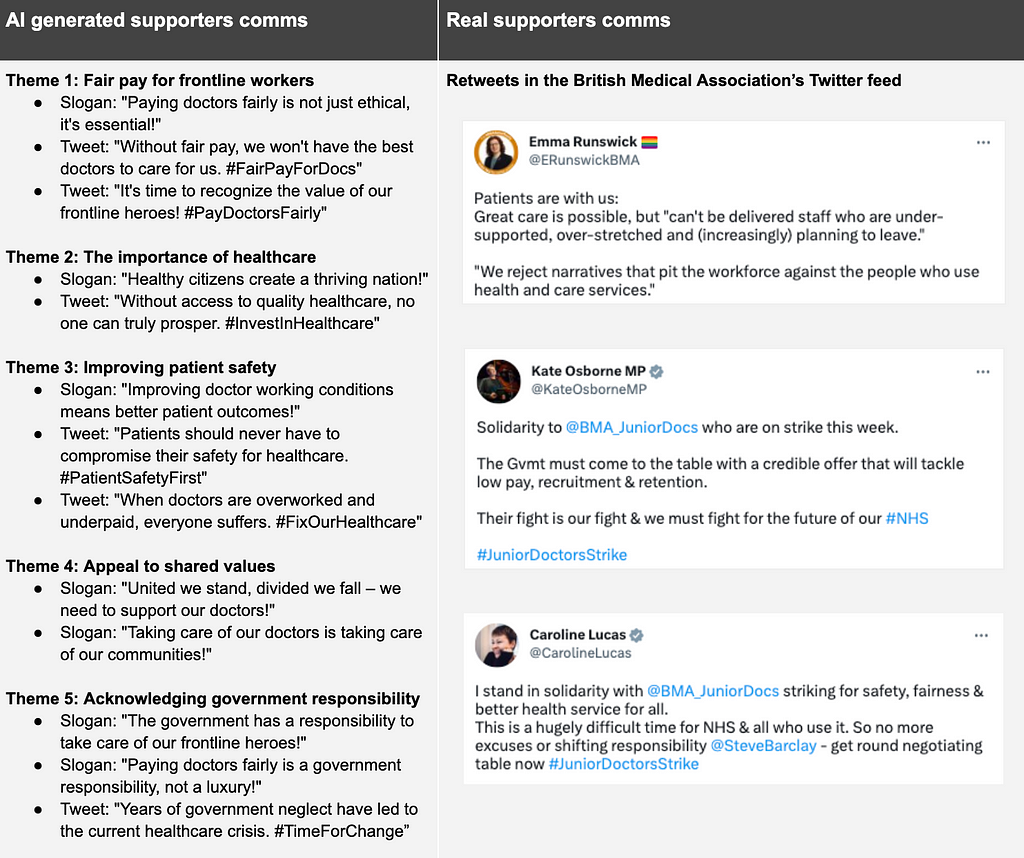

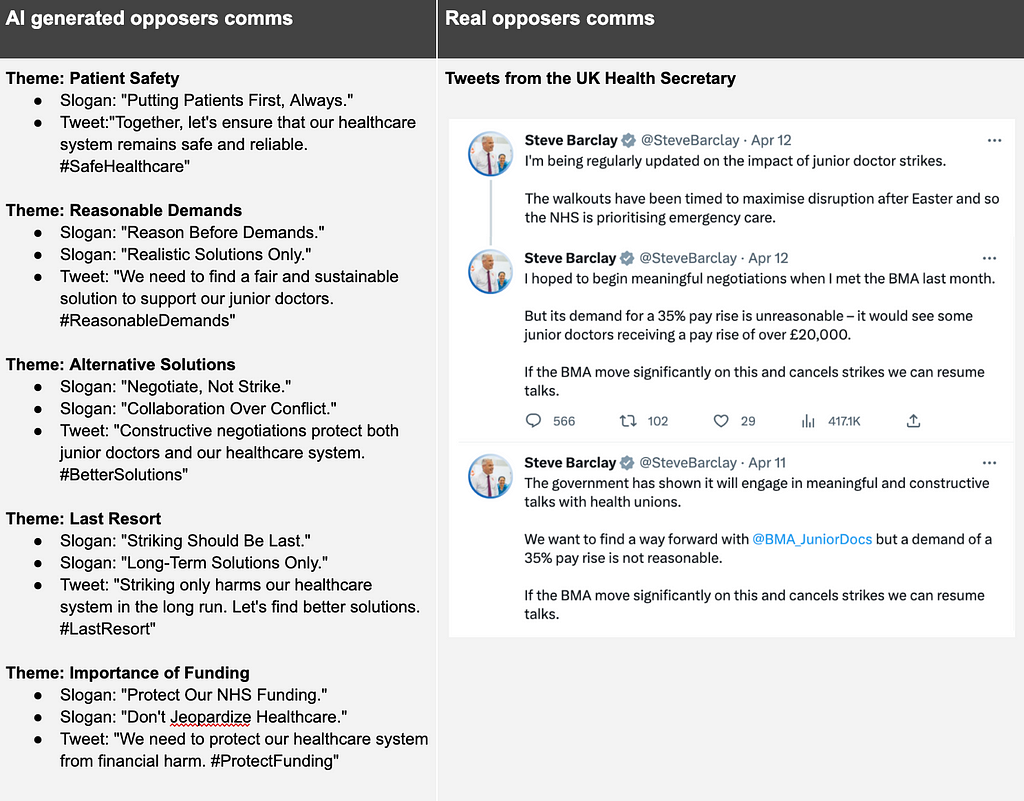

I use a simple prompt and give it some examples of the messages that might change minds from the step above. I ask it to identify the 5 most consistent themes within those and then create some comms messaging based on that, in particular to create some slogans and Tweets for each theme.
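As with the first step, the exact prompt isn't given, so the following is only a sketch, assuming the "things that might change their mind" from the previous step have been collected as plain strings. It shows one way to pass them in as a bulleted list and ask for the most consistent themes, each with a slogan and a Tweet. The function name `build_themes_prompt` and its wording are hypothetical.

```python
def build_themes_prompt(messages: list[str], n_themes: int = 5) -> str:
    """Build a hypothetical prompt asking a model to group mind-changing
    messages into themes and draft a slogan and a Tweet for each."""
    # Drop empty or whitespace-only entries and format the rest as bullets.
    bullet_list = "\n".join(f"- {m.strip()}" for m in messages if m.strip())
    return (
        "Below are things that might change voters' minds about the "
        "junior doctors' strikes:\n"
        f"{bullet_list}\n\n"
        f"Identify the {n_themes} most consistent themes in these messages. "
        "For each theme, write a short slogan and an example Tweet."
    )


if __name__ == "__main__":
    examples = [
        "Doctors deserve a fair wage",          # hypothetical examples
        "Overworked doctors put patients at risk",
    ]
    print(build_themes_prompt(examples))
```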

Again, there's no ground truth to say whether the messages generated are right or wrong, good or bad. To give something to compare against, I had a scan through the Twitter pages for the British Medical Association (BMA) and the UK Health Secretary (in effect the two sides of this dispute) to see what kind of messages they were putting out at that time.

The tables below show the AI generated communications themes and messages on the left hand side alongside some illustrative real Tweets from the same side of the argument.

For the comms supporting the strikes, there are some consistent themes across the AI and real messages: the need to pay doctors fairly or risk losing them, patient safety, and shifting responsibility onto the government.

Comparing the AI-generated comms opposing the strikes, we again see some common language, in particular the call for 'reasonable' pay demands and for 'constructive' talks.

So we can see that our AI comms strategists have come up with messaging that isn't too far off what both sides are putting out. Perhaps that says less about the sophistication of the AI and more about the simplicity of the real world comms.

It's important to underline that the AI model has a training cutoff in September 2021, so it has no knowledge of these strikes as a live political issue. The only piece of context the model has been given is a single news article. Clearly you could imagine providing the AI with a lot more contextual information to help it create more nuanced messaging and you could also provide it with more guidance on the style of messaging you wanted it to generate.

Pre-testing our messages with the 'public'

So at this point we have some ideas for communications strategies that both sides of the argument could use. But if they can only choose one, which is going to be most effective?

To figure that out, I again went back to the sample of personas. For each persona, I ask the AI to say which of three different comms messages might change their mind.

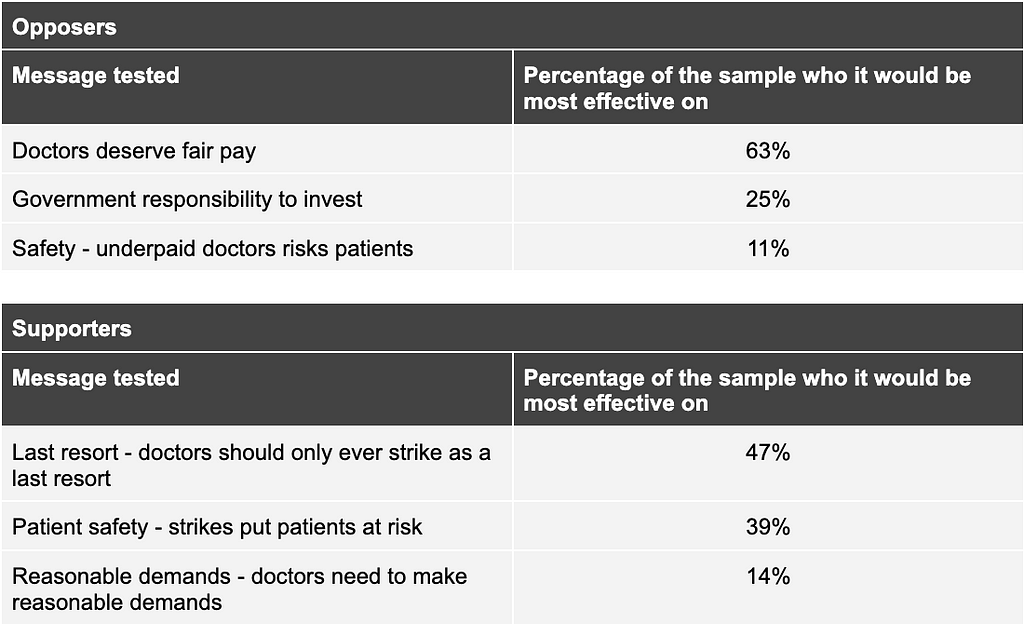

For personas that oppose strikes, I test three counter messages from the five generated by the AI. These were a) Fair pay — that doctors deserve a fair wage, b) Safety — that overworked and underpaid doctors are bad for patient safety and c) Government's responsibility — how the strikes are really the fault of the government for underinvestment.

And for the supporters I again test three counter messages. a) 'Reasonable demands' — how doctors need to make reasonable demands and be realistic about pay, b) 'last resort' — that doctors should only ever strike as a last resort and c) 'patient safety' — that striking doctors are putting patient safety at risk.

The tables below show the percentage of the sample for which each message was selected as most effective.
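The percentages in these tables come from a simple tally. Here is a minimal sketch of that step, assuming each persona's reply has already been parsed into exactly one of the three message labels; the labels and the four example responses below are made up for illustration and are not the blog's data. The function name `message_shares` is hypothetical.

```python
from collections import Counter


def message_shares(choices: list[str], options: list[str]) -> dict[str, float]:
    """Return the percentage of personas that picked each option as the
    message most likely to change their mind."""
    if not choices:
        raise ValueError("no persona responses to tally")
    # Reject labels that don't match a tested message (e.g. parsing errors).
    unknown = set(choices) - set(options)
    if unknown:
        raise ValueError(f"unexpected labels: {sorted(unknown)}")
    counts = Counter(choices)
    total = len(choices)
    # Options nobody picked still appear, with a share of 0.
    return {opt: 100 * counts[opt] / total for opt in options}


if __name__ == "__main__":
    options = ["fair pay", "safety", "government"]
    # Made-up responses for illustration only, not the blog's results.
    choices = ["fair pay", "fair pay", "safety", "government"]
    ranked = sorted(message_shares(choices, options).items(),
                    key=lambda kv: kv[1], reverse=True)
    for label, pct in ranked:
        print(f"{label}: {pct:.1f}%")
```

Raising an error on unexpected labels, rather than silently dropping them, makes it obvious when a model reply didn't map cleanly onto one of the tested messages.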

So we can see that our message testing suggests that, for those who oppose the strikes, messaging about doctors deserving fair pay is likely to be most effective.

On the other hand to change the views of those supporting the strikes, the framing should be about how striking should only be a last resort and that it puts patient safety at risk.

Once again, we don't have any data to cross reference the validity of these results. And I suspect the results from this type of testing could be very sensitive to how each of the different messaging strategies is described in the prompt.

Conclusions

So we can see that, even with limited contextual information, an AI-simulated focus group can generate reasonable insights into why people from different political perspectives might support or oppose the strikes. We were also able to generate and test plausible messaging strategies that echo some of the real messages put forward by both sides.

It's important to stress that, unlike the AI survey method in the previous blog, I'm not aware of research that has validated AI qualitative research findings against human research results. I'm sure someone is already working on that.

So, assuming the methods are valid, what might the applications be?

- Limited time or money: Don't have the budget for a focus group, or need something tested in the next hour? This could solve that problem.

- Filtering down a long list of messaging strategies: It could also be used to filter a long list of ideas prior to a real focus group. AI personas don't lose patience or get tired if you test 50 different messages with them.

- Hard to reach groups: Some voter groups could be hard to drag to a focus group. This could let you understand their perspective.

- Sensitive focus group content: Imagine you have an embarrassing story that might leak to the press and you want to know how the public might react. Well, this could let you gauge the possible fallout without the risk of the story getting out.

Diagnosing Opinions: Using AI to simulate focus groups on Junior Doctor strikes was originally published in Behavioural Insights on Medium, where people are continuing the conversation by highlighting and responding to this story.

Market confidence is an inherently social concept — and so needs a social approach

Rising interest rates have pushed up bond yields around the world, driving down the value of existing bonds. Financial institutions with sizable holdings, accumulated as part of what used to be prudent risk-management strategies in pre-inflation times, saw their portfolio valuations plummet.

Silicon Valley Bank (SVB), the venture capital banker of tech innovators and life-science companies, was no exception. Faced with larger-than-expected withdrawals, initially by clients with primarily uninsured deposits (i.e. deposits over $250,000), the bank rushed to meet depositor demands for cash, in turn realising further losses. Within a matter of days, the run on the bank was in full swing and, despite the unprecedented move by the Fed to protect all deposits, SVB failed; it has since been bought by First Citizens Bank.

In what is arguably a textbook case of financial contagion, other financial institutions with links to SVB or with pre-existing weak performance soon found themselves in similar difficulties, thanks in part to the belief that a wider domino effect had been unleashed. These included Signature Bank, a crypto lender (resulting in a takeover by New York Community Bank), and First Republic Bank, a lender to the 'wealthy' (which has since received a $30bn lifeline from a consortium of US banks).

On the other side of the Atlantic, Credit Suisse, marred by a long series of scandals, was unable to stay afloat even with a CHF 50bn (roughly $54bn) lifeline from the Swiss National Bank, and was subsequently taken over by UBS.

History has shown that it ain't over till it's over. A lot depends on market 'sentiment', ie economic actors' beliefs or attitudes towards specific financial instruments, institutions or entire market segments, in turn influencing their behaviour.

A critical element shaping confidence is the expectation of how others will act. Professor Robert Shiller has argued that confidence can be shaped by popular narratives that propagate through the economy: stories that people use to make sense of the world around them, and which they share with others.

This is related to, but separate from, an evidence-based assessment of the strength of a bank's fundamentals. The Diamond-Dybvig model captures the difference: in it, a run can happen even at a sound bank if depositors expect others to withdraw. 'Fundamentals' here refers to the core economic components of a bank's balance sheet: its assets and liabilities, and its ability to borrow or lend.

If everyone merely responded to fundamentals, we would not have a situation where otherwise solvent banks were subject to bank runs — in normal times their (enhanced) capitalisation ratios are more than sufficient to cover short-term depositor calls on their funds. As such, market confidence is an inherently social concept, calling for a social approach.
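The self-fulfilling logic can be made concrete with a stylised calculation in the spirit of Diamond-Dybvig. This is a simplified sketch, not the model as published: the balance-sheet parameters (`liquid`, `long_return`, `salvage`) and the rule that a patient depositor withdraws whenever waiting pays less than the 1 promised to early withdrawers are illustrative assumptions. The same bank, with the same balance sheet, stays safe if depositors expect few others to withdraw and fails if they expect many to.

```python
def payoff_to_waiting(f, liquid=0.2, long_return=1.5, salvage=0.5):
    """Per-depositor payout at maturity for those who wait, when a fraction
    f (0 <= f < 1) of depositors withdraws early and is promised 1 each.

    Hypothetical balance sheet per unit of deposits:
      liquid      - cash held as reserves
      long_return - maturity value of each unit of the long-term asset
      salvage     - cash raised per unit of long asset sold early (fire sale)
    """
    if not 0 <= f < 1:
        raise ValueError("f must be in [0, 1)")
    long_asset = 1 - liquid
    if f <= liquid:
        # Early withdrawals are paid from reserves; leftover cash is kept.
        remaining_value = (liquid - f) + long_asset * long_return
    else:
        # Reserves run out, so the bank fire-sells part of the long asset.
        units_sold = (f - liquid) / salvage
        if units_sold >= long_asset:
            return 0.0  # assets exhausted: nothing left for those who wait
        remaining_value = (long_asset - units_sold) * long_return
    return remaining_value / (1 - f)


def best_response(expected_f, **balance_sheet):
    """A patient depositor waits only if waiting pays at least the 1
    promised to early withdrawers (a simplifying assumption)."""
    if payoff_to_waiting(expected_f, **balance_sheet) >= 1:
        return "wait"
    return "withdraw"


for expected_f in (0.15, 0.3, 0.5, 0.6):
    print(f"expect {expected_f:.0%} to withdraw -> {best_response(expected_f)}")
```

With these made-up numbers the tipping point is an expected withdrawal share of about 40%: below it, waiting pays more than withdrawing; above it, withdrawing is the best response. Nothing about the bank's assets changes between the two cases, only depositors' beliefs about each other.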

What can Behavioural Insights offer to policy makers and central bankers faced with the mammoth task of convincing economic actors to "stay calm" in times of crisis? Drawing on our extensive experience, we offer 3 suggestions that are easy, cheap and fast to implement:

- Pre-test communications to ensure that they achieve their intended impact

- Correct misperceptions around risk, and

- Invest in ex-ante preparedness by de-biasing forecasting and decision-making processes.


1. Turn communications from an art into a science

Communications are critical in a crisis. For example, in the midst of the euro crisis in 2012, the president of the European Central Bank, Mario Draghi, promised to "do whatever it takes". He did not announce a single policy measure, yet risk premiums on the 10-year bonds of distressed countries such as Italy and Spain dropped sharply in response, and his speech has been credited with drastically narrowing spreads on 10-year Eurozone government bonds.

Draghi's speech was targeted at financial markets, but the same holds for communications aimed at the general public, particularly when delivered by a highly credible and powerful messenger. In Narrative Economics, Shiller describes how, in the midst of a bank run in 1933, President Roosevelt addressed the American public directly on the radio, asserting: "You people must have faith. You must not be stampeded by rumours or guesses. Let us unite in banishing fear." Roosevelt's personal and direct request was effective: when the banks reopened, money flowed back into the financial system rather than out of it.

Great communication can seem like an art: hard to get right, but you know what good looks like when you see it. However, it can also be treated like a science. Speeches, announcements and press releases can be rapidly tested and iterated before they are released. For instance, at BIT we have worked with governments to test messages to reduce panic buying, and with the Bank of England to test inflation report releases. This can be done quickly and at scale, using large samples to identify the most effective messaging.

As the COVID pandemic unfolded, the key messages for the public evolved from hand-washing to compliance with lockdown restrictions to take-up of testing and vaccinations. Throughout, we conducted rapid testing with tens of thousands of participants, often within the space of days, to optimise government communications. The same approach could be deployed by financial regulators in the face of financial instability and wavering market confidence.
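One common way to read a message test of this kind is a two-proportion z-test, comparing the share of respondents who give a target answer under two versions of a message. The sketch below assumes a simple randomised split and uses made-up counts (respondents saying they would move their savings after reading each of two draft statements); it illustrates the idea and is not a description of how BIT analyses its trials.

```python
import math


def two_proportion_z_test(hits_a, n_a, hits_b, n_b):
    """Compare the share of 'hits' (e.g. respondents who say they would
    withdraw their savings) between message A and message B.

    Returns the difference in proportions, the z statistic and a
    two-sided p-value under the normal approximation.
    """
    p_a, p_b = hits_a / n_a, hits_b / n_b
    # Pooled proportion: the best estimate if both messages work equally well.
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return p_a - p_b, z, p_value


# Hypothetical results: 2,000 respondents randomly shown each draft statement.
diff, z, p = two_proportion_z_test(hits_a=420, n_a=2000, hits_b=330, n_b=2000)
print(f"difference = {diff:.1%}, z = {z:.2f}, p = {p:.4f}")
```

The pooled standard error is the usual choice when testing the null hypothesis that both messages have the same effect; with samples this large, a gap of a few percentage points is unlikely to be due to chance alone.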

2. Correct misperceptions around risk

People often understand risk through the lens of emotion rather than probability. This is why we overreact to visceral, 'dread' risks like plane crashes and are, often unwittingly, less attentive to risks whose harm accumulates over a longer timeframe, such as smoking or even environmental damage.

However, as Spiegelhalter's excellent work on risk has shown, if we give people salient, relevant information about risks, they can update their priors and their behaviour. In this case, the information might be about deposit insurance: the fact that, for many citizens, the government already has a scheme in place that protects their deposits.

For instance, in the UK, awareness that the FSCS deposit insurance scheme covers ISAs (popular tax-free savings accounts) is relatively low, potentially leading savers to overestimate the risk to their personal finances. Relatedly, individuals are particularly sensitive to perceived scarcity and are loss averse. If they have even an inkling that the bank may run out of money, and that they stand to lose out unless they withdraw their deposits now, they will feel compelled to act to protect themselves, thus fulfilling the prophecy.

Boosting awareness of, and confidence in, deposit protection schemes could lower perceived 'threat appraisal', to borrow a term from protection motivation theory. Ambiguity about what is and isn't covered can create more volatility, as demonstrated recently in the US policy debate over whether deposits above $250,000 should be covered.
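The threat-appraisal point can be illustrated with a back-of-the-envelope expected-loss calculation. This is a hypothetical sketch: the £85,000 protection limit (the FSCS limit per person, per authorised institution at the time of writing) is treated as a parameter, the recovery rate on uninsured balances defaults to zero, and the saver's balance and perceived failure probability are made up. A saver who does not know their ISA is covered sees a sizeable potential loss; one who does sees none, whatever they feel about the bank.

```python
def uninsured_exposure(balance, protection_limit):
    """Portion of a balance that sits above the deposit-protection limit."""
    return max(0.0, balance - protection_limit)


def expected_loss(balance, perceived_failure_prob, protection_limit, recovery_rate=0.0):
    """Perceived expected loss = P(bank fails) x uninsured amount x (1 - recovery).
    recovery_rate is a hypothetical share of uninsured money eventually returned."""
    exposure = uninsured_exposure(balance, protection_limit)
    return perceived_failure_prob * exposure * (1 - recovery_rate)


isa_balance = 20_000   # hypothetical saver's ISA balance (GBP)
perceived_risk = 0.10  # hypothetical, emotionally inflated failure probability

# Saver who (wrongly) believes the ISA is unprotected: effective limit of zero.
print(expected_loss(isa_balance, perceived_risk, protection_limit=0))
# Saver who knows the ISA is covered up to the protection limit.
print(expected_loss(isa_balance, perceived_risk, protection_limit=85_000))
```

Correcting the misperception removes the loss term entirely, even if the saver's gut feeling about the bank (the perceived failure probability) does not change at all.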


3. Focus on anticipation rather than response

Finance ministers and central bankers, as well as their staff, are, like all of us, prone to errors in forecasting and decision-making. A particularly costly one is recency bias: the tendency to give more weight to events that happened recently than to those further in the past (perhaps even before we were born), or to possibilities that have yet to materialise. In effect, this means that policy makers collectively tend to 'fight the last war' rather than the next one.
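A crude way to see how recency bias distorts forecasts is to compare a base rate estimated from a long record with one estimated only from recent years. The crisis record below is entirely made up, and a short look-back window is only a rough stand-in for the bias described above; the point is that a window which happens to contain no crisis implies that none is likely.

```python
def crisis_rate(crisis_years, current_year, lookback):
    """Share of the last `lookback` years (before `current_year`) with a crisis."""
    window = range(current_year - lookback, current_year)
    return sum(1 for year in window if year in crisis_years) / lookback


# Hypothetical record: crises in years 5, 22 and 38 of a 50-year history.
crises = {5, 22, 38}
print(crisis_rate(crises, current_year=50, lookback=50))  # long memory
print(crisis_rate(crises, current_year=50, lookback=10))  # recent years only
```

The long-memory estimate puts the annual risk at 6%; the recent-years estimate puts it at zero, simply because the last crisis falls outside the window.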

Add to that the fact that policy makers often surround themselves with people who are similar to them in education and background (see eg the statistics on educational diversity at the IMF, and on gender and ethnic diversity at the BoE). While by no means the only factor, some have argued that this lack of diversity and inclusion has weakened these institutions' ability to conduct comprehensive horizon scanning for risks (see for example IEO 2023 and IEO 2011).

In our Behavioural Government report, we propose a range of strategies to tackle common biases in how risks and problems are noticed, processed and managed. They include assembling cognitively diverse teams, introducing mechanisms for challenging underlying assumptions, and building flexibility into policy action to allow for more agile, proportionate and appropriate responses.

Consciously de-biasing forecasting processes, alongside agreeing in advance on the triggers for action when specific events come to pass, can go a long way towards better-calibrated policy making during a crisis, saving both lives and money.

To conclude, we cannot prevent shocks and crises from occurring, whether they are financial, humanitarian or climate-related. But we can anticipate and handle them better. Behavioural insights focussed on effective communication, correcting risk misperceptions, and de-biasing forecasting and decision-making processes have a key role to play in helping policy makers and regulators achieve their policy objectives.

By Eva Myers, Director, Economic Policy, Nida Broughton, Director, Economic Policy, Tom van Zantvliet, Associate Advisor & Alexander Clark, Head of International APAC at BIT

Women only apply when 100% qualified? Putting received wisdom to the test

Market confidence is an inherently social concept — and so needs a social approach was originally published in Behavioural Insights on Medium, where people are continuing the conversation by highlighting and responding to this story.

In a recent blog post, I explored whether AI simulations of people completing cognitive tasks could replicate human data. TL;DR: they can.

Since then, I have read this thought-provoking paper, which shows that simulated AI personas can accurately reproduce political survey data in the US.

I wanted to see if I could replicate this in the UK. My idea was to use demographic data to simulate personas of UK voters that are roughly representative of the population, then simulate opinion polling of these AI personas on a current political issue (I used the ongoing strikes by junior doctors) and compare the results to real polling data.

I followed the four steps below:

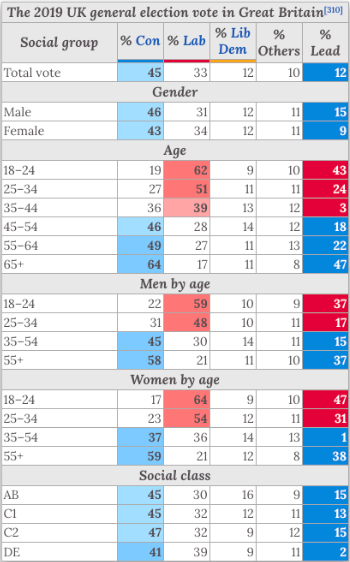

Step 1: Demographic data on UK voters

The most easily available data on UK voters I found came from the Wikipedia page for the 2019 UK general election. See the snapshot below.

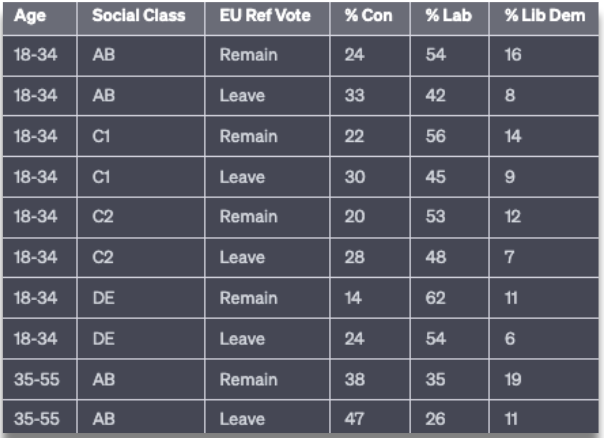

To create a more detailed demographic dataset, I got ChatGPT to review this data and infer a more detailed breakdown of the data (e.g. splitting age group by social class and Brexit vote).

Clearly this is not totally accurate, but it will suffice for this proof-of-concept analysis. The beginning of ChatGPT's output is shown below.

Based on this data, I was able to create a sample of 200 'voters', each with basic demographic information.
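One way to turn a demographic breakdown into a list of individual 'voters' is to sample each person's profile in proportion to its share of the population. The sketch below assumes the breakdown is a table of (age group, social class, Brexit vote, 2019 party) cells with population shares; the cells, shares and the `sample_voters` helper are hypothetical placeholders, not the figures ChatGPT produced or the author's code.

```python
import random

# Placeholder breakdown: each cell is a demographic profile and its population share.
# These shares are illustrative only; a real table would have many more cells.
BREAKDOWN = [
    ({"age": "18-34", "social_class": "ABC1", "brexit_vote": "Remain", "party_2019": "Labour"}, 0.30),
    ({"age": "35-54", "social_class": "C2DE", "brexit_vote": "Leave", "party_2019": "Conservative"}, 0.40),
    ({"age": "55+", "social_class": "ABC1", "brexit_vote": "Leave", "party_2019": "Conservative"}, 0.20),
    ({"age": "35-54", "social_class": "ABC1", "brexit_vote": "Remain", "party_2019": "Liberal Democrat"}, 0.10),
]


def sample_voters(breakdown, n, seed=None):
    """Draw n synthetic voters, with replacement, weighted by population share."""
    rng = random.Random(seed)
    profiles = [profile for profile, _ in breakdown]
    weights = [share for _, share in breakdown]
    chosen = rng.choices(profiles, weights=weights, k=n)
    # Copy each profile and add an id so voters can be tracked through later steps.
    return [{"id": i, **profile} for i, profile in enumerate(chosen)]


voters = sample_voters(BREAKDOWN, n=200, seed=1)
print(len(voters), voters[0])
```

A fixed seed keeps runs reproducible. An alternative design is quota allocation (rounding each share times 200 to a whole number of voters per cell), which matches the shares exactly instead of only on average.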

Step 2: Simulating AI personas

This is where we use the creative power of the AI. With 200 'voters' drawn from the UK population, I used GPT-3.5 to create a pen portrait for each of them.

The prompt I developed asked the AI to create a pen portrait based on the following data about a person: age, social class, Brexit vote and party vote in the 2019 general election. I encouraged it to be creative and think about the person's political views and values.
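The actual prompt is only shown as a screenshot, so the template below is a hypothetical reconstruction of its structure, not the author's exact wording. It fills the four attributes listed above into a single instruction string that could then be sent to a chat model; the API call itself is deliberately left out, and `build_portrait_prompt` is an illustrative name.

```python
def build_portrait_prompt(voter):
    """Build a pen-portrait prompt from one synthetic voter's attributes.
    The wording is illustrative, not the prompt used in the original analysis."""
    return (
        "Create a short pen portrait of a UK voter based on the following data.\n"
        f"Age group: {voter['age']}\n"
        f"Social class: {voter['social_class']}\n"
        f"Brexit vote: {voter['brexit_vote']}\n"
        f"Party vote in the 2019 general election: {voter['party_2019']}\n"
        "Be creative, and think about the person's political views and values."
    )


example_voter = {"age": "35-54", "social_class": "C2DE",
                 "brexit_vote": "Leave", "party_2019": "Conservative"}
print(build_portrait_prompt(example_voter))
```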

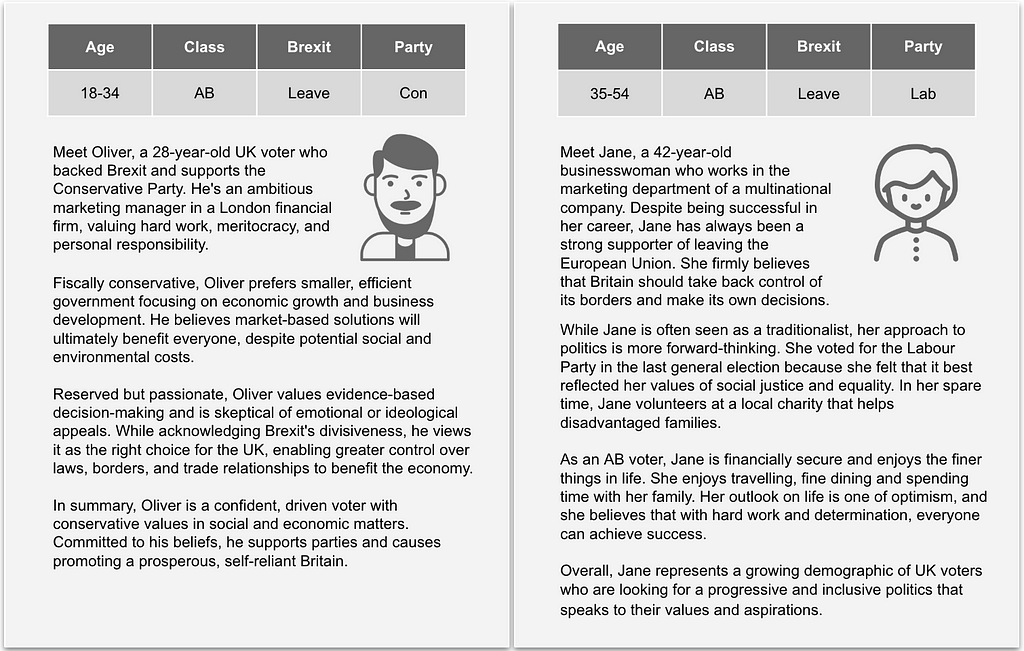

Here are a couple of examples it came up with:

Step 3: Polling the AI personas

I now have 200 personas like those above. Next, I needed a question to ask the 'voters' to see whether we could replicate actual public opinion.

Ipsos recently carried out a poll about public support for strikes by junior doctors, a very topical debate in the UK at the moment. The published data tables include a breakdown of results by demographics, so I could compare them with my simulated AI poll.

The prompt given to the AI was very simple: I asked it to read the voter's pen portrait along with a single recent news article about the doctors' strikes, and then say whether it thought this voter would 'support', 'oppose' or 'neither support nor oppose' the strikes.
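The polling step can be sketched the same way. The snippet below assumes the model replies in free text, maps each reply onto one of the three answer options, and turns the mapped answers into percentages. The prompt wording and the helper names (`build_poll_prompt`, `parse_answer`, `tally`) are hypothetical. A keyword match like this is fragile (a reply such as "would not oppose" would be misread), so in practice you might ask the model to answer with a single label only.

```python
from collections import Counter

ANSWERS = ("support", "oppose", "neither support nor oppose")


def build_poll_prompt(portrait, article):
    """Hypothetical polling prompt: pen portrait + one news article + the question."""
    return (
        f"Here is a description of a UK voter:\n{portrait}\n\n"
        f"Here is a recent news article:\n{article}\n\n"
        "Would this voter support, oppose, or neither support nor oppose the "
        "strikes by junior doctors? Answer with one of: " + ", ".join(ANSWERS) + "."
    )


def parse_answer(reply):
    """Map a free-text reply to one answer option (None if unrecognised).
    'neither' is checked first because that option contains the other two words."""
    text = reply.lower()
    if "neither" in text:
        return "neither support nor oppose"
    if "oppose" in text:
        return "oppose"
    if "support" in text:
        return "support"
    return None


def tally(replies):
    """Percentage choosing each option, among replies that could be parsed."""
    counts = Counter(parse_answer(r) for r in replies)
    valid = sum(counts[a] for a in ANSWERS)
    if valid == 0:
        raise ValueError("no parseable replies")
    return {a: 100 * counts[a] / valid for a in ANSWERS}


print(tally(["Support", "They would oppose the strikes.", "Support.",
             "Neither support nor oppose", "Unsure"]))
```

Unparseable replies ("Unsure" above) are dropped from the denominator here; reporting them separately, like a "don't know" category, would be an equally reasonable choice.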

Before jumping into the results, it's important to bear in mind that GPT-3.5 has a knowledge cut-off of September 2021. So while it has information and background knowledge about Brexit and the 2019 general election, its knowledge has limits: as far as it knows, Boris Johnson is still Prime Minister and there's no cost-of-living crisis.

Step 4: How did the simulated AI polling compare to the real thing?

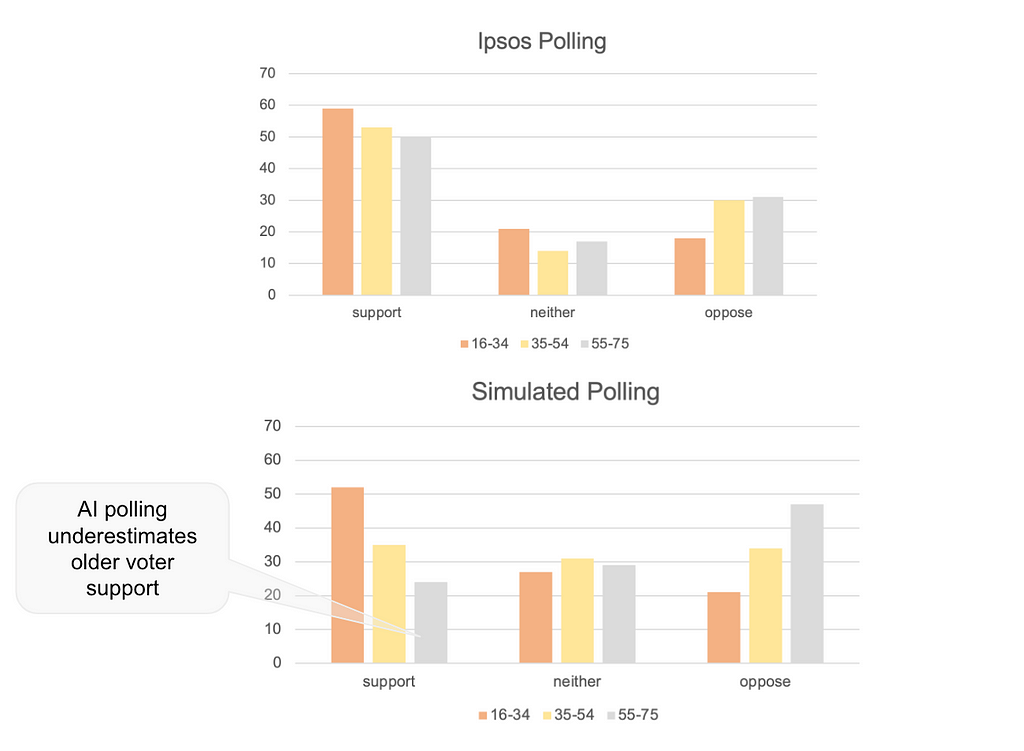

We'll first look at the breakdown by age. The graphs below compare the Ipsos poll to the simulated polling.

For younger voters, the AI sample is pretty close to the real poll: the average difference between answers is 5 percentage points. It's quite a bit worse for middle-aged and older voters, at 13 and 18 percentage points respectively, but overall still pretty good going!

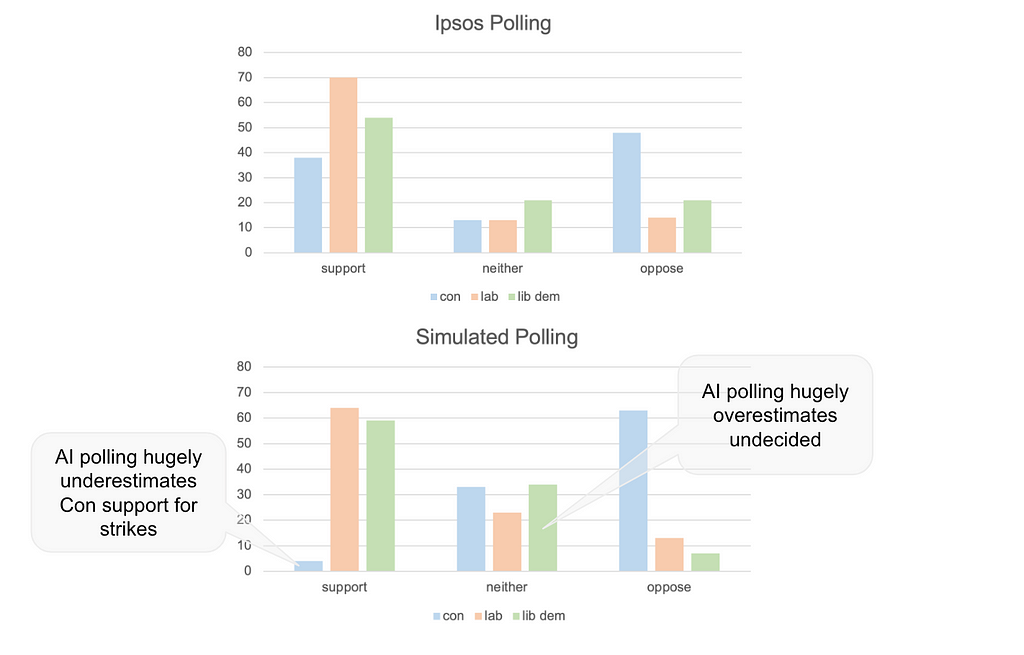

Next, I compared the results split by how people voted in the 2019 election. Again the AI polling is a bit hit and miss. The polling of Labour voters is pretty good — there's just a 6 percentage point difference on average. With the Lib Dems it's a bit worse at 11 percentage points.

But it does a very poor job with Conservative voters, expecting very few of them to support the strikes. The average difference between the actual results and the AI results was 23 percentage points.
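The comparisons above report an "average difference" in percentage points for each group without spelling out the formula. One plausible reading, assumed here, is the mean absolute gap across the answer options between the real and simulated shares for that group. The numbers below are placeholders, not the Ipsos or simulated results, and `mean_abs_pp_difference` is an illustrative name.

```python
def mean_abs_pp_difference(actual, simulated):
    """Mean absolute difference, in percentage points, across answer options.
    Both arguments map each answer option to a percentage for the same group."""
    if actual.keys() != simulated.keys():
        raise ValueError("both polls must cover the same answer options")
    gaps = [abs(actual[k] - simulated[k]) for k in actual]
    return sum(gaps) / len(gaps)


# Placeholder shares (%) for one demographic group.
real_poll = {"support": 50, "oppose": 30, "neither support nor oppose": 20}
ai_poll = {"support": 56, "oppose": 26, "neither support nor oppose": 18}
print(mean_abs_pp_difference(real_poll, ai_poll))
```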

Should we be impressed?

On the one hand, the polling results are off by quite a long way for certain groups. We certainly wouldn't consider this an accurate poll.

That said, I found it quite surprising that such a simple approach would land at least in the same ballpark. This might be because attitudes to strikes are 'predictable'. In other words, it shouldn't take a super-intelligent AI to know that most Labour voters will support a strike. So it might not perform as well on a more subtle political issue.

I've no doubt that the performance of the AI polling could be improved considerably in a few ways:

- Use more accurate underlying demographic data than I used here, and a larger sample of 'voters'.

- Create more nuanced pen portraits, for example by telling the AI the type of media each voter consumes (here's a recent paper doing just that). The pen portraits I created focussed heavily on people's political views and voting record, which might have led the AI to treat everyone as a political partisan.

- Include more detailed context. Here I just provided a single news article about the strikes. More information about the issue and more background information about the political and economic climate might improve it.

- Train the model. A more time-intensive approach would be to create a large model of these personas and train them on the polling results from multiple surveys until they can accurately replicate historical surveys. This is absolutely doable with more time.

- Use a better large language model. I used GPT-3.5 since I don't have API access to GPT-4. I'm sure a better model would give better results.
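The first suggestion, a larger sample, can be quantified. Treating the synthetic voters as a simple random sample (an assumption, since the personas here were generated from inferred rather than measured data), the usual 95% margin of error for a proportion shows how much noise a 200-person sample carries on its own, before any modelling error. Subgroups such as Conservative voters are smaller still, so their margins are wider.

```python
import math


def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a proportion p from a sample of size n."""
    return z * math.sqrt(p * (1 - p) / n)


for n in (200, 1000):
    # p = 0.5 gives the widest (most conservative) margin.
    print(f"n = {n}: +/- {100 * margin_of_error(0.5, n):.1f} percentage points")
```

At n = 200 the margin is roughly 7 percentage points either way, which is comparable to some of the gaps reported above.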

Where could AI-based polling go next?